Kubeflow on EKS

Kubeflow

Kubeflow ↔ EKS Version Compatibility: https://awslabs.github.io/kubeflow-manifests/docs/about/eks-compatibility/

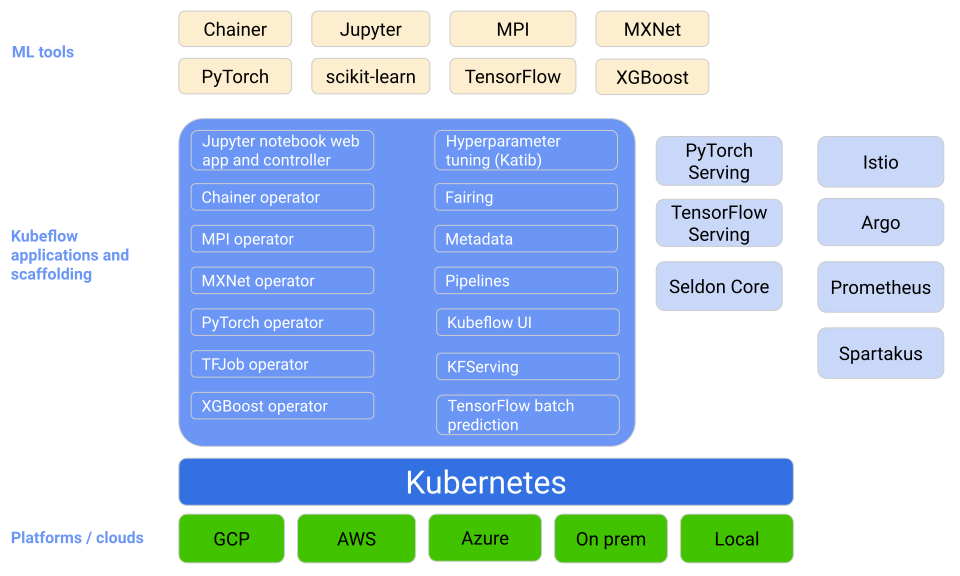

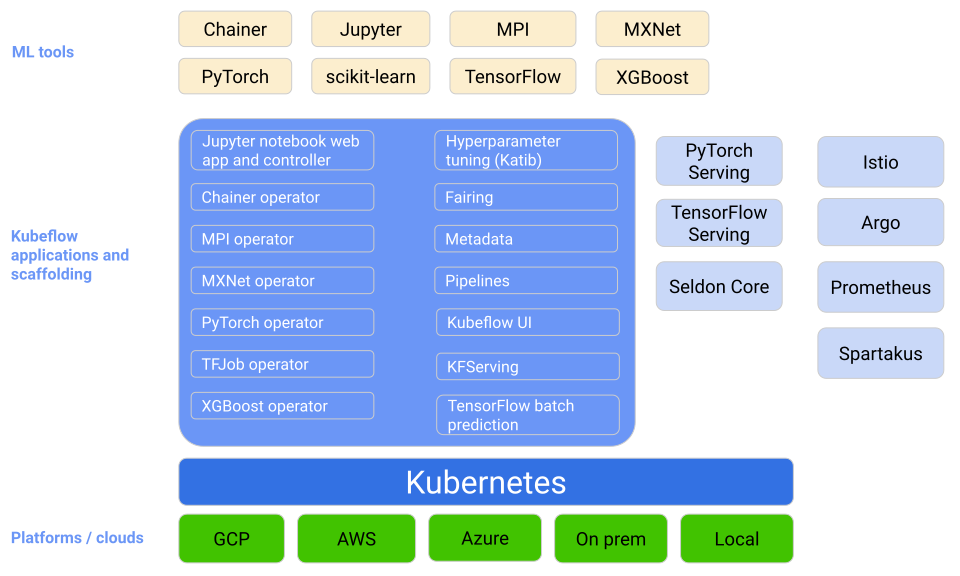

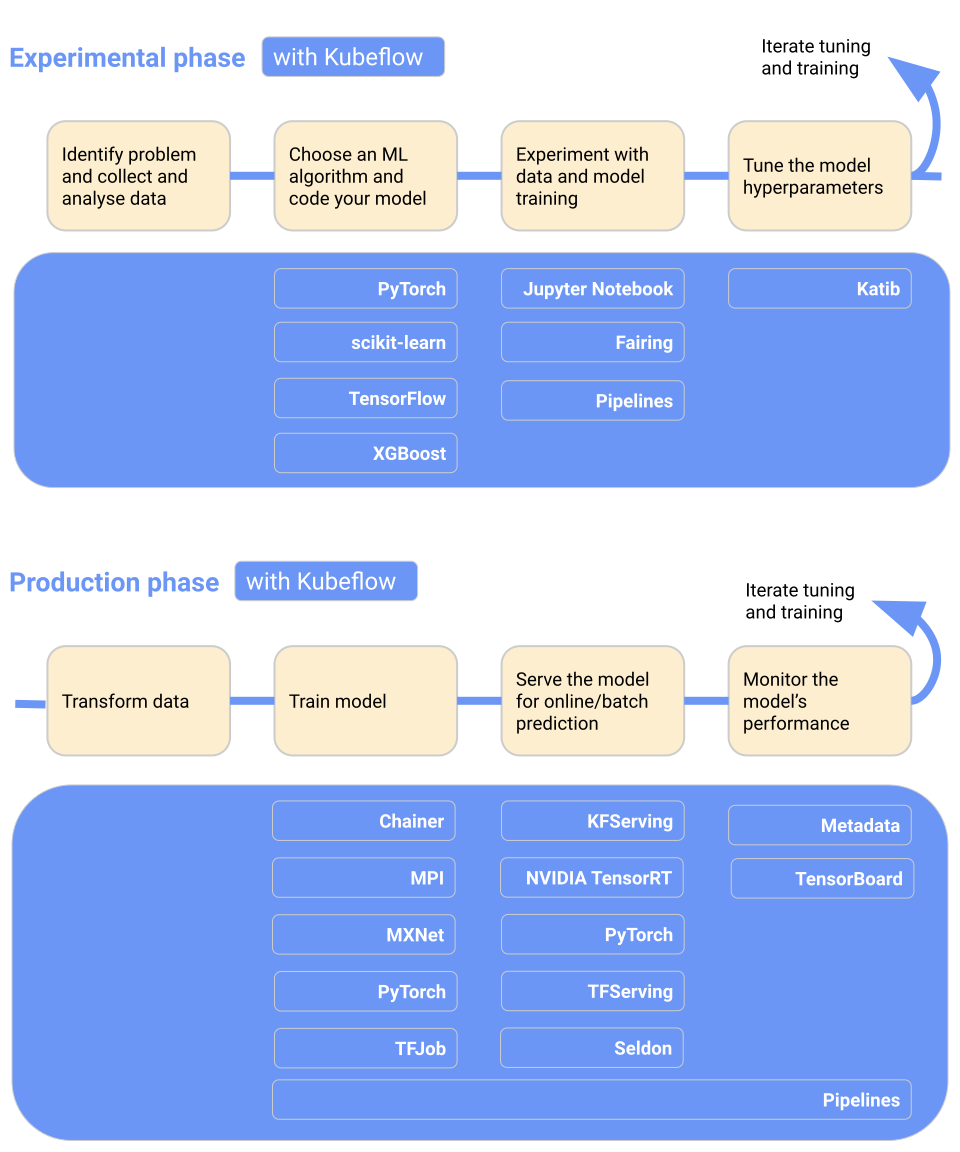

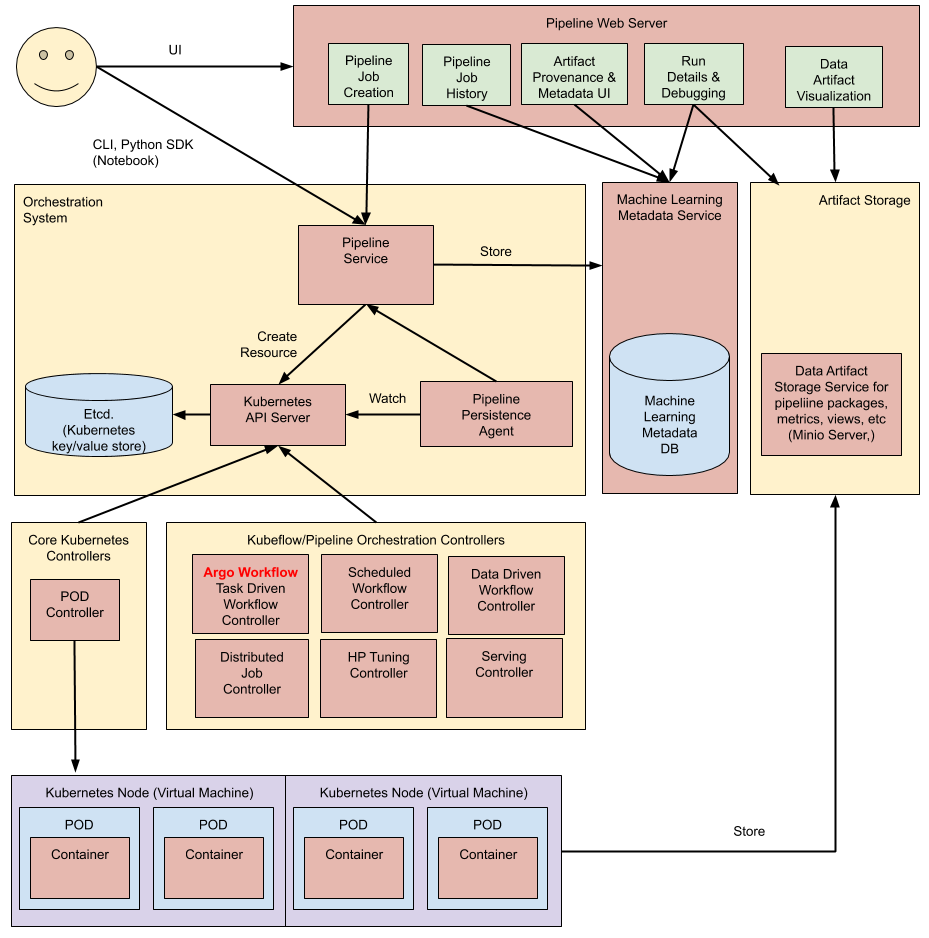

Kubeflow is an ecosystem that leverages Kubernetes, a popular container orchestration platform, to provide a unified and scalable environment for machine learning operations. It allows data scientists and machine learning engineers to work on their models and workflows in a consistent, cloud-native manner, ensuring reproducibility and scalability.

Kubeflow started as an open-source version of the way Google ran TensorFlow internally, based on a pipeline called TensorFlow Extended (TFX). It began simply as an easier way to run TensorFlow jobs on Kubernetes, but has since grown into a multi-architecture, multi-cloud framework for running end-to-end machine learning workflows.

Key Components of Kubeflow:

- Pipelines: Kubeflow Pipelines lets you create, manage, and orchestrate machine learning workflows. Pipelines are defined in Python with the KFP SDK, then run and monitored from a visual web UI, which makes collaboration and automation easier.

- Katib: Katib is the hyperparameter tuning component of Kubeflow. It automates the process of tuning hyperparameters for your machine learning models, saving time and resources. It can integrate with various machine learning frameworks.

- Training Operators: The Training Operator provides Kubernetes custom resources (such as TFJob and PyTorchJob) for running single-node and distributed training jobs with popular ML frameworks. It creates and manages the pods that make up each training job.

- KServe (formerly KFServing): This component serves machine learning models in a production-ready manner. It exposes models as REST (and gRPC) inference endpoints and provides autoscaling, canary rollouts between model versions, and monitoring.

- Metadata Store: The Metadata Store is a critical component for tracking and managing the lineage and metadata of machine learning experiments. It helps ensure that you can trace the history of a model and its data for reproducibility and compliance.

- Central Dashboard and Notebooks: Kubeflow provides a web-based Central Dashboard for managing pipelines and other components. Kubeflow Notebooks (which replaced the earlier JupyterHub integration) lets data scientists run Jupyter notebook servers inside the cluster to develop and run experiments within the Kubeflow environment.

Kubeflow Architecture

Kubeflow Components

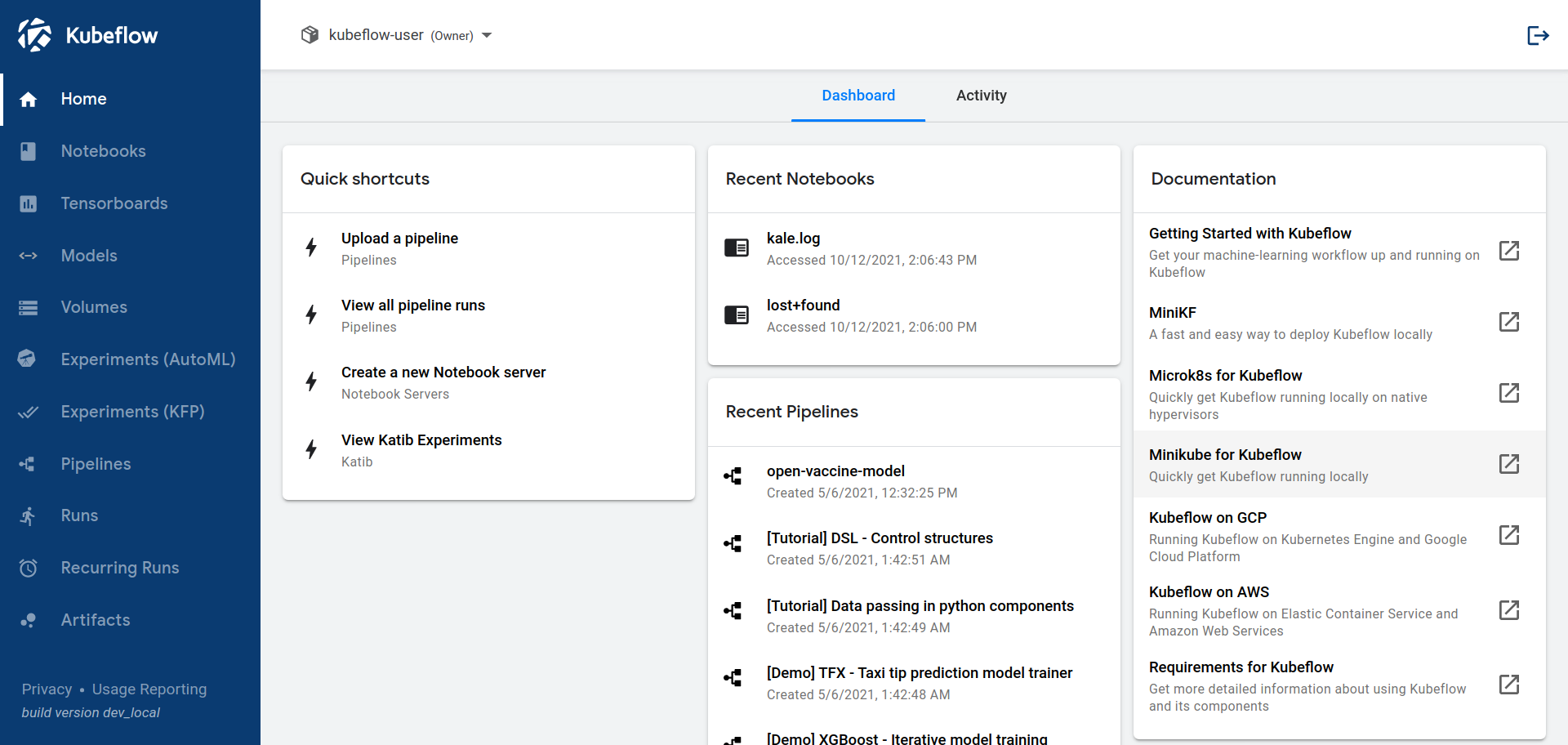

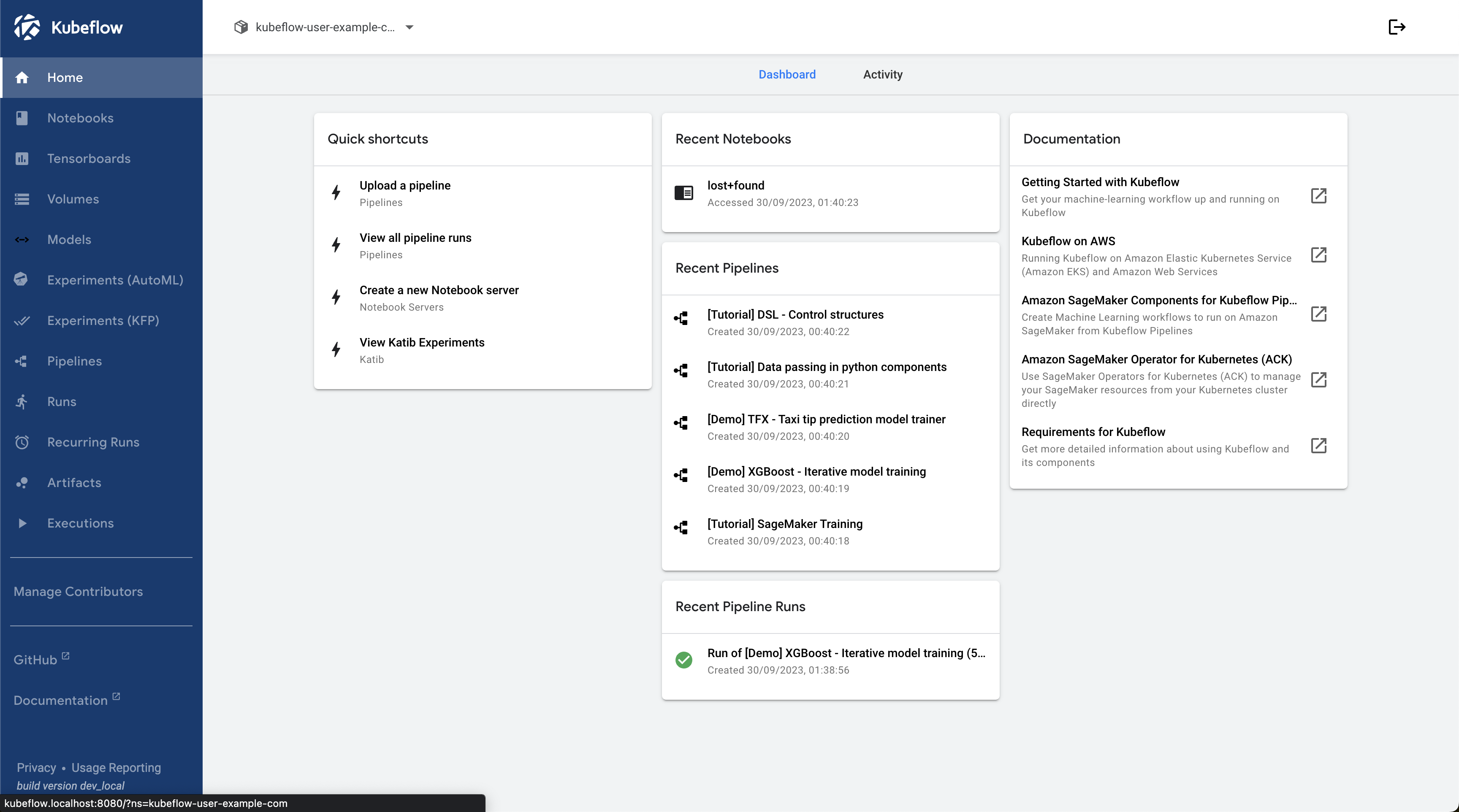

Central Dashboard

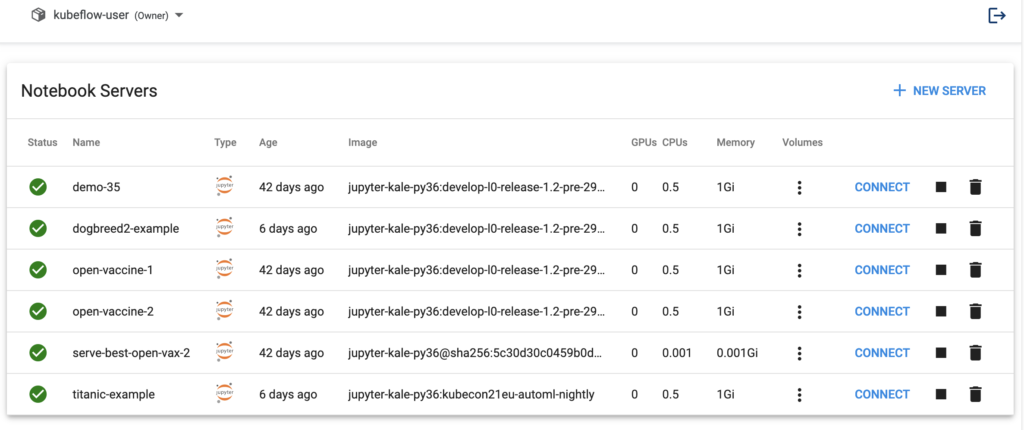

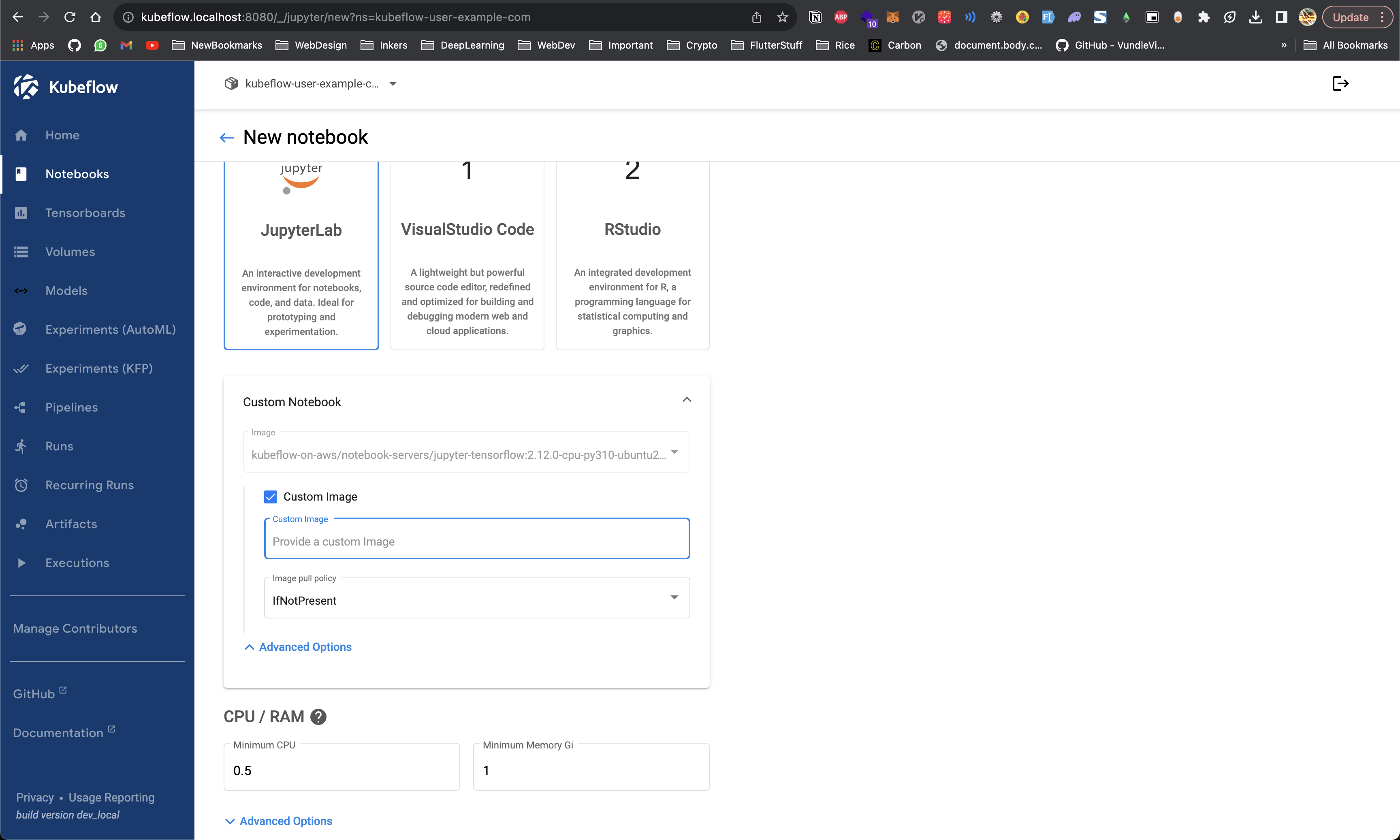

Kubeflow Notebooks

Building custom images for Kubeflow Notebooks: https://github.com/kubeflow/kubeflow/tree/master/components/example-notebook-servers#custom-images

You can always refer to Kubeflow's official images and build similar custom ones, for example the jupyter-pytorch image: https://github.com/kubeflow/kubeflow/blob/master/components/example-notebook-servers/jupyter-pytorch/Makefile

Kubeflow Training Operators

https://www.kubeflow.org/docs/components/training/pytorch/

PyTorchJob is a Kubernetes custom resource to run PyTorch training jobs on Kubernetes. The Kubeflow implementation of PyTorchJob is in [training-operator](https://github.com/kubeflow/training-operator).
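A PyTorchJob is an ordinary Kubernetes object, so its manifest can also be generated from Python. `kubectl apply -f` accepts JSON as well as YAML. The sketch below mirrors the `pytorch-simple` example that follows. The helper name `pytorchjob_manifest` is hypothetical (it is not part of any Kubeflow SDK), and only the standard library is used.

```python
import json
from typing import Dict, List


def pytorchjob_manifest(name: str, namespace: str, image: str,
                        command: List[str], workers: int = 1) -> Dict:
    """Build a kubeflow.org/v1 PyTorchJob with one Master and `workers` Workers.

    Hypothetical helper that mirrors the pytorch-simple YAML example.
    """

    def replica_spec(replicas: int) -> Dict:
        return {
            "replicas": replicas,
            "restartPolicy": "OnFailure",
            "template": {
                "spec": {
                    "containers": [{
                        # The training-operator looks for a container named "pytorch" by default.
                        "name": "pytorch",
                        "image": image,
                        "imagePullPolicy": "Always",
                        "command": list(command),
                    }]
                }
            },
        }

    return {
        "apiVersion": "kubeflow.org/v1",
        "kind": "PyTorchJob",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "pytorchReplicaSpecs": {
                "Master": replica_spec(1),
                "Worker": replica_spec(workers),
            }
        },
    }


if __name__ == "__main__":
    job = pytorchjob_manifest(
        name="pytorch-simple",
        namespace="kubeflow",
        image="docker.io/kubeflowkatib/pytorch-mnist:v1beta1-45c5727",
        command=["python3", "/opt/pytorch-mnist/mnist.py", "--epochs=1"],
    )
    # Print JSON that `kubectl apply -f` can consume.
    print(json.dumps(job, indent=2))
```

For example, redirect the output to a file such as `pytorchjob.json` (a hypothetical name) and run `kubectl apply -f pytorchjob.json`. Raising `workers` is the only change needed to scale out the Worker replicas.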

```yaml
apiVersion: "kubeflow.org/v1"
kind: PyTorchJob
metadata:
  name: pytorch-simple
  namespace: kubeflow
spec:
  pytorchReplicaSpecs:
    Master:
      replicas: 1
      restartPolicy: OnFailure
      template:
        spec:
          containers:
            - name: pytorch
              image: docker.io/kubeflowkatib/pytorch-mnist:v1beta1-45c5727
              imagePullPolicy: Always
              command:
                - "python3"
                - "/opt/pytorch-mnist/mnist.py"
                - "--epochs=1"
    Worker:
      replicas: 1
      restartPolicy: OnFailure
      template:
        spec:
          containers:
            - name: pytorch
              image: docker.io/kubeflowkatib/pytorch-mnist:v1beta1-45c5727
              imagePullPolicy: Always
              command:
                - "python3"
                - "/opt/pytorch-mnist/mnist.py"
                - "--epochs=1"
```

Kubeflow Pipelines

https://www.kubeflow.org/docs/components/pipelines/v1/introduction/

A pipeline in Kubeflow Pipelines is like a recipe for machine learning. It's a detailed plan that describes the steps involved in an ML workflow and how they fit together, much like the steps in a cooking recipe. Each step is called a "component," and these components are like the individual tasks in a recipe, such as chopping vegetables or simmering a sauce.

The Kubeflow Pipelines platform consists of:

- A user interface (UI) for managing and tracking experiments, jobs, and runs.

- An engine for scheduling multi-step ML workflows.

- An SDK for defining and manipulating pipelines and components.

- Notebooks for interacting with the system using the SDK.

Key Points:

- Workflow Description: A pipeline is a way to organize and define the various stages of a machine learning project, including data preparation, model training, and deployment.

- Components: Each part of the pipeline is called a "component," which is a self-contained unit that performs a specific task, like data preprocessing or model training.

- Graph Structure: The pipeline is represented as a graph, showing how components are connected and how data flows between them. This visual representation helps you understand the sequence of tasks.

- Inputs and Outputs: The pipeline also defines what data or information each component needs as input and what it produces as output. This ensures that the different parts of the workflow can work together seamlessly.

- Reusability: You can think of components as reusable building blocks. Once you create a component for a specific task, you can use it in different pipelines without having to recreate it every time.

- Sharing: After creating a pipeline, you can share it with others using the Kubeflow Pipelines user interface, just like sharing a recipe with friends.
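The graph idea can be made concrete with a plain-Python sketch; this is not the KFP SDK. Each hypothetical component declares the artifacts it consumes and produces. Wiring every consumed artifact back to its producer yields the pipeline graph. A topological sort (Kahn's algorithm) then gives an order in which the steps can run. The component and artifact names are illustrative only.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical components: name -> (inputs consumed, outputs produced)
COMPONENTS: Dict[str, tuple] = {
    "preprocess": ([], ["dataset"]),
    "train": (["dataset"], ["model"]),
    "evaluate": (["dataset", "model"], ["metrics"]),
    "deploy": (["model", "metrics"], []),
}


def build_edges(components) -> Dict[str, Set[str]]:
    """Add an edge producer -> consumer for every artifact a step consumes."""
    producer = {out: name for name, (_, outs) in components.items() for out in outs}
    edges: Dict[str, Set[str]] = {name: set() for name in components}
    for name, (ins, _) in components.items():
        for artifact in ins:
            if artifact not in producer:
                raise ValueError(f"{name!r} needs {artifact!r}, which no step produces")
            edges[producer[artifact]].add(name)
    return edges


def run_order(components) -> List[str]:
    """Kahn's algorithm: repeatedly run steps whose upstream steps have all finished."""
    edges = build_edges(components)
    indegree = {name: 0 for name in components}
    for targets in edges.values():
        for target in targets:
            indegree[target] += 1
    ready = deque(sorted(n for n, d in indegree.items() if d == 0))
    order: List[str] = []
    while ready:
        step = ready.popleft()
        order.append(step)
        for nxt in sorted(edges[step]):
            indegree[nxt] -= 1
            if indegree[nxt] == 0:
                ready.append(nxt)
    if len(order) != len(components):
        raise ValueError("pipeline graph has a cycle")
    return order


print(run_order(COMPONENTS))  # ['preprocess', 'train', 'evaluate', 'deploy']
```

Steps that become ready at the same time (no path between them) could run in parallel. The sketch just processes them in sorted order.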

Kubeflow Pipeline SDK

https://kubeflow-pipelines.readthedocs.io/en/sdk-2.0.1/

https://kubeflow-pipelines.readthedocs.io/en/1.8.20/

Kubeflow Vanilla Installation on EKS

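Create an EKS cluster with t3a.medium nodes. The eksctl config below requests 1 on-demand node (ng-dedicated-1) and 5 spot nodes (ng-spot-1). Save it to a file and create the cluster with `eksctl create cluster -f <file>`.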

```yaml
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

iam:
  withOIDC: true

metadata:
  name: basic-cluster
  region: ap-south-1
  version: "1.25"

managedNodeGroups:
  - name: ng-dedicated-1
    instanceType: t3a.medium
    desiredCapacity: 1
    ssh:
      allow: true # will use ~/.ssh/id_rsa.pub as the default ssh key
    iam:
      withAddonPolicies:
        autoScaler: true
        awsLoadBalancerController: true
        certManager: true
        externalDNS: true
        ebs: true
        efs: true
        cloudWatch: true
  - name: ng-spot-1
    instanceType: t3a.medium
    desiredCapacity: 5
    ssh:
      allow: true # will use ~/.ssh/id_rsa.pub as the default ssh key
    spot: true
    labels:
      role: spot
    propagateASGTags: true
    iam:
      withAddonPolicies:
        autoScaler: true
        awsLoadBalancerController: true
        certManager: true
        externalDNS: true
        ebs: true
        efs: true
        cloudWatch: true
  # - name: ng-dedicated-model
  #   instanceType: t3a.2xlarge
  #   desiredCapacity: 1
  #   ssh:
  #     allow: true # will use ~/.ssh/id_rsa.pub as the default ssh key
  #   iam:
  #     withAddonPolicies:
  #       autoScaler: true
  #       ebs: true
  #       efs: true
  #       awsLoadBalancerController: true
```

Run the installation from GitPod or another Ubuntu-based Linux machine, because the installation scripts only work on Ubuntu.
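A rough way to sanity-check the node count is to look at pod density rather than CPU. This assumes the default Amazon VPC CNI setup without prefix delegation. Under that setup, the pod limit per node is ENIs × (IPv4 addresses per ENI - 1) + 2. For t3a.medium (3 ENIs, 6 IPv4 addresses each) that gives 17. The sketch plugs in the pod counts from the post-install `k get all -A` listing later in this guide. That listing shows 72 pods, 15 of which are per-node DaemonSet pods. The result is only a lower bound, because it ignores CPU and memory requests, which can also keep pods from scheduling.

```python
import math


def eks_max_pods(enis: int, ipv4_per_eni: int) -> int:
    """Default Amazon VPC CNI pod limit per node (assumes no prefix delegation).

    Each ENI keeps one address for itself; the +2 covers the host-network
    aws-node and kube-proxy pods.
    """
    return enis * (ipv4_per_eni - 1) + 2


def min_nodes(other_pods: int, max_pods_per_node: int, daemonset_pods_per_node: int) -> int:
    """Lower bound on node count, counting pods only (CPU/memory requests ignored)."""
    free_slots = max_pods_per_node - daemonset_pods_per_node
    return math.ceil(other_pods / free_slots)


T3A_MEDIUM_MAX_PODS = eks_max_pods(enis=3, ipv4_per_eni=6)

# From the post-install `k get all -A` listing: 72 pods in total, of which 15 are
# DaemonSet pods (aws-node, ebs-csi-node and kube-proxy on each of 5 nodes).
DAEMONSET_PODS_PER_NODE = 3
OTHER_PODS = 72 - 15

print(T3A_MEDIUM_MAX_PODS)                                                  # 17
print(min_nodes(OTHER_PODS, T3A_MEDIUM_MAX_PODS, DAEMONSET_PODS_PER_NODE))  # 5
```

By this estimate, four t3a.medium nodes cannot hold the Kubeflow pods by count alone, which fits the choice of five or more nodes.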

Install Python3.8

```bash
sudo add-apt-repository ppa:deadsnakes/ppa -y
sudo apt-get install python3.8 python3.8-distutils
```

Install AWS CLI and AWS IAM Authenticator

```bash
curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"
unzip awscliv2.zip
sudo ./aws/install

curl -Lo aws-iam-authenticator https://github.com/kubernetes-sigs/aws-iam-authenticator/releases/download/v0.5.9/aws-iam-authenticator_0.5.9_linux_amd64
chmod +x ./aws-iam-authenticator
sudo cp ./aws-iam-authenticator /usr/local/bin
```

Configure AWS Credentials

```bash
aws configure
```

Update the kubeconfig using the AWS CLI

```bash
aws eks update-kubeconfig --name basic-cluster --region ap-south-1
```

Or copy the kubeconfig over to the instance

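```bash
# Print the kubeconfig on a machine that already has access, and paste it into ~/.kube/config on the instance
cat ~/.kube/config
# Restrict the file's permissions, then check its contents
chmod go-r ~/.kube/config
cat ~/.kube/config
```

Set CLUSTER_NAME and CLUSTER_REGION. The Kubeflow installation uses them later.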

```bash
export CLUSTER_NAME=basic-cluster
export CLUSTER_REGION=ap-south-1
```

Make sure OIDC is enabled for your cluster

```bash
eksctl utils associate-iam-oidc-provider --region ap-south-1 --cluster basic-cluster --approve
```

Install the EBS CSI Add-on

First create the IAM role for the EBS CSI driver's service account (IRSA):

```bash
eksctl create iamserviceaccount \
  --name ebs-csi-controller-sa \
  --namespace kube-system \
  --cluster basic-cluster \
  --role-name AmazonEKS_EBS_CSI_DriverRole \
  --role-only \
  --attach-policy-arn arn:aws:iam::aws:policy/service-role/AmazonEBSCSIDriverPolicy \
  --approve
```

Then install the add-on, replacing the account ID 006547668672 in the role ARN with your own AWS account ID:

```bash
eksctl create addon --name aws-ebs-csi-driver --cluster basic-cluster --service-account-role-arn arn:aws:iam::006547668672:role/AmazonEKS_EBS_CSI_DriverRole --force
```

Install Kubectl

https://kubernetes.io/docs/tasks/tools/install-kubectl-linux/

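```bash
curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"
sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl
```

kubectl is only supported within one minor version of the cluster's API server. For this 1.25 cluster, you may want to replace the `stable.txt` lookup with a pinned v1.24.x, v1.25.x or v1.26.x release.

Make sure kubectl is working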

```bash
kubectl version
```

Install Kubeflow

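Run the following from inside the kubeflow-manifests checkout; it is cloned in the "Deploy Kubeflow!" step, so clone it first. The cluster name must match the eksctl config (basic-cluster, with a hyphen).

```bash
export CLUSTER_NAME=basic-cluster
export CLUSTER_REGION=ap-south-1
make install-tools
```

Install HELM version 3.12.2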

This is mostly a temporary fix: SageMaker's Helm chart only works with this specific version of Helm.

```makefile
install-helm:
	wget https://get.helm.sh/helm-v3.12.2-linux-amd64.tar.gz
	tar -zxvf helm-v3.12.2-linux-amd64.tar.gz
	sudo mv linux-amd64/helm /usr/local/bin/helm
	helm version
```

Deploy Kubeflow!

```bash
export KUBEFLOW_RELEASE_VERSION=v1.7.0
export AWS_RELEASE_VERSION=v1.7.0-aws-b1.0.3
git clone https://github.com/awslabs/kubeflow-manifests.git && cd kubeflow-manifests
git checkout ${AWS_RELEASE_VERSION}
git clone --branch ${KUBEFLOW_RELEASE_VERSION} https://github.com/kubeflow/manifests.git upstream
```

We're installing Kubeflow 1.7, the latest stable release of Kubeflow at the time of writing.

https://www.kubeflow.org/docs/releases/kubeflow-1.7/

This is the most time-consuming step, so be patient!

ETA: ~15 minutes

```bash
make deploy-kubeflow INSTALLATION_OPTION=helm DEPLOYMENT_OPTION=vanilla
```

Check progress with `k get all -A` and `k get pvc -A` (`k` is an alias for `kubectl`):

```
❯ k get pvc -A
NAMESPACE NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
istio-system authservice-pvc Bound pvc-b3ae1488-e1f0-4eec-8f0d-7b69122cb302 10Gi RWO gp2 12m
```

After everything is installed, the output of `k get all -A` should look like this:

```
❯ k get all -A
NAMESPACE NAME READY STATUS RESTARTS AGE
ack-system pod/ack-sagemaker-controller-sagemaker-chart-8588ffbb6-w6bsx 0/1 CrashLoopBackOff 1 (5s ago) 20s
auth pod/dex-56d9748f89-nq6jg 1/1 Running 0 59m
cert-manager pod/cert-manager-5958fd9d8d-bjhb8 1/1 Running 0 60m
cert-manager pod/cert-manager-cainjector-7999df5dbc-ftsmn 1/1 Running 0 60m
cert-manager pod/cert-manager-webhook-7f8f79d49c-x2sxg 1/1 Running 0 60m
istio-system pod/authservice-0 1/1 Running 0 58m
istio-system pod/cluster-local-gateway-6955b67f54-5jsh7 1/1 Running 0 58m
istio-system pod/istio-ingressgateway-67f7b5f88d-wv4jg 1/1 Running 0 59m
istio-system pod/istiod-56f7cf9bd6-76vrw 1/1 Running 0 59m
knative-eventing pod/eventing-controller-c6f5fd6cd-4ssbd 1/1 Running 0 56m
knative-eventing pod/eventing-webhook-79cd6767-vcxwf 1/1 Running 0 56m
knative-serving pod/activator-67849589d6-kqdkh 2/2 Running 0 57m
knative-serving pod/autoscaler-6dbcdd95c7-cgcq4 2/2 Running 0 57m
knative-serving pod/controller-b9b8855b8-nzvc5 2/2 Running 0 57m
knative-serving pod/domain-mapping-75cc6d667f-f4h7m 2/2 Running 0 57m
knative-serving pod/domainmapping-webhook-6dfb78c944-pgn8d 2/2 Running 0 57m
knative-serving pod/net-istio-controller-5fcd96d76f-vb69s 2/2 Running 0 57m
knative-serving pod/net-istio-webhook-7ff9fdf999-tsw8j 2/2 Running 0 57m
knative-serving pod/webhook-69cc5b9849-rzj7v 2/2 Running 0 57m
kube-system pod/aws-node-6z6hw 1/1 Running 0 83m
kube-system pod/aws-node-bnbml 1/1 Running 0 42m
kube-system pod/aws-node-dhhbg 1/1 Running 0 83m
kube-system pod/aws-node-flbch 1/1 Running 0 82m
kube-system pod/aws-node-x56rv 1/1 Running 0 42m
kube-system pod/coredns-6db85c8f99-cjsmj 1/1 Running 0 89m
kube-system pod/coredns-6db85c8f99-pchw4 1/1 Running 0 89m
kube-system pod/ebs-csi-controller-5644545854-26klr 6/6 Running 0 73m
kube-system pod/ebs-csi-controller-5644545854-ph7fb 6/6 Running 0 73m
kube-system pod/ebs-csi-node-4fl42 3/3 Running 0 42m
kube-system pod/ebs-csi-node-57d88 3/3 Running 0 42m
kube-system pod/ebs-csi-node-flhdh 3/3 Running 0 73m
kube-system pod/ebs-csi-node-m9krs 3/3 Running 0 73m
kube-system pod/ebs-csi-node-mf8nr 3/3 Running 0 73m
kube-system pod/kube-proxy-4brft 1/1 Running 0 83m
kube-system pod/kube-proxy-hcnmq 1/1 Running 0 82m
kube-system pod/kube-proxy-m57wp 1/1 Running 0 42m
kube-system pod/kube-proxy-q4sj2 1/1 Running 0 83m
kube-system pod/kube-proxy-vp9g4 1/1 Running 0 42m
kube-system pod/metrics-server-5b4fc487-pv442 1/1 Running 0 33m
kubeflow-user-example-com pod/ml-pipeline-ui-artifact-6cb7b9f6fd-5r44w 2/2 Running 0 36m
kubeflow-user-example-com pod/ml-pipeline-visualizationserver-7b5889796d-6mxm6 2/2 Running 0 36m
kubeflow pod/admission-webhook-deployment-6db8bdbb45-w46rx 1/1 Running 0 51m
kubeflow pod/cache-server-76cb8f97f9-p8v2r 2/2 Running 0 53m
kubeflow pod/centraldashboard-655c7d894c-6jw4j 2/2 Running 0 54m
kubeflow pod/jupyter-web-app-deployment-76fbf48ff6-2snmx 2/2 Running 0 50m
kubeflow pod/katib-controller-8bb4fdf4f-4wxnh 1/1 Running 0 39m
kubeflow pod/katib-db-manager-f8dc7f465-2rc5t 1/1 Running 0 39m
kubeflow pod/katib-mysql-db6dc68c-wts27 1/1 Running 0 39m
kubeflow pod/katib-ui-7859bc4c67-7lrzr 2/2 Running 2 (39m ago) 39m
kubeflow pod/kserve-controller-manager-85b6b6c47d-rhjlz 2/2 Running 0 55m
kubeflow pod/kserve-models-web-app-8875bbdf8-w4wjk 2/2 Running 0 54m
kubeflow pod/kubeflow-pipelines-profile-controller-59ccbd47b9-cf2np 1/1 Running 0 53m
kubeflow pod/metacontroller-0 1/1 Running 0 53m
kubeflow pod/metadata-envoy-deployment-5b6c575b98-47hr9 1/1 Running 0 53m
kubeflow pod/metadata-grpc-deployment-784b8b5fb4-fzlck 2/2 Running 4 (51m ago) 53m
kubeflow pod/metadata-writer-5899c74595-bmcpn 2/2 Running 0 53m
kubeflow pod/minio-65dff76b66-r8vjw 2/2 Running 0 53m
kubeflow pod/ml-pipeline-cff8bdfff-fc9sg 2/2 Running 1 (51m ago) 53m
kubeflow pod/ml-pipeline-persistenceagent-798dbf666f-flbbr 2/2 Running 0 53m
kubeflow pod/ml-pipeline-scheduledworkflow-859ff9cf7b-nqdv7 2/2 Running 0 53m
kubeflow pod/ml-pipeline-ui-6d69549787-4td2t 2/2 Running 0 53m
kubeflow pod/ml-pipeline-viewer-crd-56f7cfd7d9-cg4nq 2/2 Running 1 (53m ago) 53m
kubeflow pod/ml-pipeline-visualizationserver-64447ffc76-g27ln 2/2 Running 0 53m
kubeflow pod/mysql-c999c6c8-6kh42 2/2 Running 0 53m
kubeflow pod/notebook-controller-deployment-5c9bc58599-fz445 2/2 Running 2 (42m ago) 50m
kubeflow pod/profiles-deployment-786df9d89d-nh6sp 3/3 Running 2 (37m ago) 37m
kubeflow pod/tensorboard-controller-deployment-6664b8866f-w24bf 3/3 Running 2 (38m ago) 38m
kubeflow pod/tensorboards-web-app-deployment-5cb4666798-rwbg8 2/2 Running 0 39m
kubeflow pod/training-operator-7589458f95-s86qb 1/1 Running 0 40m
kubeflow pod/volumes-web-app-deployment-59cf57d887-kbnh4 2/2 Running 0 41m
kubeflow pod/workflow-controller-6547f784cd-k9df9 2/2 Running 1 (53m ago) 53m
kubernetes-dashboard pod/kubernetes-dashboard-c6f5bfb9-59lcq 1/1 Running 0 32m

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
auth service/dex ClusterIP 10.100.158.141 <none> 5556/TCP 59m
cert-manager service/cert-manager ClusterIP 10.100.225.57 <none> 9402/TCP 60m
cert-manager service/cert-manager-webhook ClusterIP 10.100.227.213 <none> 443/TCP 60m
default service/kubernetes ClusterIP 10.100.0.1 <none> 443/TCP 90m
istio-system service/authservice ClusterIP 10.100.242.233 <none> 8080/TCP 58m
istio-system service/cluster-local-gateway ClusterIP 10.100.84.90 <none> 15020/TCP,80/TCP 58m
istio-system service/istio-ingressgateway ClusterIP 10.100.57.138 <none> 15021/TCP,80/TCP,443/TCP,31400/TCP,15443/TCP 59m
istio-system service/istiod ClusterIP 10.100.112.130 <none> 15010/TCP,15012/TCP,443/TCP,15014/TCP 59m
istio-system service/knative-local-gateway ClusterIP 10.100.164.185 <none> 80/TCP 57m
knative-eventing service/eventing-webhook ClusterIP 10.100.84.238 <none> 443/TCP 56m
knative-serving service/activator-service ClusterIP 10.100.25.166 <none> 9090/TCP,8008/TCP,80/TCP,81/TCP,443/TCP 57m
knative-serving service/autoscaler ClusterIP 10.100.133.230 <none> 9090/TCP,8008/TCP,8080/TCP 57m
knative-serving service/autoscaler-bucket-00-of-01 ClusterIP 10.100.7.38 <none> 8080/TCP 56m
knative-serving service/controller ClusterIP 10.100.41.151 <none> 9090/TCP,8008/TCP 57m
knative-serving service/domainmapping-webhook ClusterIP 10.100.206.178 <none> 9090/TCP,8008/TCP,443/TCP 57m
knative-serving service/net-istio-webhook ClusterIP 10.100.51.249 <none> 9090/TCP,8008/TCP,443/TCP 57m
knative-serving service/webhook ClusterIP 10.100.54.81 <none> 9090/TCP,8008/TCP,443/TCP 57m
kube-system service/kube-dns ClusterIP 10.100.0.10 <none> 53/UDP,53/TCP 89m
kube-system service/metrics-server ClusterIP 10.100.118.238 <none> 443/TCP 33m
kubeflow-user-example-com service/ml-pipeline-ui-artifact ClusterIP 10.100.84.177 <none> 80/TCP 36m
kubeflow-user-example-com service/ml-pipeline-visualizationserver ClusterIP 10.100.189.195 <none> 8888/TCP 36m
kubeflow service/admission-webhook-service ClusterIP 10.100.95.2 <none> 443/TCP 51m
kubeflow service/cache-server ClusterIP 10.100.53.90 <none> 443/TCP 53m
kubeflow service/centraldashboard ClusterIP 10.100.155.30 <none> 80/TCP 54m
kubeflow service/jupyter-web-app-service ClusterIP 10.100.253.106 <none> 80/TCP 50m
kubeflow service/katib-controller ClusterIP 10.100.185.119 <none> 443/TCP,8080/TCP,18080/TCP 39m
kubeflow service/katib-db-manager ClusterIP 10.100.192.202 <none> 6789/TCP 39m
kubeflow service/katib-mysql ClusterIP 10.100.241.103 <none> 3306/TCP 39m
kubeflow service/katib-ui ClusterIP 10.100.181.25 <none> 80/TCP 39m
kubeflow service/kserve-controller-manager-metrics-service ClusterIP 10.100.110.165 <none> 8443/TCP 55m
kubeflow service/kserve-controller-manager-service ClusterIP 10.100.133.218 <none> 8443/TCP 55m
kubeflow service/kserve-models-web-app ClusterIP 10.100.104.0 <none> 80/TCP 54m
kubeflow service/kserve-webhook-server-service ClusterIP 10.100.151.215 <none> 443/TCP 55m
kubeflow service/kubeflow-pipelines-profile-controller ClusterIP 10.100.225.35 <none> 80/TCP 53m
kubeflow service/metadata-envoy-service ClusterIP 10.100.28.138 <none> 9090/TCP 53m
kubeflow service/metadata-grpc-service ClusterIP 10.100.23.118 <none> 8080/TCP 53m
kubeflow service/minio-service ClusterIP 10.100.145.220 <none> 9000/TCP 53m
kubeflow service/ml-pipeline ClusterIP 10.100.231.86 <none> 8888/TCP,8887/TCP 53m
kubeflow service/ml-pipeline-ui ClusterIP 10.100.144.69 <none> 80/TCP 53m
kubeflow service/ml-pipeline-visualizationserver ClusterIP 10.100.97.49 <none> 8888/TCP 53m
kubeflow service/mysql ClusterIP 10.100.185.41 <none> 3306/TCP 53m
kubeflow service/notebook-controller-service ClusterIP 10.100.173.193 <none> 443/TCP 50m
kubeflow service/profiles-kfam ClusterIP 10.100.214.203 <none> 8081/TCP 37m
kubeflow service/tensorboard-controller-controller-manager-metrics-service ClusterIP 10.100.41.34 <none> 8443/TCP 38m
kubeflow service/tensorboards-web-app-service ClusterIP 10.100.213.188 <none> 80/TCP 39m
kubeflow service/training-operator ClusterIP 10.100.200.255 <none> 8080/TCP 40m
kubeflow service/volumes-web-app-service ClusterIP 10.100.11.254 <none> 80/TCP 41m
kubeflow service/workflow-controller-metrics ClusterIP 10.100.230.114 <none> 9090/TCP 53m
kubernetes-dashboard service/kubernetes-dashboard ClusterIP 10.100.191.248 <none> 443/TCP 32m

NAMESPACE NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE
kube-system daemonset.apps/aws-node 5 5 5 5 5 <none> 89m
kube-system daemonset.apps/ebs-csi-node 5 5 5 5 5 kubernetes.io/os=linux 73m
kube-system daemonset.apps/ebs-csi-node-windows 0 0 0 0 0 kubernetes.io/os=windows 73m
kube-system daemonset.apps/kube-proxy 5 5 5 5 5 <none> 89m

NAMESPACE NAME READY UP-TO-DATE AVAILABLE AGE
ack-system deployment.apps/ack-sagemaker-controller-sagemaker-chart 0/1 1 0 20s
auth deployment.apps/dex 1/1 1 1 59m
cert-manager deployment.apps/cert-manager 1/1 1 1 60m
cert-manager deployment.apps/cert-manager-cainjector 1/1 1 1 60m
cert-manager deployment.apps/cert-manager-webhook 1/1 1 1 60m
istio-system deployment.apps/cluster-local-gateway 1/1 1 1 58m
istio-system deployment.apps/istio-ingressgateway 1/1 1 1 59m
istio-system deployment.apps/istiod 1/1 1 1 59m
knative-eventing deployment.apps/eventing-controller 1/1 1 1 56m
knative-eventing deployment.apps/eventing-webhook 1/1 1 1 56m
knative-eventing deployment.apps/pingsource-mt-adapter 0/0 0 0 56m
knative-serving deployment.apps/activator 1/1 1 1 57m
knative-serving deployment.apps/autoscaler 1/1 1 1 57m
knative-serving deployment.apps/controller 1/1 1 1 57m
knative-serving deployment.apps/domain-mapping 1/1 1 1 57m
knative-serving deployment.apps/domainmapping-webhook 1/1 1 1 57m
knative-serving deployment.apps/net-istio-controller 1/1 1 1 57m
knative-serving deployment.apps/net-istio-webhook 1/1 1 1 57m
knative-serving deployment.apps/webhook 1/1 1 1 57m
kube-system deployment.apps/coredns 2/2 2 2 89m
kube-system deployment.apps/ebs-csi-controller 2/2 2 2 73m
kube-system deployment.apps/metrics-server 1/1 1 1 33m
kubeflow-user-example-com deployment.apps/ml-pipeline-ui-artifact 1/1 1 1 36m
kubeflow-user-example-com deployment.apps/ml-pipeline-visualizationserver 1/1 1 1 36m
kubeflow deployment.apps/admission-webhook-deployment 1/1 1 1 51m
kubeflow deployment.apps/cache-server 1/1 1 1 53m
kubeflow deployment.apps/centraldashboard 1/1 1 1 54m
kubeflow deployment.apps/jupyter-web-app-deployment 1/1 1 1 50m
kubeflow deployment.apps/katib-controller 1/1 1 1 39m
kubeflow deployment.apps/katib-db-manager 1/1 1 1 39m
kubeflow deployment.apps/katib-mysql 1/1 1 1 39m
kubeflow deployment.apps/katib-ui 1/1 1 1 39m
kubeflow deployment.apps/kserve-controller-manager 1/1 1 1 55m
kubeflow deployment.apps/kserve-models-web-app 1/1 1 1 54m
kubeflow deployment.apps/kubeflow-pipelines-profile-controller 1/1 1 1 53m
kubeflow deployment.apps/metadata-envoy-deployment 1/1 1 1 53m
kubeflow deployment.apps/metadata-grpc-deployment 1/1 1 1 53m
kubeflow deployment.apps/metadata-writer 1/1 1 1 53m
kubeflow deployment.apps/minio 1/1 1 1 53m
kubeflow deployment.apps/ml-pipeline 1/1 1 1 53m
kubeflow deployment.apps/ml-pipeline-persistenceagent 1/1 1 1 53m
kubeflow deployment.apps/ml-pipeline-scheduledworkflow 1/1 1 1 53m
kubeflow deployment.apps/ml-pipeline-ui 1/1 1 1 53m
kubeflow deployment.apps/ml-pipeline-viewer-crd 1/1 1 1 53m
kubeflow deployment.apps/ml-pipeline-visualizationserver 1/1 1 1 53m
kubeflow deployment.apps/mysql 1/1 1 1 53m
kubeflow deployment.apps/notebook-controller-deployment 1/1 1 1 50m
kubeflow deployment.apps/profiles-deployment 1/1 1 1 37m
kubeflow deployment.apps/tensorboard-controller-deployment 1/1 1 1 38m
kubeflow deployment.apps/tensorboards-web-app-deployment 1/1 1 1 39m
kubeflow deployment.apps/training-operator 1/1 1 1 40m
kubeflow deployment.apps/volumes-web-app-deployment 1/1 1 1 41m
kubeflow deployment.apps/workflow-controller 1/1 1 1 53m
kubernetes-dashboard deployment.apps/kubernetes-dashboard 1/1 1 1 32m

NAMESPACE NAME DESIRED CURRENT READY AGE
ack-system replicaset.apps/ack-sagemaker-controller-sagemaker-chart-8588ffbb6 1 1 0 20s
auth replicaset.apps/dex-56d9748f89 1 1 1 59m
cert-manager replicaset.apps/cert-manager-5958fd9d8d 1 1 1 60m
cert-manager replicaset.apps/cert-manager-cainjector-7999df5dbc 1 1 1 60m
cert-manager replicaset.apps/cert-manager-webhook-7f8f79d49c 1 1 1 60m
istio-system replicaset.apps/cluster-local-gateway-6955b67f54 1 1 1 58m
istio-system replicaset.apps/istio-ingressgateway-67f7b5f88d 1 1 1 59m
istio-system replicaset.apps/istiod-56f7cf9bd6 1 1 1 59m
knative-eventing replicaset.apps/eventing-controller-c6f5fd6cd 1 1 1 56m
knative-eventing replicaset.apps/eventing-webhook-79cd6767 1 1 1 56m
knative-eventing replicaset.apps/pingsource-mt-adapter-856fb9576b 0 0 0 56m
knative-serving replicaset.apps/activator-67849589d6 1 1 1 57m
knative-serving replicaset.apps/autoscaler-6dbcdd95c7 1 1 1 57m
knative-serving replicaset.apps/controller-b9b8855b8 1 1 1 57m
knative-serving replicaset.apps/domain-mapping-75cc6d667f 1 1 1 57m
knative-serving replicaset.apps/domainmapping-webhook-6dfb78c944 1 1 1 57m
knative-serving replicaset.apps/net-istio-controller-5fcd96d76f 1 1 1 57m
knative-serving replicaset.apps/net-istio-webhook-7ff9fdf999 1 1 1 57m
knative-serving replicaset.apps/webhook-69cc5b9849 1 1 1 57m
kube-system replicaset.apps/coredns-6db85c8f99 2 2 2 89m
kube-system replicaset.apps/ebs-csi-controller-5644545854 2 2 2 73m
kube-system replicaset.apps/metrics-server-5b4fc487 1 1 1 33m
kubeflow-user-example-com replicaset.apps/ml-pipeline-ui-artifact-6cb7b9f6fd 1 1 1 36m
kubeflow-user-example-com replicaset.apps/ml-pipeline-visualizationserver-7b5889796d 1 1 1 36m
kubeflow replicaset.apps/admission-webhook-deployment-6db8bdbb45 1 1 1 51m
kubeflow replicaset.apps/cache-server-76cb8f97f9 1 1 1 53m
kubeflow replicaset.apps/centraldashboard-655c7d894c 1 1 1 54m
kubeflow replicaset.apps/jupyter-web-app-deployment-76fbf48ff6 1 1 1 50m
kubeflow replicaset.apps/katib-controller-8bb4fdf4f 1 1 1 39m
kubeflow replicaset.apps/katib-db-manager-f8dc7f465 1 1 1 39m
kubeflow replicaset.apps/katib-mysql-db6dc68c 1 1 1 39m
kubeflow replicaset.apps/katib-ui-7859bc4c67 1 1 1 39m
kubeflow replicaset.apps/kserve-controller-manager-85b6b6c47d 1 1 1 55m
kubeflow replicaset.apps/kserve-models-web-app-8875bbdf8 1 1 1 54m
kubeflow replicaset.apps/kubeflow-pipelines-profile-controller-59ccbd47b9 1 1 1 53m
kubeflow replicaset.apps/metadata-envoy-deployment-5b6c575b98 1 1 1 53m
kubeflow replicaset.apps/metadata-grpc-deployment-784b8b5fb4 1 1 1 53m
kubeflow replicaset.apps/metadata-writer-5899c74595 1 1 1 53m
kubeflow replicaset.apps/minio-65dff76b66 1 1 1 53m
kubeflow replicaset.apps/ml-pipeline-cff8bdfff 1 1 1 53m
kubeflow replicaset.apps/ml-pipeline-persistenceagent-798dbf666f 1 1 1 53m
kubeflow replicaset.apps/ml-pipeline-scheduledworkflow-859ff9cf7b 1 1 1 53m
kubeflow replicaset.apps/ml-pipeline-ui-6d69549787 1 1 1 53m
kubeflow replicaset.apps/ml-pipeline-viewer-crd-56f7cfd7d9 1 1 1 53m
kubeflow replicaset.apps/ml-pipeline-visualizationserver-64447ffc76 1 1 1 53m
kubeflow replicaset.apps/mysql-c999c6c8 1 1 1 53m
kubeflow replicaset.apps/notebook-controller-deployment-5c9bc58599 1 1 1 50m
kubeflow replicaset.apps/profiles-deployment-786df9d89d 1 1 1 37m
kubeflow replicaset.apps/tensorboard-controller-deployment-6664b8866f 1 1 1 38m
kubeflow replicaset.apps/tensorboards-web-app-deployment-5cb4666798 1 1 1 39m
kubeflow replicaset.apps/training-operator-7589458f95 1 1 1 40m
kubeflow replicaset.apps/volumes-web-app-deployment-59cf57d887 1 1 1 41m
kubeflow replicaset.apps/workflow-controller-6547f784cd 1 1 1 53m
kubernetes-dashboard replicaset.apps/kubernetes-dashboard-c6f5bfb9 1 1 1 32m

NAMESPACE NAME READY AGE
istio-system statefulset.apps/authservice 1/1 58m
kubeflow statefulset.apps/metacontroller 1/1 53m

NAMESPACE NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE
knative-eventing horizontalpodautoscaler.autoscaling/eventing-webhook Deployment/eventing-webhook 10%/100% 1 5 1 56m
knative-serving horizontalpodautoscaler.autoscaling/activator Deployment/activator 1%/100% 1 20 1 57m
knative-serving horizontalpodautoscaler.autoscaling/webhook Deployment/webhook 10%/100% 1 5 1 57m

NAMESPACE NAME SCHEDULE SUSPEND ACTIVE LAST SCHEDULE AGE
kubeflow cronjob.batch/aws-kubeflow-telemetry 0 0 * * * False 0 <none> 12s
```

We don't need the GitPod / Ubuntu Docker container anymore!
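Scanning this much output by eye is error-prone. The sketch below is a hypothetical, standard-library-only helper. It reads the text of `kubectl get pods -A` and reports pods that are neither finished nor fully ready. Run against this cluster, it would flag the `ack-sagemaker-controller` pod that is in CrashLoopBackOff in the listing above. It assumes the `kubectl get pods -A` column order and should not be fed the mixed tables of `kubectl get all`.

```python
from typing import List, Tuple

# STATUS values of finished pods, which normally show 0/N ready containers.
FINISHED = {"Completed", "Succeeded"}


def unhealthy_pods(kubectl_output: str) -> List[Tuple[str, str, str, str]]:
    """Return (namespace, pod, ready, status) for pods that are not fully up.

    Expects the columns of `kubectl get pods -A`: NAMESPACE NAME READY STATUS ...
    A header line, if present, is skipped.
    """
    problems = []
    for line in kubectl_output.splitlines():
        fields = line.split()
        if len(fields) < 4 or fields[0] == "NAMESPACE":
            continue
        namespace, pod, ready, status = fields[:4]
        if status in FINISHED:
            continue
        ready_now, _, wanted = ready.partition("/")
        # Anything not Running, or Running with some containers not ready, needs a look.
        if status != "Running" or ready_now != wanted:
            problems.append((namespace, pod, ready, status))
    return problems


SAMPLE = """\
NAMESPACE NAME READY STATUS RESTARTS AGE
ack-system ack-sagemaker-controller-sagemaker-chart-8588ffbb6-w6bsx 0/1 CrashLoopBackOff 1 (5s ago) 20s
auth dex-56d9748f89-nq6jg 1/1 Running 0 59m
kubeflow katib-ui-7859bc4c67-7lrzr 2/2 Running 2 (39m ago) 39m
"""

for ns, pod, ready, status in unhealthy_pods(SAMPLE):
    print(f"{ns}/{pod}: {status} ({ready} ready)")
# ack-system/ack-sagemaker-controller-sagemaker-chart-8588ffbb6-w6bsx: CrashLoopBackOff (0/1 ready)
```

To use it on a live cluster, pass it the captured stdout of `kubectl get pods -A --no-headers`, for example via `subprocess.run(..., capture_output=True, text=True).stdout`.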

Access Kubeflow UI

```bash
make port-forward
```

or

```bash
kubectl port-forward svc/istio-ingressgateway -n istio-system 8080:80
```

Then open http://kubeflow.localhost:8080 (or http://localhost:8080) and log in with the default user:

- Email: user@example.com

- Password: 12341234

Kubeflow Dashboard

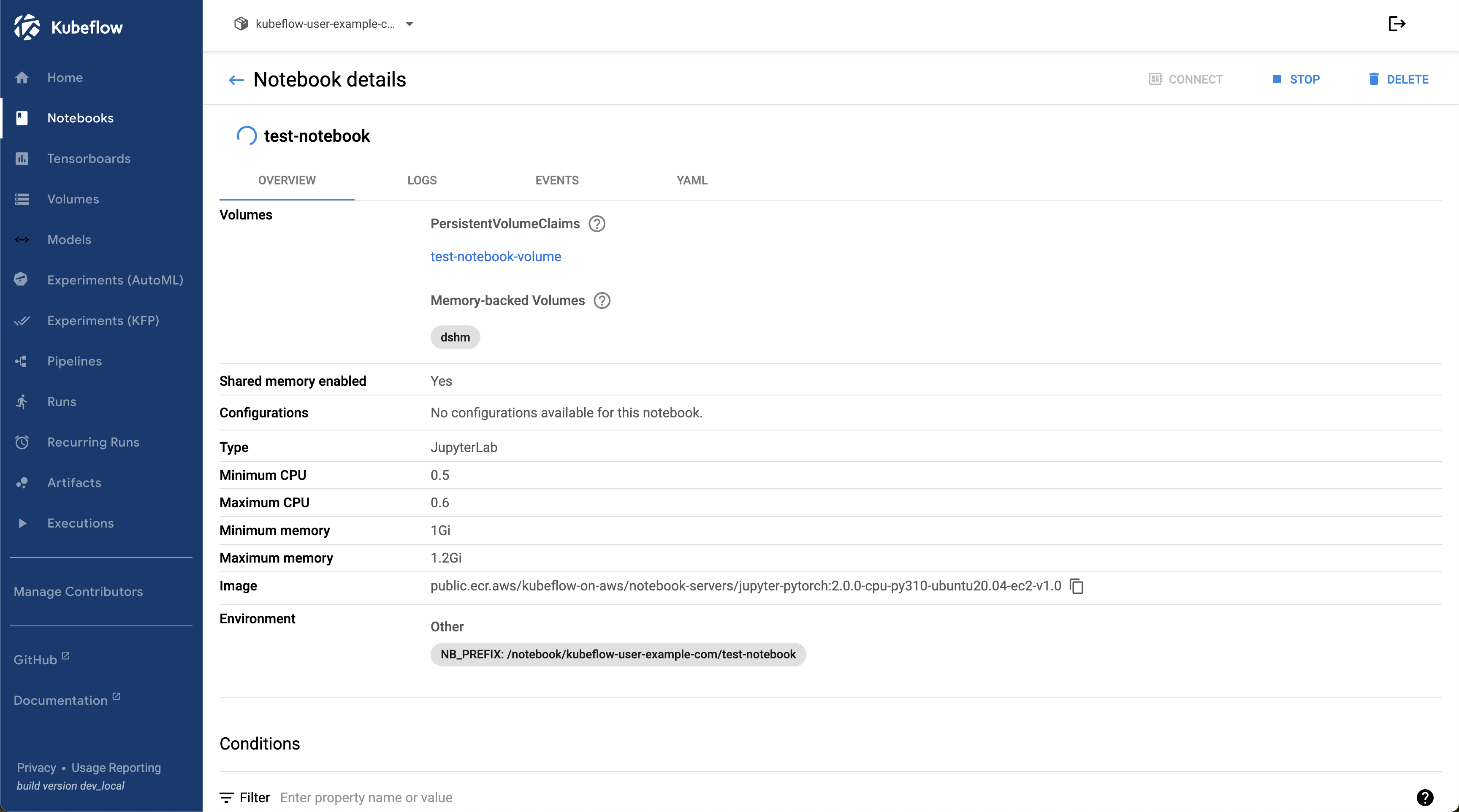

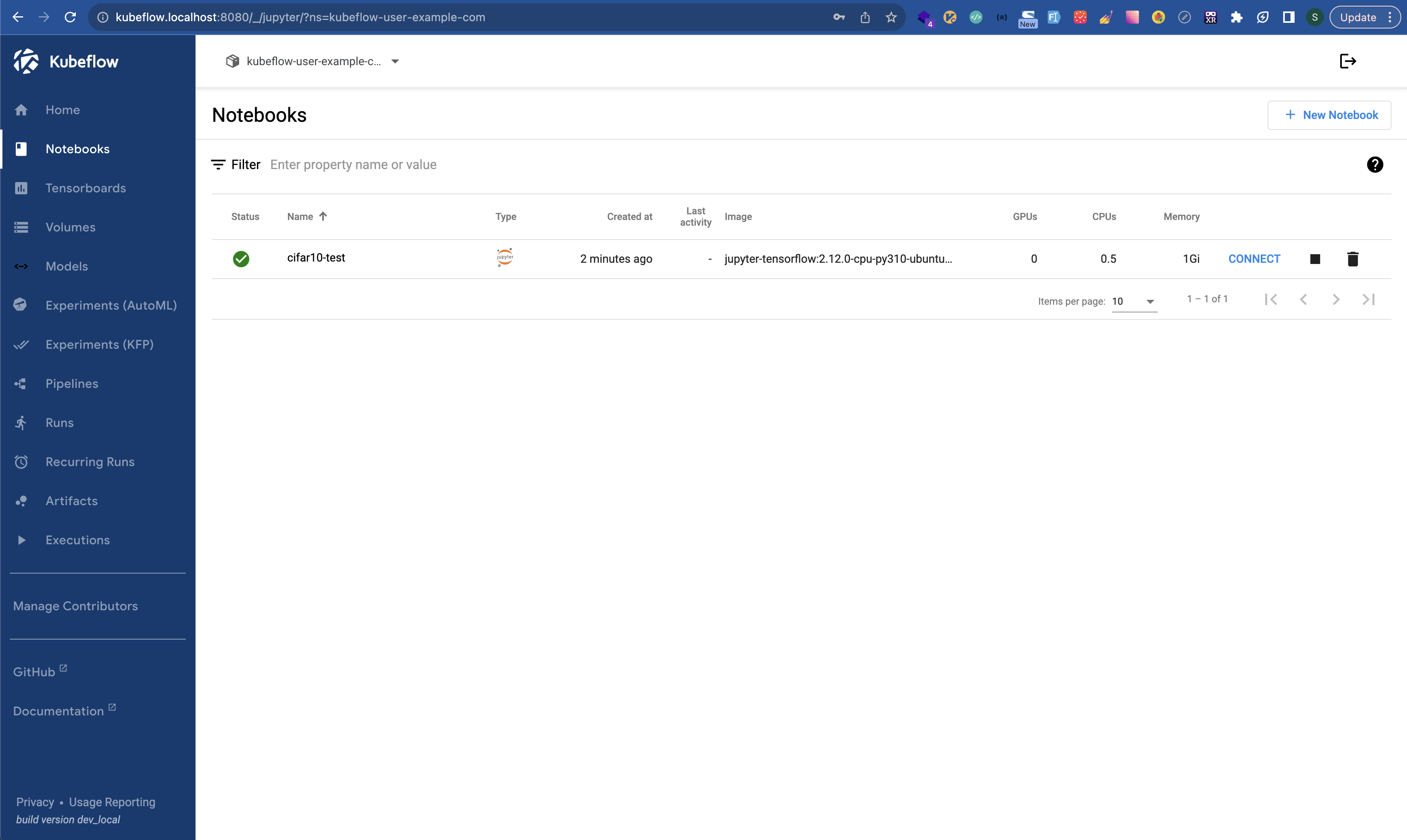

Notebooks

❯ k get all -n kubeflow-user-example-com

NAME READY STATUS RESTARTS AGE

pod/ml-pipeline-ui-artifact-6cb7b9f6fd-5r44w 2/2 Running 0 56m

pod/ml-pipeline-visualizationserver-7b5889796d-6mxm6 2/2 Running 0 56m

pod/test-notebook-0 2/2 Running 0 11m

pod/train-until-good-pipeline-vjvtn-1093820330 0/2 Completed 0 10m

pod/train-until-good-pipeline-vjvtn-1559436543 0/2 Completed 0 11m

pod/train-until-good-pipeline-vjvtn-1585005674 0/2 Completed 0 4m35s

pod/train-until-good-pipeline-vjvtn-1867516346 0/2 Completed 0 8m34s

pod/train-until-good-pipeline-vjvtn-218128270 0/2 Completed 0 9m12s

pod/train-until-good-pipeline-vjvtn-2369866318 0/2 Completed 0 9m49s

pod/train-until-good-pipeline-vjvtn-2480910040 0/2 Completed 0 6m14s

pod/train-until-good-pipeline-vjvtn-2677974974 0/2 Completed 0 5m13s

pod/train-until-good-pipeline-vjvtn-2765339483 0/2 Completed 0 11m

pod/train-until-good-pipeline-vjvtn-3173442780 0/2 Completed 0 8m12s

pod/train-until-good-pipeline-vjvtn-330288830 0/2 Completed 0 5m52s

pod/train-until-good-pipeline-vjvtn-3536688595 0/2 Completed 0 12m

pod/train-until-good-pipeline-vjvtn-878983984 0/2 Completed 0 7m33s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/ml-pipeline-ui-artifact ClusterIP 10.100.84.177 <none> 80/TCP 56m

service/ml-pipeline-visualizationserver ClusterIP 10.100.189.195 <none> 8888/TCP 56m

service/test-notebook ClusterIP 10.100.147.158 <none> 80/TCP 11m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/ml-pipeline-ui-artifact 1/1 1 1 56m

deployment.apps/ml-pipeline-visualizationserver 1/1 1 1 56m

NAME DESIRED CURRENT READY AGE

replicaset.apps/ml-pipeline-ui-artifact-6cb7b9f6fd 1 1 1 56m

replicaset.apps/ml-pipeline-visualizationserver-7b5889796d 1 1 1 56m

NAME READY AGE

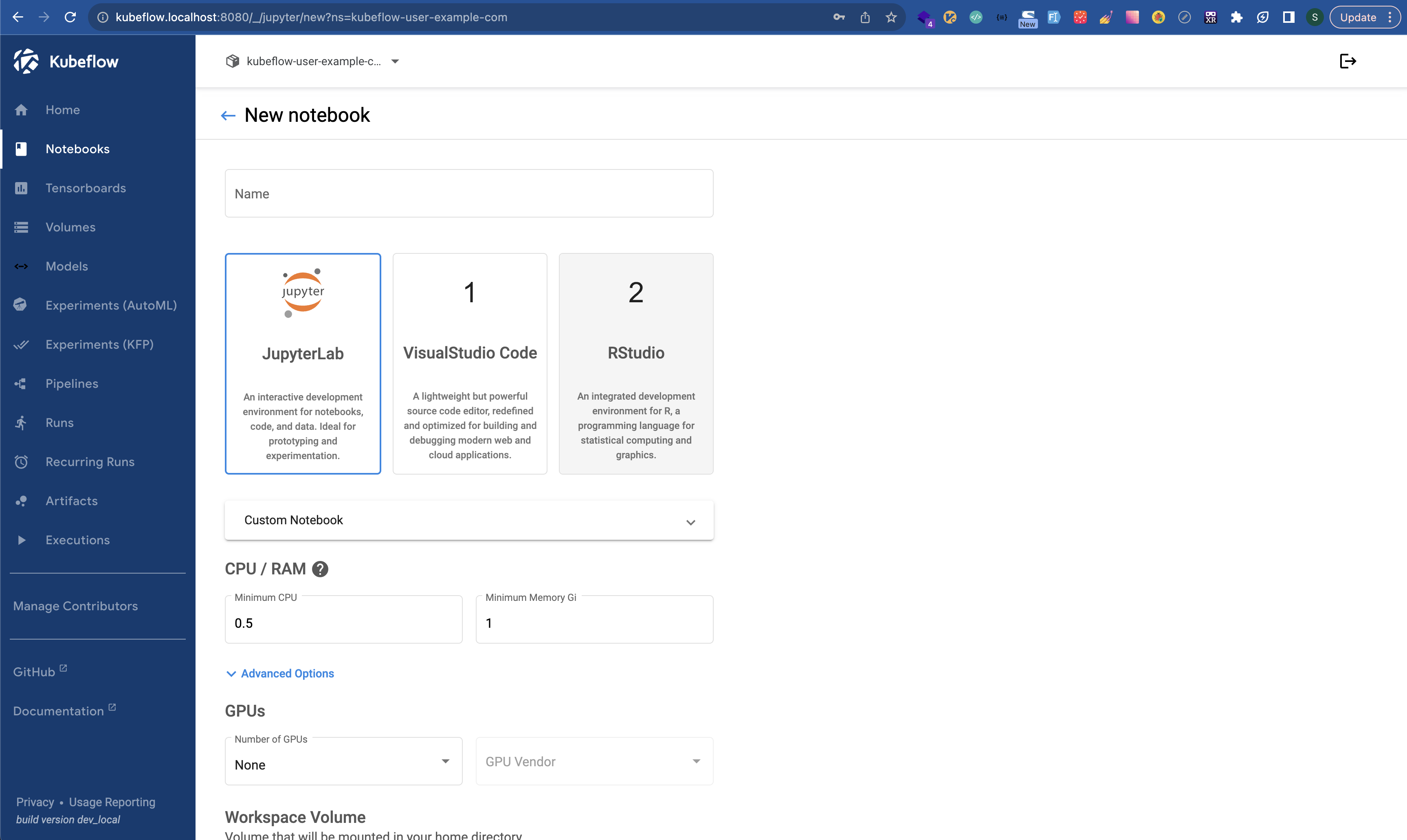

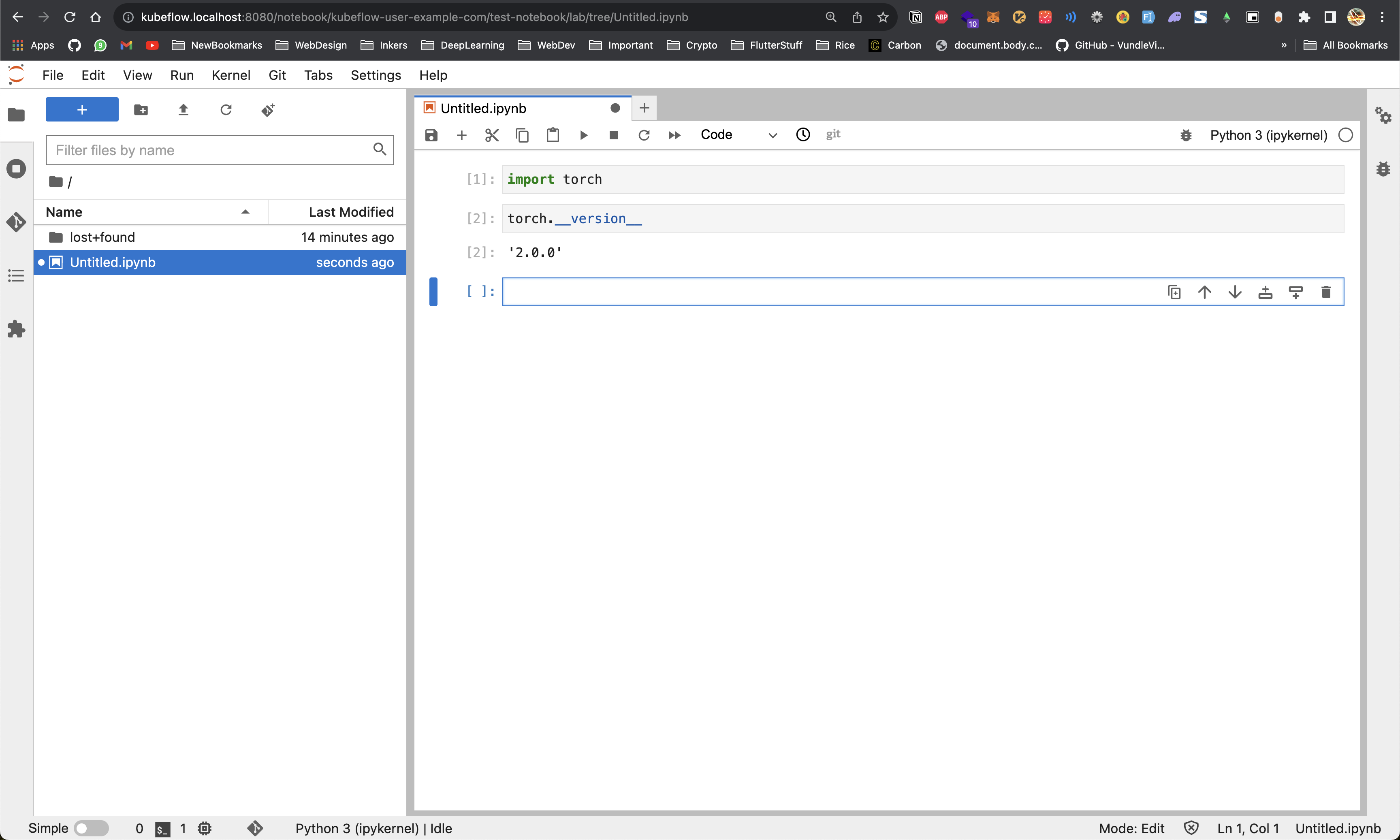

statefulset.apps/test-notebook 1/1 11mCreate a New Notebook

Set the image to public.ecr.aws/kubeflow-on-aws/notebook-servers/jupyter-pytorch:2.0.0-cpu-py310-ubuntu20.04-ec2-v1.0

You can see the resources being provisioned for the notebook:

```
❯ k get all -n kubeflow-user-example-com
NAME READY STATUS RESTARTS AGE
pod/cifar10-test-0 0/2 PodInitializing 0 54s
pod/ml-pipeline-ui-artifact-6cb7b9f6fd-ls6nr 2/2 Running 0 4m26s
pod/ml-pipeline-visualizationserver-7b5889796d-tthgs 2/2 Running 0 4m26s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
service/cifar10-test ClusterIP 10.100.115.179 <none> 80/TCP 57s
service/ml-pipeline-ui-artifact ClusterIP 10.100.193.139 <none> 80/TCP 4m29s
service/ml-pipeline-visualizationserver ClusterIP 10.100.89.182 <none> 8888/TCP 4m29s

NAME READY UP-TO-DATE AVAILABLE AGE
deployment.apps/ml-pipeline-ui-artifact 1/1 1 1 4m29s
deployment.apps/ml-pipeline-visualizationserver 1/1 1 1 4m29s

NAME DESIRED CURRENT READY AGE
replicaset.apps/ml-pipeline-ui-artifact-6cb7b9f6fd 1 1 1 4m29s
replicaset.apps/ml-pipeline-visualizationserver-7b5889796d 1 1 1 4m29s

NAME READY AGE
statefulset.apps/cifar10-test 0/1 57s
```

A PVC is also created for the notebook:

```
❯ k get pvc -n kubeflow-user-example-com
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
cifar10-test-volume Bound pvc-85510e89-fac2-49ac-8cc8-c60ad3c6596f 10Gi RWO gp2 107s
```

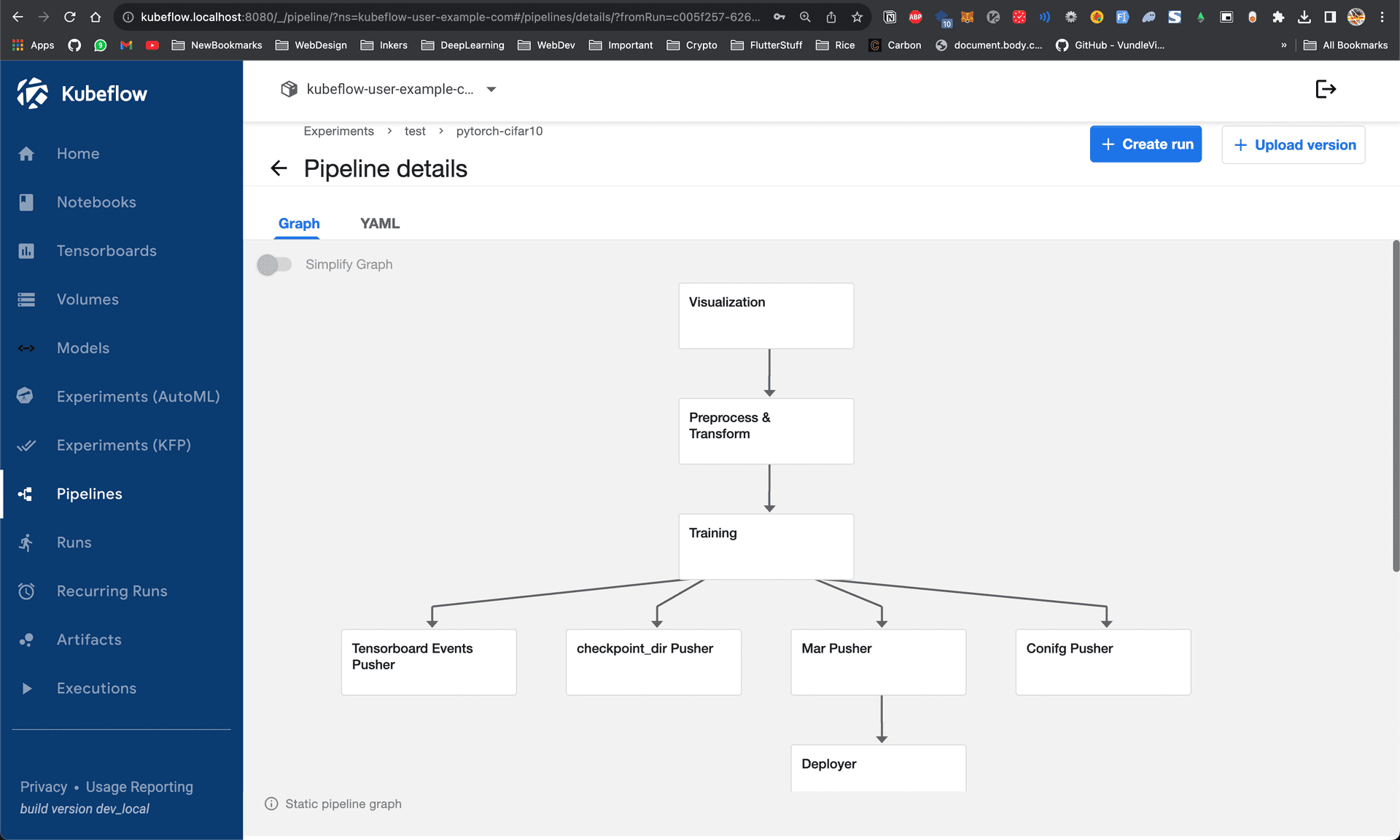

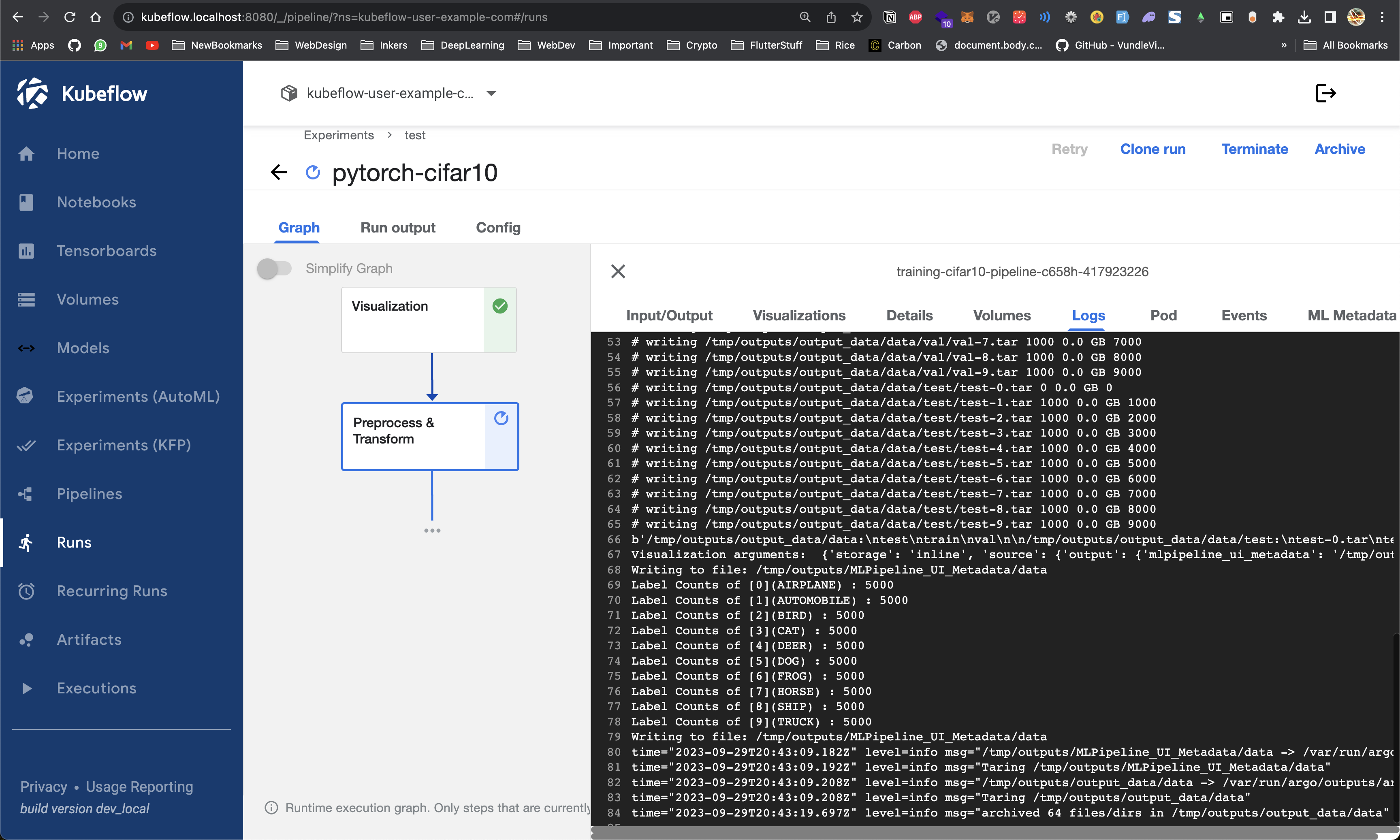

PyTorch CIFAR10 Example Pipeline

Retrieve the MinIO access key and secret key used by Kubeflow Pipelines:

```bash
kubectl get secret mlpipeline-minio-artifact -n kubeflow -o jsonpath="{.data.accesskey}" | base64 -d
kubectl get secret mlpipeline-minio-artifact -n kubeflow -o jsonpath="{.data.secretkey}" | base64 -d
```

minio-secret.yaml

apiVersion: v1

kind: Secret

metadata:

name: mysecret

annotations:

serving.kserve.io/s3-endpoint: minio-service.kubeflow:9000 # replace with your s3 endpoint

serving.kserve.io/s3-usehttps: "0" # by default 1, for testing with minio you need to set to 0

serving.kserve.io/s3-region: "minio" # replace with the region the bucket is created in

serving.kserve.io/s3-useanoncredential: "false" # omitting this is the same as false, if true will ignore credential provided and use anonymous credentials

type: Opaque

data:

AWS_ACCESS_KEY_ID: bWluaW8= # replace with your base64 encoded minio credential

AWS_SECRET_ACCESS_KEY: bWluaW8xMjM= # replace with your base64 encoded minio credential

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: sa

secrets:

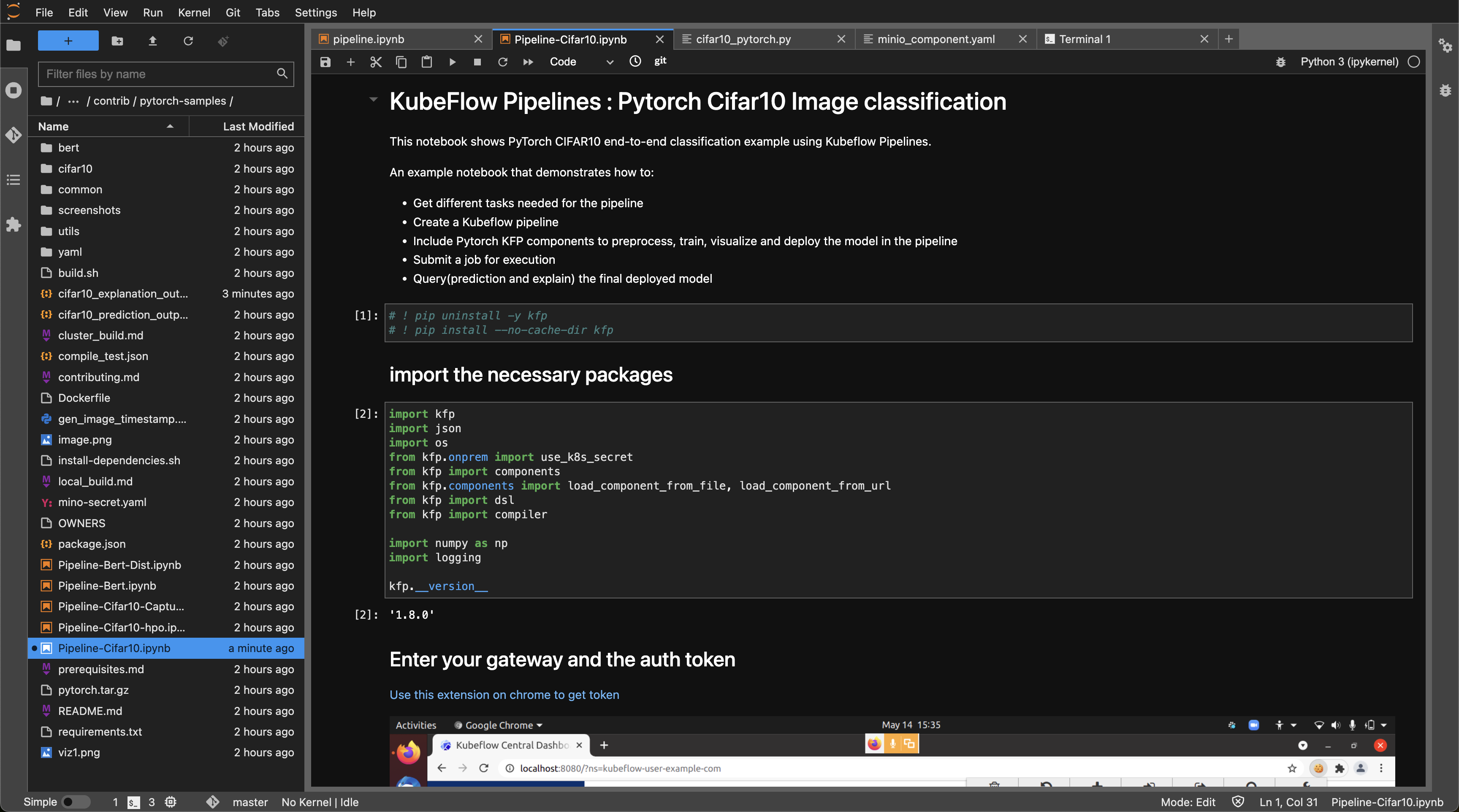

- name: mysecretk apply -f minio-secret.yaml -n kubeflow-user-example-com! git clone https://github.com/kubeflow/pipelinesOpen pipelines/samples/contrib/pytorch-samples/Pipeline-Cifar10.ipynb
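The values under `data` in minio-secret.yaml must be base64-encoded, which is also why the `kubectl get secret` commands pipe through `base64 -d`. A small standard-library sketch of both directions (Kubernetes also accepts plain strings under a `stringData` field if you prefer not to encode by hand):

```python
import base64


def encode_secret_value(value: str) -> str:
    """Encode a plain-text credential for a Kubernetes Secret's `data` field."""
    return base64.b64encode(value.encode("utf-8")).decode("ascii")


def decode_secret_value(encoded: str) -> str:
    """Decode a `data` value back to plain text (same as piping through `base64 -d`)."""
    return base64.b64decode(encoded).decode("utf-8")


if __name__ == "__main__":
    # The default MinIO credentials used throughout this walkthrough.
    for plain in ("minio", "minio123"):
        print(f"{plain} -> {encode_secret_value(plain)}")
```

Running it prints `bWluaW8=` and `bWluaW8xMjM=`, the same values used in the YAML above.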

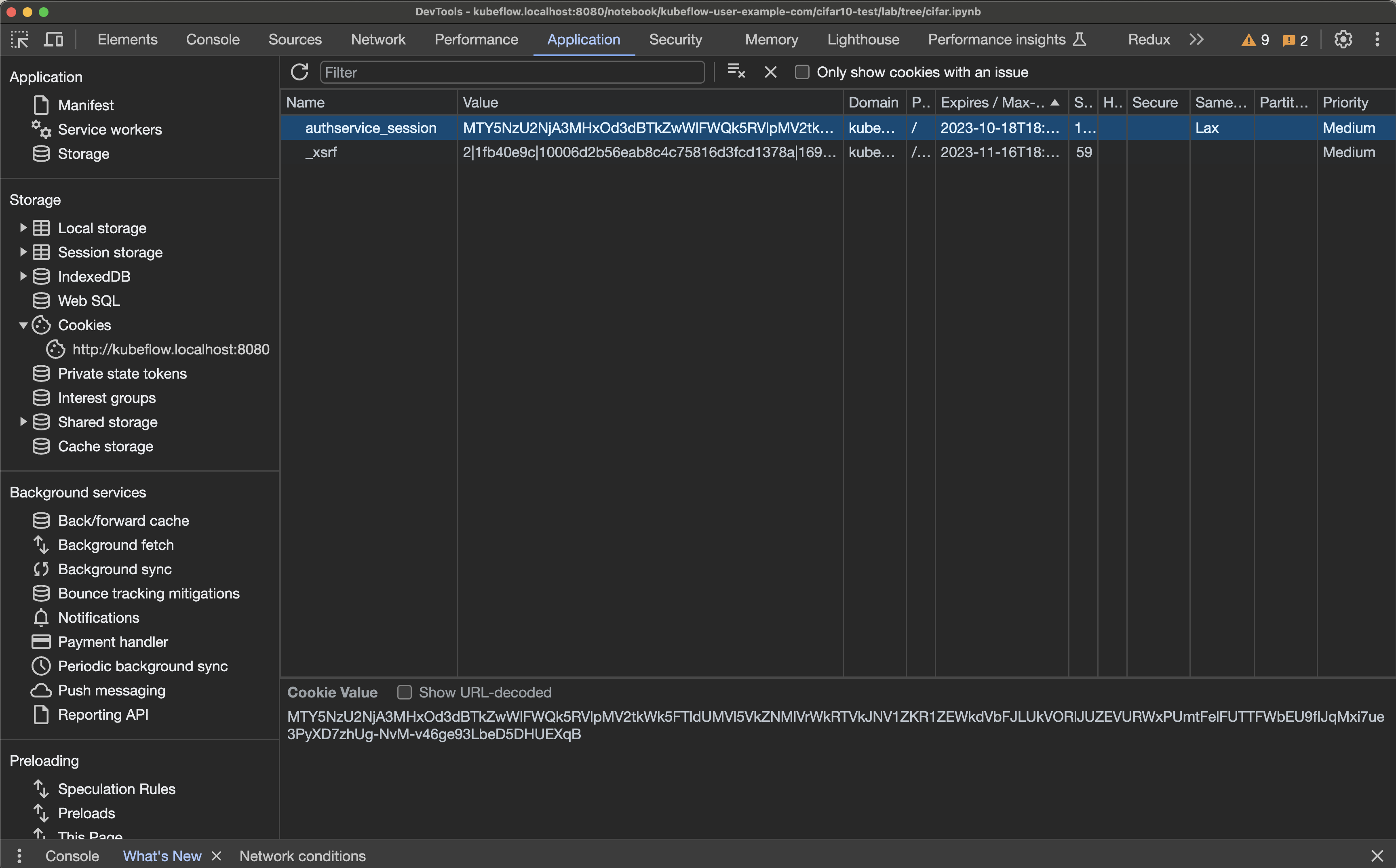

Add the AUTH value from your browser cookies (the value of the authservice_session cookie):

INGRESS_GATEWAY='http://istio-ingressgateway.istio-system.svc.cluster.local'
AUTH="MTY5NjAxOTAxMXxOd3dBTkZaSFEwSTBSbFJHTTBOTVYxazFUVXBXVlZsWVVESkdWRFZFVVZKYVZVNWFRMHBZUVVaTVRraGFWMEkxUlVrek4weFhSMEU9fB2VxDBTpBnVfpHM6HWrqCSbeM-kxjVs7B_ODAnRCd42"
NAMESPACE="kubeflow-user-example-com"
COOKIE="authservice_session="+AUTH
EXPERIMENT="Default"

Make sure that you don’t uninstall the existing kfp that comes preinstalled!

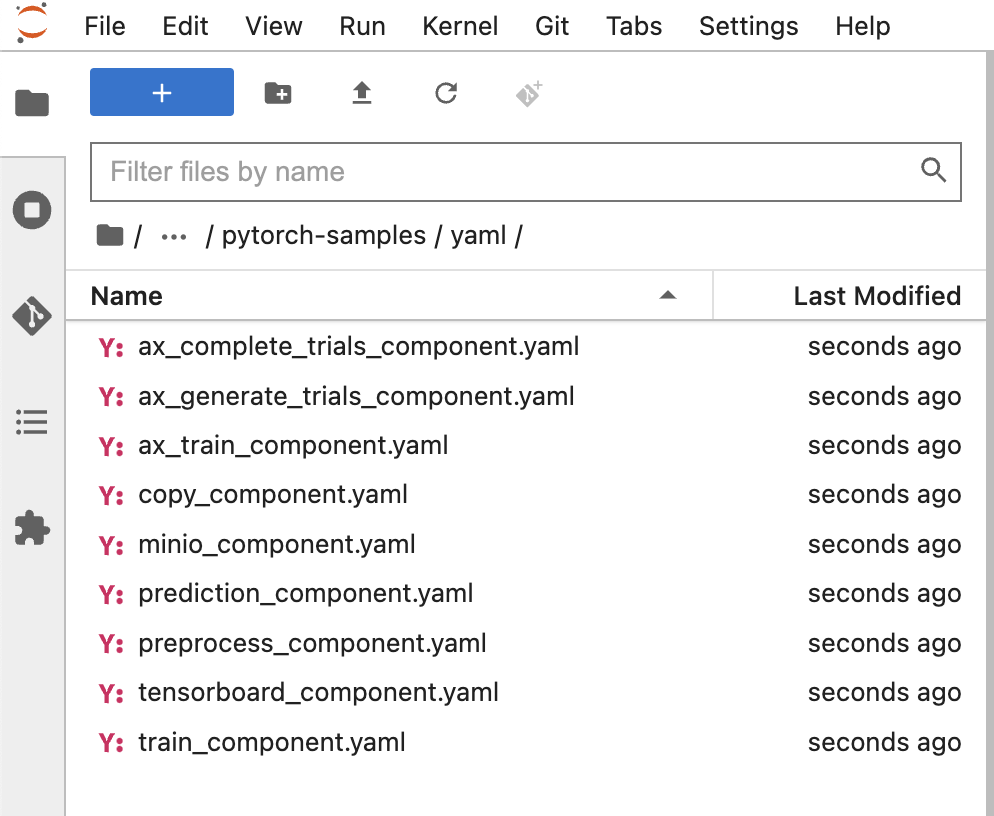

kfp=='1.8.20'

! python utils/generate_templates.py cifar10/template_mapping.json

This generates all the YAML component files needed to define the pipeline.

We are not using all of the YAML files.

Go through the notebook.

preprocess_component.yaml

description: 'Prepare data for PyTorch training.

  '
implementation:
  container:
    args:
    - --output_path
    - outputPath: output_data
    - --mlpipeline_ui_metadata
    - outputPath: MLPipeline UI Metadata
    command:
    - python3
    - cifar10/cifar10_pre_process.py
    image: public.ecr.aws/pytorch-samples/kfp_samples:latest
name: PreProcessData
outputs:
- description: The path to the input datasets
  name: output_data
- description: Path to generate MLPipeline UI Metadata
  name: MLPipeline UI Metadata

train_component.yaml

description: 'Pytorch training

  '
implementation:
  container:
    args:
    - --dataset_path
    - inputPath: input_data
    - --script_args
    - inputValue: script_args
    - --ptl_args
    - inputValue: ptl_arguments
    - --tensorboard_root
    - outputPath: tensorboard_root
    - --checkpoint_dir
    - outputPath: checkpoint_dir
    - --mlpipeline_ui_metadata
    - outputPath: MLPipeline UI Metadata
    - --mlpipeline_metrics
    - outputPath: MLPipeline Metrics
    command:
    - python3
    - cifar10/cifar10_pytorch.py
    image: public.ecr.aws/pytorch-samples/kfp_samples:latest
inputs:
- description: Input dataset path
  name: input_data
- description: Arguments to the model script
  name: script_args
- description: Arguments to pytorch lightning Trainer
  name: ptl_arguments
name: Training
outputs:
- description: Tensorboard output path
  name: tensorboard_root
- description: Model checkpoint output
  name: checkpoint_dir
- description: MLPipeline UI Metadata output
  name: MLPipeline UI Metadata
- description: MLPipeline Metrics output
  name: MLPipeline Metrics

Notice how all the pipeline components use the same image, public.ecr.aws/pytorch-samples/kfp_samples:latest.
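Each entry in a component's `args` list is either a literal flag or a placeholder (`inputValue`, `inputPath`, `outputPath`) that KFP v1 replaces at run time. The resolver below is a simplified, hypothetical sketch of that substitution, not the KFP implementation: the real compiler also supports placeholders such as `concat` and `if`, and it chooses the actual file paths itself (the paths in the example are made up).

```python
from typing import Dict, List, Union

# A component arg is either a literal string or a one-key placeholder dict.
Arg = Union[str, Dict[str, str]]


def resolve_args(args: List[Arg], values: Dict[str, str], paths: Dict[str, str]) -> List[str]:
    """Replace KFP-style placeholders with concrete strings (simplified sketch)."""
    argv: List[str] = []
    for arg in args:
        if isinstance(arg, str):
            argv.append(arg)  # literal flag, e.g. --output_path
        elif "inputValue" in arg:
            argv.append(values[arg["inputValue"]])  # passed by value on the command line
        elif "inputPath" in arg or "outputPath" in arg:
            name = arg.get("inputPath") or arg.get("outputPath")
            argv.append(paths[name])  # passed as a local file path
        else:
            raise ValueError(f"unsupported placeholder: {arg!r}")
    return argv


if __name__ == "__main__":
    # command/args copied from preprocess_component.yaml; the paths are hypothetical.
    command = ["python3", "cifar10/cifar10_pre_process.py"]
    args = [
        "--output_path", {"outputPath": "output_data"},
        "--mlpipeline_ui_metadata", {"outputPath": "MLPipeline UI Metadata"},
    ]
    paths = {
        "output_data": "/tmp/outputs/output_data/data",
        "MLPipeline UI Metadata": "/tmp/outputs/MLPipeline_UI_Metadata/data",
    }
    print(command + resolve_args(args, values={}, paths=paths))
```

This is why the training script only has to accept plain `--flag value` pairs: by the time it runs, every placeholder has become a string or a path.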

This is the Dockerfile used to build the kfp_samples Docker image:

# Copyright (c) Facebook, Inc. and its affiliates.
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
ARG BASE_IMAGE=pytorch/pytorch:latest
FROM ${BASE_IMAGE}
COPY . .
RUN pip install -U pip
RUN pip install -U --no-cache-dir -r requirements.txt
RUN pip install pytorch-kfp-components
ENV PYTHONPATH /workspace
ENTRYPOINT /bin/bash

Notice how loosely requirements.txt is written: none of the packages are pinned to a version, and even the base image is pytorch/pytorch:latest.

boto3
image
matplotlib
pyarrow
sklearn
transformers
torchdata
webdataset
pandas
s3fs
wget
torch-model-archiver
minio
kfp
tensorboard
torchmetrics
pytorch-lightning

😅 Something will definitely go wrong later.
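A quick way to spot this problem is to flag every requirement that is not pinned with `==`. The checker below is a minimal sketch: it treats only exact `==` pins as pinned and skips pip options such as `--find-links`. Tools like `pip freeze` or pip-tools' `pip-compile` are the usual way to produce a fully pinned file.

```python
import re
from typing import List

# package name, optional [extras], then an exact "==" version specifier
_PINNED = re.compile(r"^[A-Za-z0-9._-]+(\[[^\]]*\])?\s*==\s*\S+")


def unpinned_requirements(text: str) -> List[str]:
    """Return the requirement lines that are not pinned to an exact version."""
    unpinned: List[str] = []
    for raw in text.splitlines():
        line = re.split(r"\s+#", raw, maxsplit=1)[0].strip()  # drop inline comments
        if not line or line.startswith("#") or line.startswith("-"):
            continue  # blank line, comment, or pip option such as --find-links
        if not _PINNED.match(line):
            unpinned.append(line)
    return unpinned


if __name__ == "__main__":
    original = "boto3\nsklearn\ntransformers\npytorch-lightning\n"
    print(unpinned_requirements(original))
```

Every line of the file above would be flagged, including the two that matter most later on, PyTorch (pulled in by the base image) and pytorch-lightning.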

But let’s continue working on the notebook.

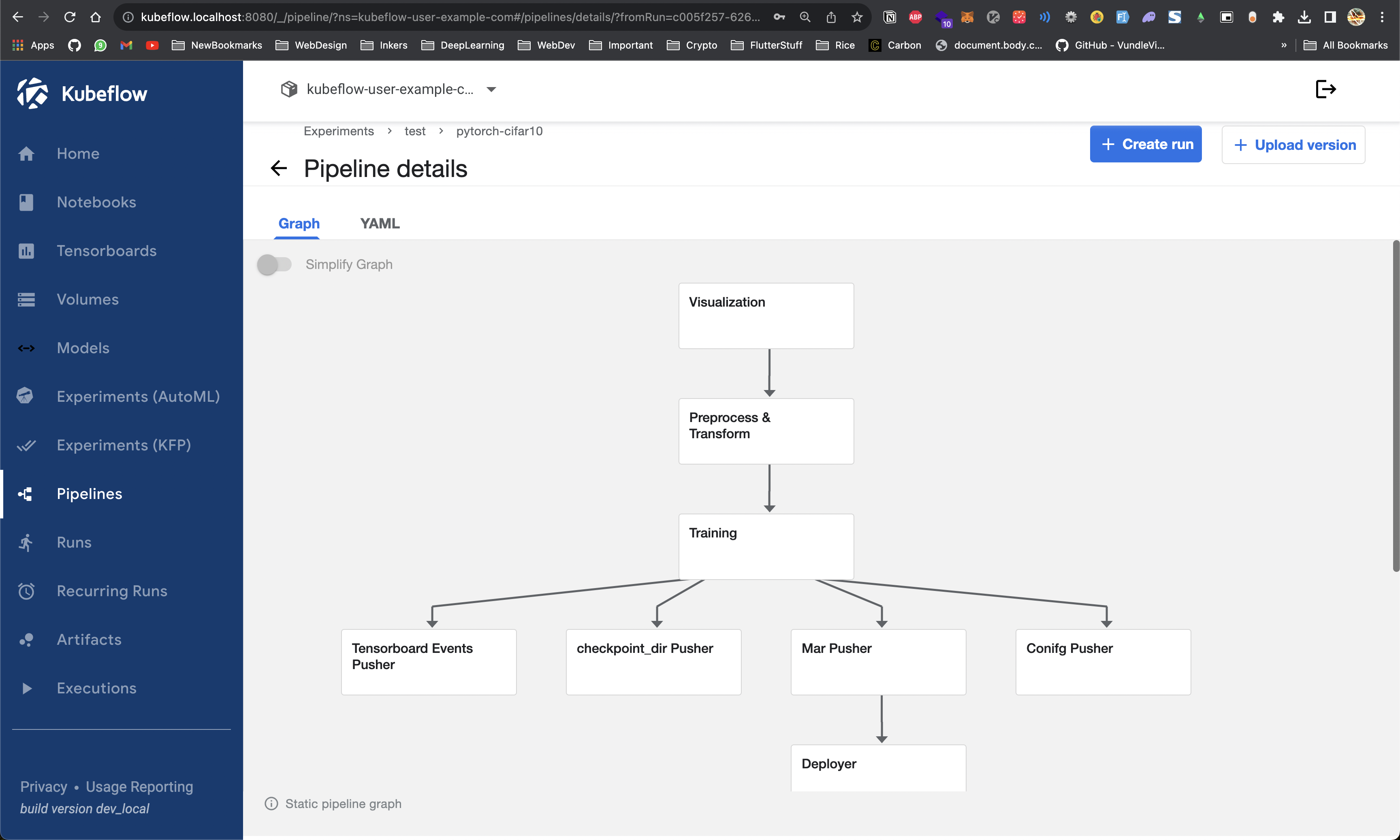

@dsl.pipeline(
    name="Training Cifar10 pipeline 3", description="Cifar 10 dataset pipeline 3"
)
def pytorch_cifar10(  # pylint: disable=too-many-arguments
    minio_endpoint=MINIO_ENDPOINT,
    log_bucket=LOG_BUCKET,
    log_dir=f"tensorboard/logs/{dsl.RUN_ID_PLACEHOLDER}",
    mar_path=f"mar/{dsl.RUN_ID_PLACEHOLDER}/model-store",
    config_prop_path=f"mar/{dsl.RUN_ID_PLACEHOLDER}/config",
    model_uri=f"s3://mlpipeline/mar/{dsl.RUN_ID_PLACEHOLDER}",
    tf_image=TENSORBOARD_IMAGE,
    deploy=DEPLOY_NAME,
    isvc_name=ISVC_NAME,
    model=MODEL_NAME,
    namespace=NAMESPACE,
    confusion_matrix_log_dir=f"confusion_matrix/{dsl.RUN_ID_PLACEHOLDER}/",
    checkpoint_dir="checkpoint_dir/cifar10",
    input_req=INPUT_REQUEST,
    cookie=COOKIE,
    ingress_gateway=INGRESS_GATEWAY,
):
    def sleep_op(seconds):
        """Sleep for a while."""
        return dsl.ContainerOp(
            name="Sleep " + str(seconds) + " seconds",
            image="python:alpine3.6",
            command=["sh", "-c"],
            arguments=[
                'python -c "import time; time.sleep($0)"',
                str(seconds)
            ],
        )

    """This method defines the pipeline tasks and operations"""
    pod_template_spec = json.dumps({
        "spec": {
            "containers": [{
                "env": [
                    {
                        "name": "AWS_ACCESS_KEY_ID",
                        "valueFrom": {
                            "secretKeyRef": {
                                "name": "mlpipeline-minio-artifact",
                                "key": "accesskey",
                            }
                        },
                    },
                    {
                        "name": "AWS_SECRET_ACCESS_KEY",
                        "valueFrom": {
                            "secretKeyRef": {
                                "name": "mlpipeline-minio-artifact",
                                "key": "secretkey",
                            }
                        },
                    },
                    {
                        "name": "AWS_REGION",
                        "value": "minio"
                    },
                    {
                        "name": "S3_ENDPOINT",
                        "value": f"{minio_endpoint}",
                    },
                    {
                        "name": "S3_USE_HTTPS",
                        "value": "0"
                    },
                    {
                        "name": "S3_VERIFY_SSL",
                        "value": "0"
                    },
                ]
            }]
        }
    })

    prepare_tb_task = prepare_tensorboard_op(
        log_dir_uri=f"s3://{log_bucket}/{log_dir}",
        image=tf_image,
        pod_template_spec=pod_template_spec,
    ).set_display_name("Visualization")

    prep_task = (
        prep_op().after(prepare_tb_task).set_display_name("Preprocess & Transform")
    )

    confusion_matrix_url = f"minio://{log_bucket}/{confusion_matrix_log_dir}"
    script_args = f"model_name=resnet.pth," \
                  f"confusion_matrix_url={confusion_matrix_url}"
    # For GPU, set number of devices and strategy type
    ptl_args = f"max_epochs=1, devices=0, strategy=None, profiler=pytorch, accelerator=auto"
    train_task = (
        train_op(
            input_data=prep_task.outputs["output_data"],
            script_args=script_args,
            ptl_arguments=ptl_args
        ).after(prep_task).set_display_name("Training")
        # For allocating resources, uncomment below lines
        # .set_memory_request('600M')
        # .set_memory_limit('1200M')
        # .set_cpu_request('700m')
        # .set_cpu_limit('1400m')
        # For GPU uncomment below line and set GPU limit and node selector
        # .set_gpu_limit(1).add_node_selector_constraint('cloud.google.com/gke-accelerator','nvidia-tesla-p4')
    )

    (
        minio_op(
            bucket_name="mlpipeline",
            folder_name=log_dir,
            input_path=train_task.outputs["tensorboard_root"],
            filename="",
        ).after(train_task).set_display_name("Tensorboard Events Pusher")
    )
    (
        minio_op(
            bucket_name="mlpipeline",
            folder_name=checkpoint_dir,
            input_path=train_task.outputs["checkpoint_dir"],
            filename="",
        ).after(train_task).set_display_name("checkpoint_dir Pusher")
    )
    minio_mar_upload = (
        minio_op(
            bucket_name="mlpipeline",
            folder_name=mar_path,
            input_path=train_task.outputs["checkpoint_dir"],
            filename="cifar10_test.mar",
        ).after(train_task).set_display_name("Mar Pusher")
    )
    (
        minio_op(
            bucket_name="mlpipeline",
            folder_name=config_prop_path,
            input_path=train_task.outputs["checkpoint_dir"],
            filename="config.properties",
        ).after(train_task).set_display_name("Conifg Pusher")
    )

    model_uri = str(model_uri)
    # pylint: disable=unused-variable
    isvc_yaml = """
    apiVersion: "serving.kserve.io/v1beta1"
    kind: "InferenceService"
    metadata:
      name: {}
      namespace: {}
    spec:
      predictor:
        serviceAccountName: sa
        pytorch:
          protocolVersion: v2
          storageUri: {}
          resources:
            requests:
              cpu: 1
              memory: 1Gi
            limits:
              cpu: 1
              memory: 1Gi
    """.format(deploy, namespace, model_uri)

    # Update inferenceservice_yaml for GPU inference
    deploy_task = (
        deploy_op(action="apply", inferenceservice_yaml=isvc_yaml)
        .after(minio_mar_upload).set_display_name("Deployer")
    )
    # Wait here for model to be loaded in torchserve for inference
    sleep_task = sleep_op(60).after(deploy_task).set_display_name("Sleep")
    # Make Inference request
    pred_task = (
        pred_op(
            host_name=isvc_name,
            input_request=input_req,
            cookie=cookie,
            url=ingress_gateway,
            model=model,
            inference_type="infer",
        ).after(sleep_task).set_display_name("Prediction")
    )
    (
        pred_op(
            host_name=isvc_name,
            input_request=input_req,
            cookie=cookie,
            url=ingress_gateway,
            model=model,
            inference_type="explain",
        ).after(pred_task).set_display_name("Explanation")
    )

    dsl.get_pipeline_conf().add_op_transformer(
        use_k8s_secret(
            secret_name="mlpipeline-minio-artifact",
            k8s_secret_key_to_env={
                "secretkey": "MINIO_SECRET_KEY",
                "accesskey": "MINIO_ACCESS_KEY",
            },
        )
    )

Compile the pipeline:

compiler.Compiler().compile(pytorch_cifar10, 'pytorch.tar.gz', type_check=True)

And start a run of the pipeline:

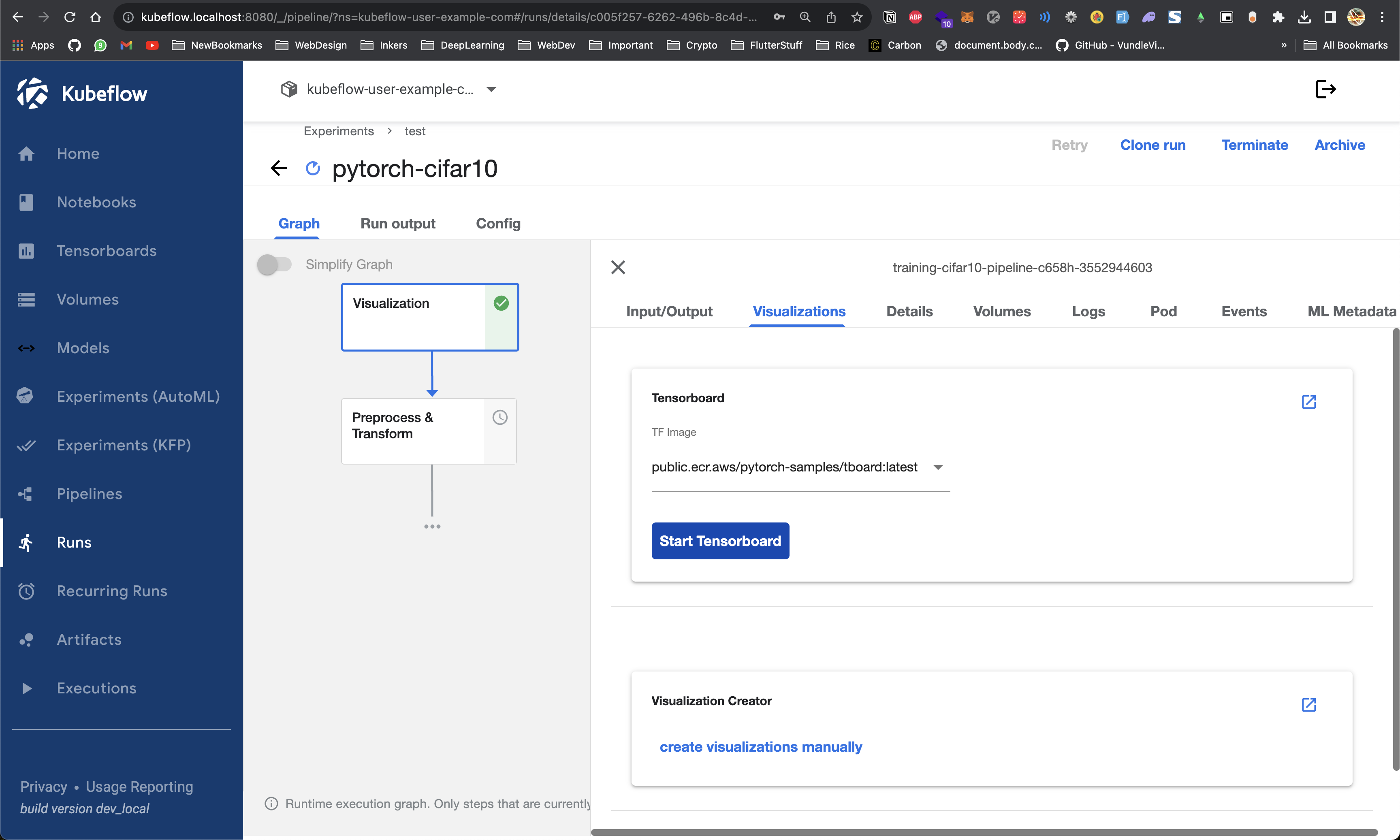

run = client.run_pipeline(my_experiment.id, 'pytorch-cifar10', 'pytorch.tar.gz')

Let’s try to understand what’s going on.
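The `.after()` calls in `pytorch_cifar10` define a DAG. Writing the dependencies down by hand (keyed by the display names set in the pipeline, including its "Conifg Pusher" spelling) and sorting them with Python's `graphlib` (3.9+) gives one valid execution order. This is a sketch for reasoning about the pipeline, not how KFP or Argo actually schedule the steps.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# task -> tasks it runs .after(), transcribed by hand from pytorch_cifar10
PIPELINE_DEPS: Dict[str, Set[str]] = {
    "Visualization": set(),
    "Preprocess & Transform": {"Visualization"},
    "Training": {"Preprocess & Transform"},
    "Tensorboard Events Pusher": {"Training"},
    "checkpoint_dir Pusher": {"Training"},
    "Mar Pusher": {"Training"},
    "Conifg Pusher": {"Training"},  # display name spelled as in the pipeline
    "Deployer": {"Mar Pusher"},
    "Sleep": {"Deployer"},
    "Prediction": {"Sleep"},
    "Explanation": {"Prediction"},
}


def execution_order(deps: Dict[str, Set[str]]) -> List[str]:
    """One valid order in which the steps could run."""
    return list(TopologicalSorter(deps).static_order())


def upstream(deps: Dict[str, Set[str]], task: str) -> Set[str]:
    """All tasks that must finish before `task` starts."""
    seen: Set[str] = set()
    stack = list(deps[task])
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(deps[current])
    return seen


if __name__ == "__main__":
    print(execution_order(PIPELINE_DEPS))
    print("Deployer waits for:", sorted(upstream(PIPELINE_DEPS, "Deployer")))
```

One thing the graph makes visible: Deployer only depends on Mar Pusher, so nothing orders it after the config.properties upload.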

Tensorboard Output type

https://www.kubeflow.org/docs/components/pipelines/v1/sdk/output-viewer/#tensorboard

name: Create Tensorboard visualization
description: |
  Pre-creates Tensorboard visualization for a given Log dir URI.
  This way the Tensorboard can be viewed before the training completes.
  The output Log dir URI should be passed to a trainer component that will write Tensorboard logs to that directory.
inputs:
- {name: Log dir URI, description: 'Tensorboard log path'}
- {name: Image, default: '', description: 'Tensorboard docker image'}
- {name: Pod Template Spec, default: 'null', description: 'Pod template specification'}
outputs:
- {name: Log dir URI, description: 'Tensorboard log output'}
- {name: MLPipeline UI Metadata, description: 'MLPipeline UI Metadata output'}
implementation:
  container:
    image: public.ecr.aws/pytorch-samples/alpine:latest
    command:
    - sh
    - -ex
    - -c
    - |
      log_dir="$0"
      output_log_dir_path="$1"
      output_metadata_path="$2"
      pod_template_spec="$3"
      image="$4"
      mkdir -p "$(dirname "$output_log_dir_path")"
      mkdir -p "$(dirname "$output_metadata_path")"
      echo "$log_dir" > "$output_log_dir_path"
      echo '
        {
          "outputs" : [{
            "type": "tensorboard",
            "source": "'"$log_dir"'",
            "image": "'"$image"'",
            "pod_template_spec": '"$pod_template_spec"'
          }]
        }
      ' >"$output_metadata_path"
    - {inputValue: Log dir URI}
    - {outputPath: Log dir URI}
    - {outputPath: MLPipeline UI Metadata}
    - {inputValue: Pod Template Spec}
    - {inputValue: Image}
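All this component really does is write a small JSON document to its MLPipeline UI Metadata output; the Kubeflow Pipelines UI reads the `tensorboard` entry from it and offers a TensorBoard viewer for the log directory. The sketch below builds the same document in Python with `json.dumps`, which also escapes characters (such as quotes) that the shell `echo` would splice in raw. The function name is hypothetical and the image name in the example is a placeholder for `TENSORBOARD_IMAGE`.

```python
import json


def tensorboard_ui_metadata(log_dir_uri: str, image: str, pod_template_spec: str = "null") -> str:
    """Build the mlpipeline-ui-metadata JSON that the component's shell script echoes."""
    metadata = {
        "outputs": [
            {
                "type": "tensorboard",
                "source": log_dir_uri,
                "image": image,
                # The script splices the spec in unquoted, so it must already be valid JSON.
                "pod_template_spec": json.loads(pod_template_spec),
            }
        ]
    }
    return json.dumps(metadata, indent=2)


if __name__ == "__main__":
    spec = json.dumps({"spec": {"containers": [{"env": [{"name": "S3_USE_HTTPS", "value": "0"}]}]}})
    print(tensorboard_ui_metadata(
        "s3://mlpipeline/tensorboard/logs/<run-id>",  # hypothetical run ID
        "<tensorboard-image>",  # placeholder for TENSORBOARD_IMAGE
        spec,
    ))
```

The `pod_template_spec` is what carries the MinIO credentials and `S3_ENDPOINT` into the viewer pod, so it can read the logs from MinIO.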

❯ kgpo -n kubeflow-user-example-com
NAME                                               READY   STATUS      RESTARTS   AGE
ml-pipeline-ui-artifact-6cb7b9f6fd-ls6nr           2/2     Running     0          45m
ml-pipeline-visualizationserver-7b5889796d-tthgs   2/2     Running     0          45m
test-0                                             2/2     Running     0          12m
training-cifar10-pipeline-dh2wf-1180343131         2/2     Running     0          6m44s
training-cifar10-pipeline-dh2wf-4094479202         0/2     Completed   0          7m21s

Here’s the source code of the training step (cifar10/cifar10_pytorch.py):

# !/usr/bin/env/python3
# Copyright (c) Facebook, Inc. and its affiliates.
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
"""Cifar10 training script."""
import os
import json
from pathlib import Path
from argparse import ArgumentParser
from pytorch_lightning.loggers import TensorBoardLogger
from pytorch_lightning.callbacks import (
    EarlyStopping,
    LearningRateMonitor,
    ModelCheckpoint,
)
from pytorch_kfp_components.components.visualization.component import (
    Visualization,
)
from pytorch_kfp_components.components.trainer.component import Trainer
from pytorch_kfp_components.components.mar.component import MarGeneration
from pytorch_kfp_components.components.utils.argument_parsing import (
    parse_input_args,
)

# Argument parser for user defined paths
parser = ArgumentParser()

parser.add_argument(
    "--tensorboard_root",
    type=str,
    default="output/tensorboard",
    help="Tensorboard Root path (default: output/tensorboard)",
)

parser.add_argument(
    "--checkpoint_dir",
    type=str,
    default="output/train/models",
    help="Path to save model checkpoints (default: output/train/models)",
)

parser.add_argument(
    "--dataset_path",
    type=str,
    default="output/processing",
    help="Cifar10 Dataset path (default: output/processing)",
)

parser.add_argument(
    "--model_name",
    type=str,
    default="resnet.pth",
    help="Name of the model to be saved as (default: resnet.pth)",
)

parser.add_argument(
    "--mlpipeline_ui_metadata",
    default="mlpipeline-ui-metadata.json",
    type=str,
    help="Path to write mlpipeline-ui-metadata.json",
)

parser.add_argument(
    "--mlpipeline_metrics",
    default="mlpipeline-metrics.json",
    type=str,
    help="Path to write mlpipeline-metrics.json",
)

parser.add_argument(
    "--script_args",
    type=str,
    help="Arguments for bert agnews classification script",
)

parser.add_argument(
    "--ptl_args", type=str, help="Arguments specific to PTL trainer"
)

parser.add_argument("--trial_id", default=0, type=int, help="Trial id")

parser.add_argument(
    "--model_params",
    default=None,
    type=str,
    help="Model parameters for trainer"
)

parser.add_argument(
    "--results", default="results.json", type=str, help="Training results"
)

# parser = pl.Trainer.add_argparse_args(parent_parser=parser)
args = vars(parser.parse_args())
script_args = args["script_args"]
ptl_args = args["ptl_args"]
trial_id = args["trial_id"]

TENSORBOARD_ROOT = args["tensorboard_root"]
CHECKPOINT_DIR = args["checkpoint_dir"]
DATASET_PATH = args["dataset_path"]

script_dict: dict = parse_input_args(input_str=script_args)
script_dict["checkpoint_dir"] = CHECKPOINT_DIR

ptl_dict: dict = parse_input_args(input_str=ptl_args)

# Enabling Tensorboard Logger, ModelCheckpoint, Earlystopping
lr_logger = LearningRateMonitor()
tboard = TensorBoardLogger(TENSORBOARD_ROOT, log_graph=True)
early_stopping = EarlyStopping(
    monitor="val_loss", mode="min", patience=5, verbose=True
)
checkpoint_callback = ModelCheckpoint(
    dirpath=CHECKPOINT_DIR,
    filename="cifar10_{epoch:02d}",
    save_top_k=1,
    verbose=True,
    monitor="val_loss",
    mode="min",
)

if "accelerator" in ptl_dict and ptl_dict["accelerator"] == "None":
    ptl_dict["accelerator"] = None

# Setting the trainer specific arguments
trainer_args = {
    "logger": tboard,
    "checkpoint_callback": True,
    "callbacks": [lr_logger, early_stopping, checkpoint_callback],
}

if not ptl_dict["max_epochs"]:
    trainer_args["max_epochs"] = 1
else:
    trainer_args["max_epochs"] = ptl_dict["max_epochs"]

if "profiler" in ptl_dict and ptl_dict["profiler"] != "":
    trainer_args["profiler"] = ptl_dict["profiler"]

# Setting the datamodule specific arguments
data_module_args = {"train_glob": DATASET_PATH}

# Creating parent directories
Path(TENSORBOARD_ROOT).mkdir(parents=True, exist_ok=True)
Path(CHECKPOINT_DIR).mkdir(parents=True, exist_ok=True)

# Updating all the input parameter to PTL dict
trainer_args.update(ptl_dict)

if "model_params" in args and args["model_params"] is not None:
    args.update(json.loads(args["model_params"]))

# Initiating the training process
trainer = Trainer(
    module_file="cifar10_train.py",
    data_module_file="cifar10_datamodule.py",
    module_file_args=args,
    data_module_args=data_module_args,
    trainer_args=trainer_args,
)

model = trainer.ptl_trainer.lightning_module

if trainer.ptl_trainer.global_rank == 0:
    # Mar file generation
    cifar_dir, _ = os.path.split(os.path.abspath(__file__))

    mar_config = {
        "MODEL_NAME": "cifar10_test",
        "MODEL_FILE": os.path.join(cifar_dir, "cifar10_train.py"),
        "HANDLER": os.path.join(cifar_dir, "cifar10_handler.py"),
        "SERIALIZED_FILE": os.path.join(CHECKPOINT_DIR, script_dict["model_name"]),
        "VERSION": "1",
        "EXPORT_PATH": CHECKPOINT_DIR,
        "CONFIG_PROPERTIES": os.path.join(cifar_dir, "config.properties"),
        "EXTRA_FILES": "{},{}".format(
            os.path.join(cifar_dir, "class_mapping.json"),
            os.path.join(cifar_dir, "classifier.py"),
        ),
        "REQUIREMENTS_FILE": os.path.join(cifar_dir, "requirements.txt"),
    }

    MarGeneration(mar_config=mar_config, mar_save_path=CHECKPOINT_DIR)

    classes = [
        "airplane",
        "automobile",
        "bird",
        "cat",
        "deer",
        "dog",
        "frog",
        "horse",
        "ship",
        "truck",
    ]

    # print(dir(trainer.ptl_trainer.model.module))
    # model = trainer.ptl_trainer.model

    target_index_list = list(set(model.target))

    class_list = []
    for index in target_index_list:
        class_list.append(classes[index])

    confusion_matrix_dict = {
        "actuals": model.target,
        "preds": model.preds,
        "classes": class_list,
        "url": script_dict["confusion_matrix_url"],
    }

    test_accuracy = round(float(model.test_acc.compute()), 2)

    print("Model test accuracy: ", test_accuracy)

    if "model_params" in args and args["model_params"] is not None:
        data = {}
        data[trial_id] = test_accuracy

        Path(os.path.dirname(args["results"])).mkdir(
            parents=True, exist_ok=True
        )

        results_file = Path(args["results"])
        if results_file.is_file():
            with open(results_file, "r") as fp:
                old_data = json.loads(fp.read())
            data.update(old_data)

        with open(results_file, "w") as fp:
            fp.write(json.dumps(data))

    visualization_arguments = {
        "input": {
            "tensorboard_root": TENSORBOARD_ROOT,
            "checkpoint_dir": CHECKPOINT_DIR,
            "dataset_path": DATASET_PATH,
            "model_name": script_dict["model_name"],
            "confusion_matrix_url": script_dict["confusion_matrix_url"],
        },
        "output": {
            "mlpipeline_ui_metadata": args["mlpipeline_ui_metadata"],
            "mlpipeline_metrics": args["mlpipeline_metrics"],
        },
    }

    markdown_dict = {"storage": "inline", "source": visualization_arguments}

    print("Visualization Arguments: ", markdown_dict)

    visualization = Visualization(
        test_accuracy=test_accuracy,
        confusion_matrix_dict=confusion_matrix_dict,
        mlpipeline_ui_metadata=args["mlpipeline_ui_metadata"],
        mlpipeline_metrics=args["mlpipeline_metrics"],
        markdown=markdown_dict,
    )

    checpoint_dir_contents = os.listdir(CHECKPOINT_DIR)
    print(f"Checkpoint Directory Contents: {checpoint_dir_contents}")

Which is defined in the pipeline as:

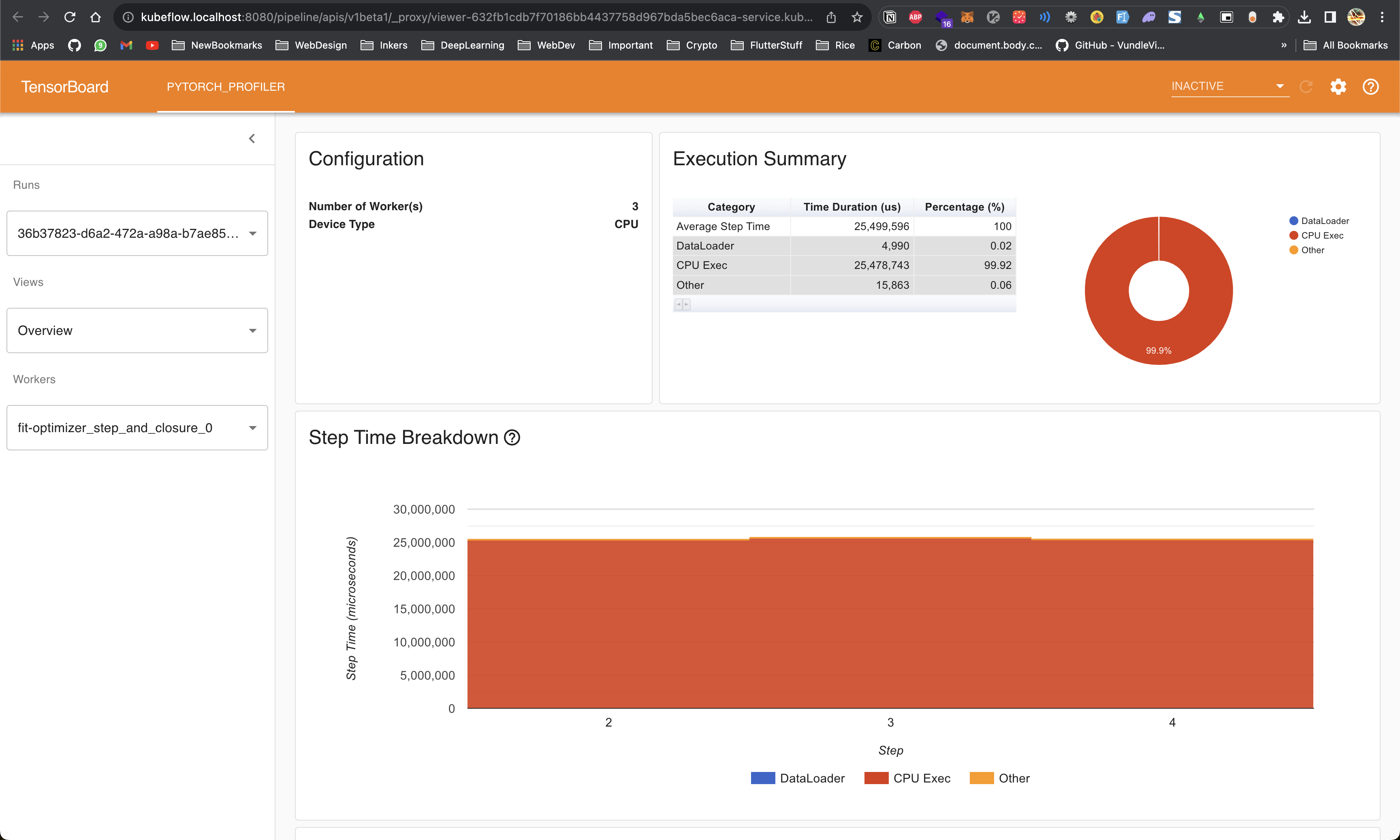

ptl_args = f"max_epochs=1, devices=0, strategy=None, profiler=pytorch, accelerator=auto"
train_task = (
    train_op(
        input_data=prep_task.outputs["output_data"],
        script_args=script_args,
        ptl_arguments=ptl_args
    ).after(prep_task).set_display_name("Training")
    # For allocating resources, uncomment below lines
    # .set_memory_request('600M')
    # .set_memory_limit('1200M')
    # .set_cpu_request('700m')
    # .set_cpu_limit('1400m')
    # For GPU uncomment below line and set GPU limit and node selector
    # .set_gpu_limit(1).add_node_selector_constraint('cloud.google.com/gke-accelerator','nvidia-tesla-p4')
)
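The training script turns this comma-separated `ptl_args` string into a dict with `parse_input_args` from pytorch_kfp_components and merges it into `trainer_args`. We don't reproduce that function here; the parser below is a hypothetical stand-in (it converts numeric values and keeps everything else as strings), combined with the script's one special case that maps `accelerator` "None" to Python `None`.

```python
from typing import Dict, Optional, Union

Value = Union[str, int, float, None]


def parse_kv_args(arg_str: Optional[str]) -> Dict[str, Value]:
    """Hypothetical stand-in for parse_input_args: "a=1, b=x" -> {"a": 1, "b": "x"}."""
    parsed: Dict[str, Value] = {}
    for pair in (arg_str or "").split(","):
        pair = pair.strip()
        if not pair:
            continue
        key, sep, raw = pair.partition("=")
        if not sep:
            raise ValueError(f"expected key=value, got {pair!r}")
        raw = raw.strip()
        value: Value = raw
        for cast in (int, float):  # try numeric conversions, fall back to str
            try:
                value = cast(raw)
                break
            except ValueError:
                pass
        parsed[key.strip()] = value
    return parsed


def trainer_overrides(ptl_args: str) -> Dict[str, Value]:
    """Apply the same accelerator special case as cifar10_pytorch.py."""
    ptl_dict = parse_kv_args(ptl_args)
    if ptl_dict.get("accelerator") == "None":
        ptl_dict["accelerator"] = None
    return ptl_dict


if __name__ == "__main__":
    print(trainer_overrides("max_epochs=1, devices=0, strategy=None, profiler=pytorch, accelerator=auto"))
```

In this sketch `strategy` stays the string "None", because only `accelerator` is special-cased; that kind of silent mismatch is easy to miss with string-encoded trainer arguments.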

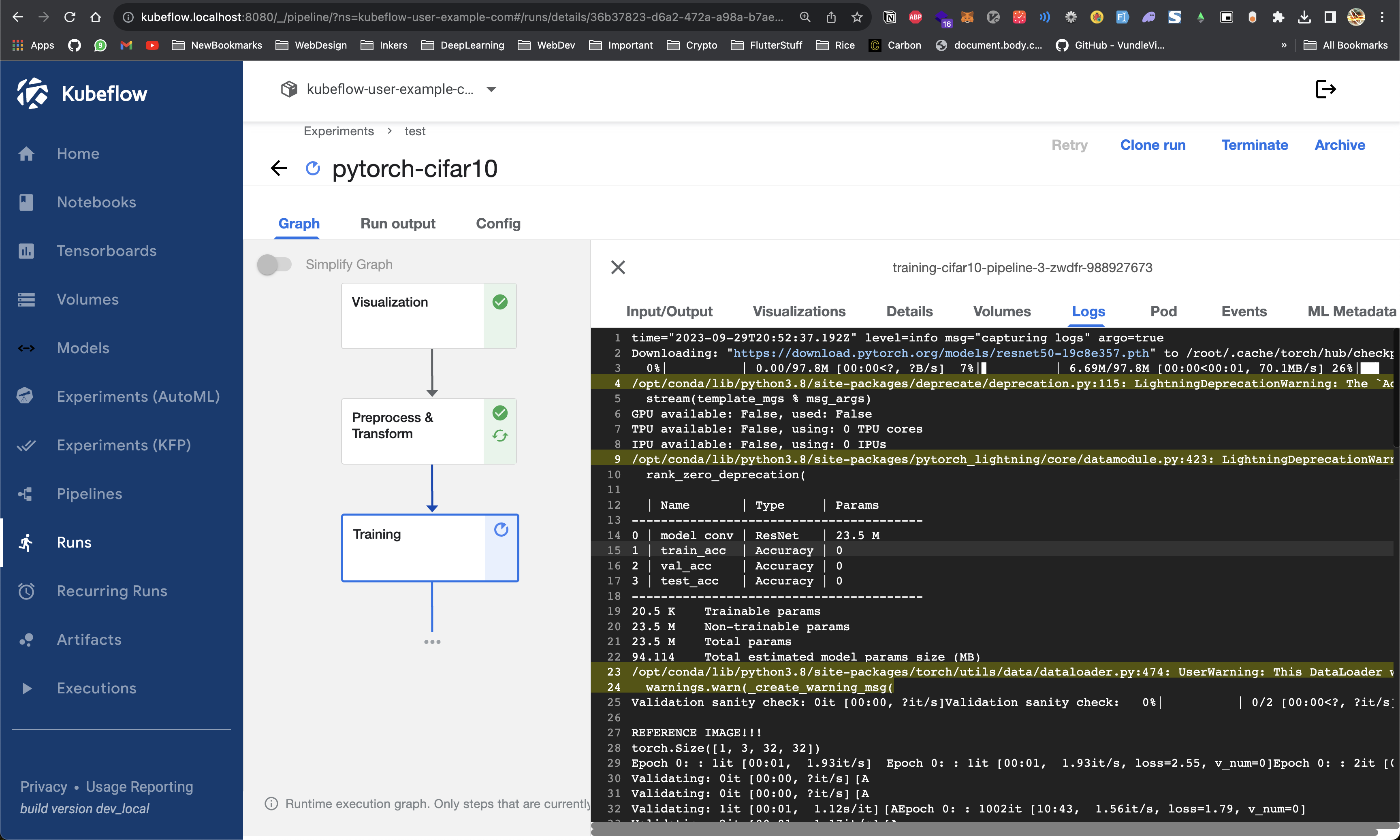

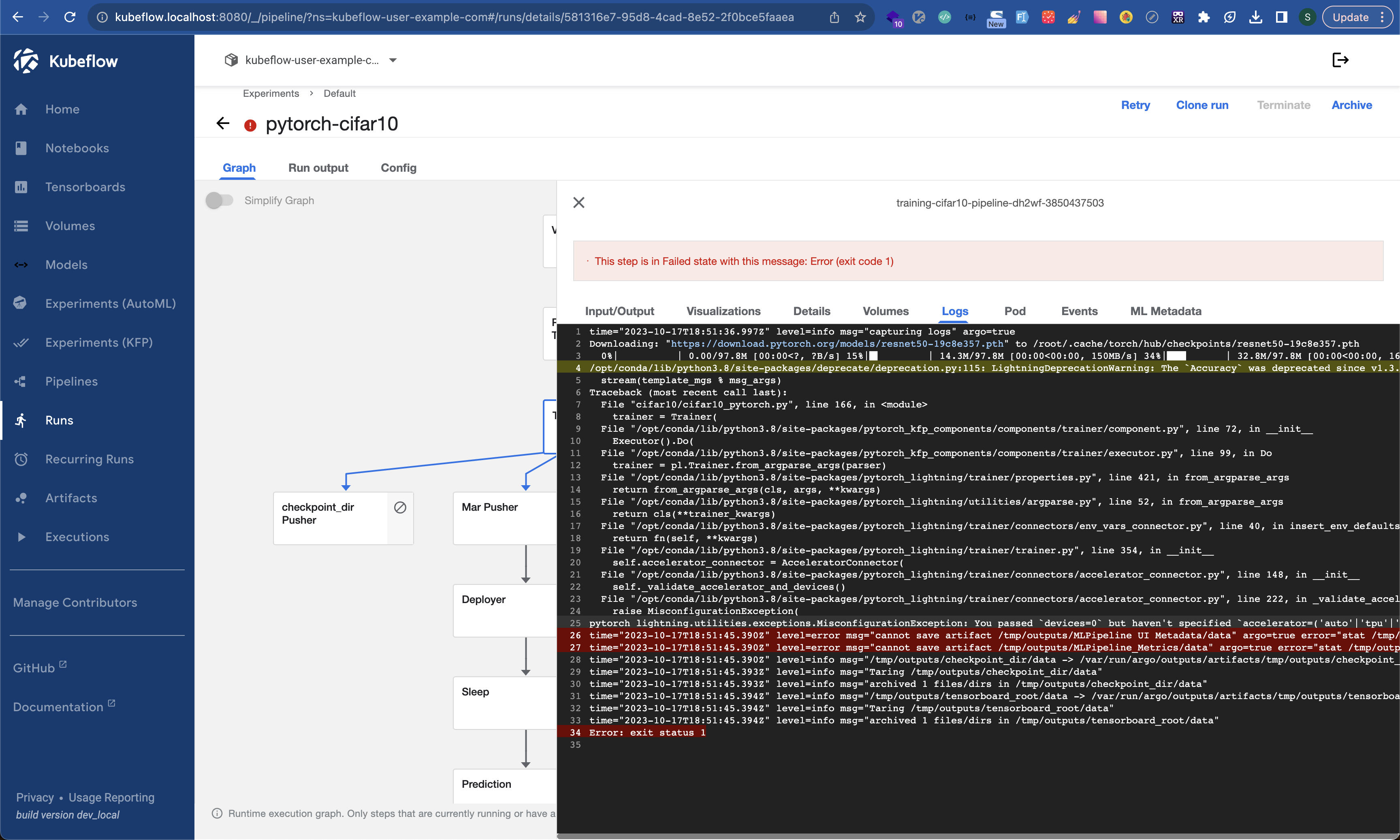

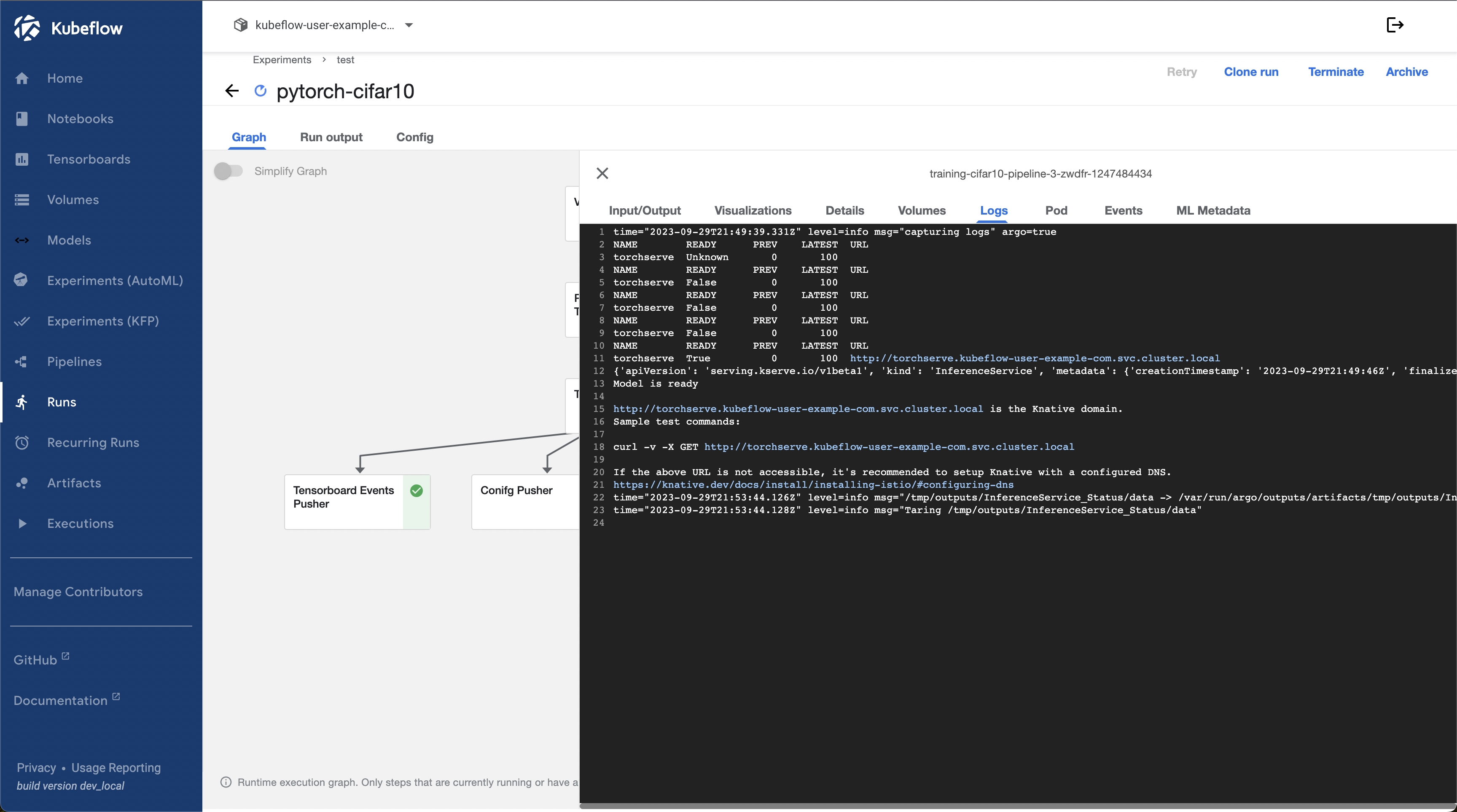

You can also see the pod logs for the same step:

klo training-cifar10-pipeline-dh2wf-3850437503 -n kubeflow-user-example-com

We just have to add accelerator=auto to fix it!

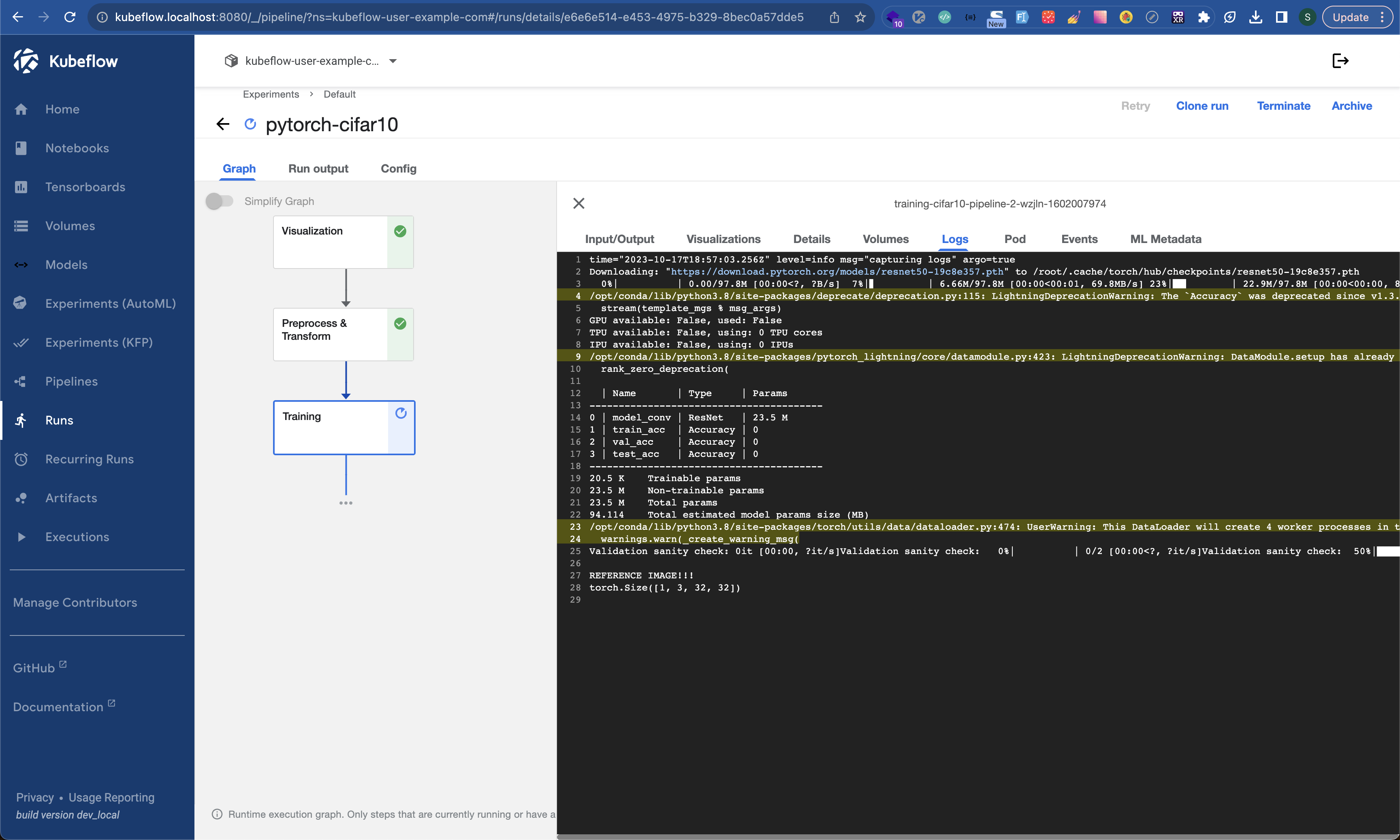

ptl_args = f"max_epochs=1, devices=0, strategy=None, profiler=pytorch, accelerator=auto"

Now redeploy the pipeline.

Training Started

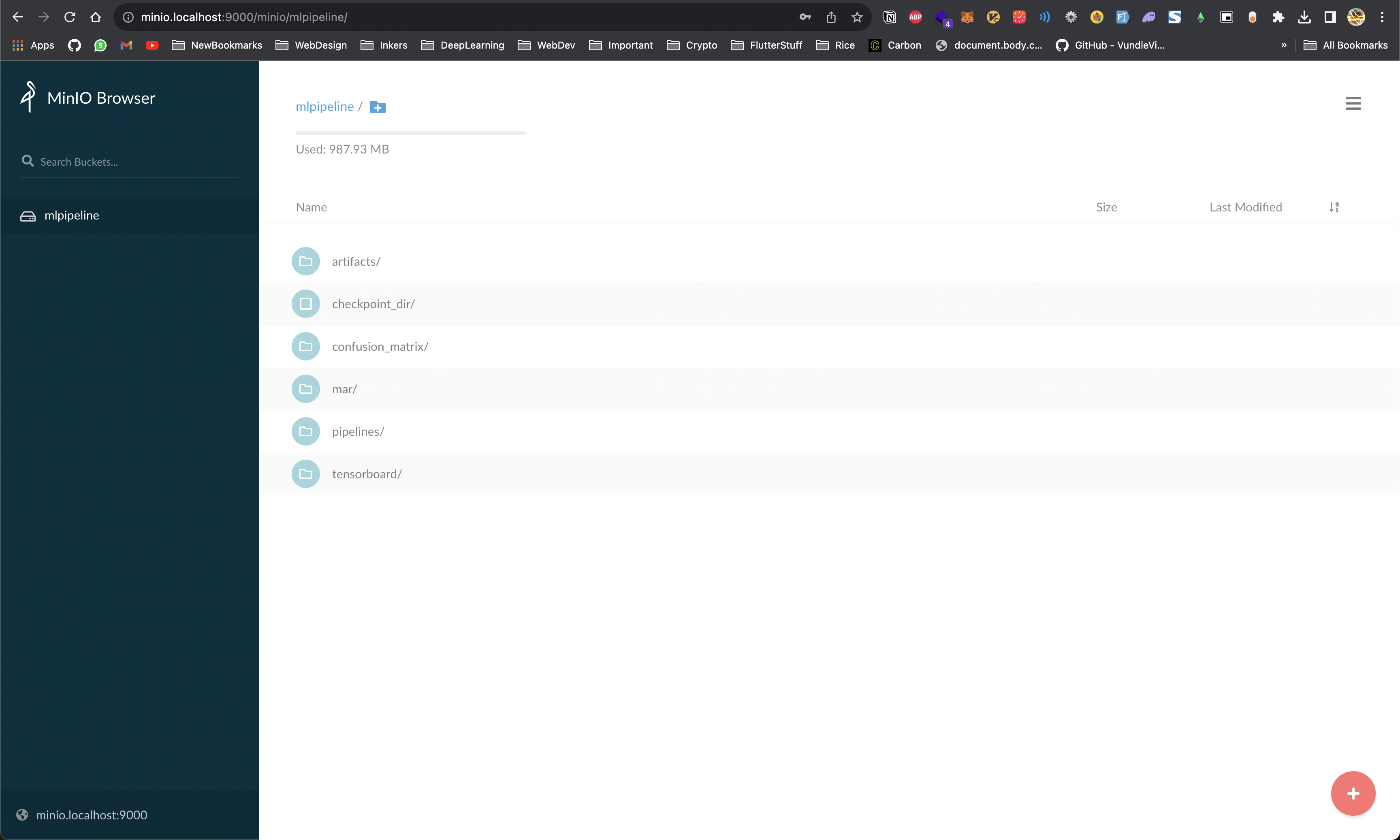

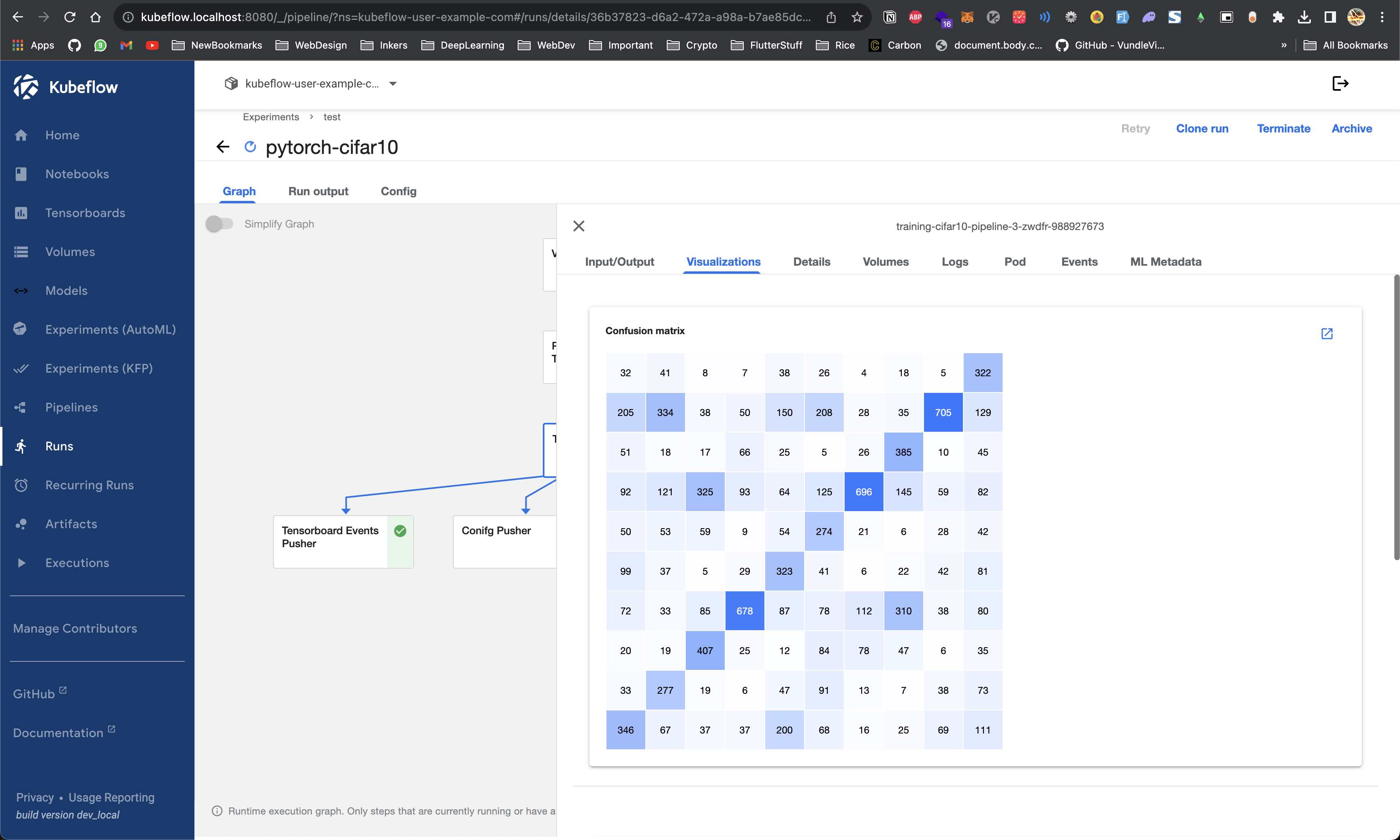

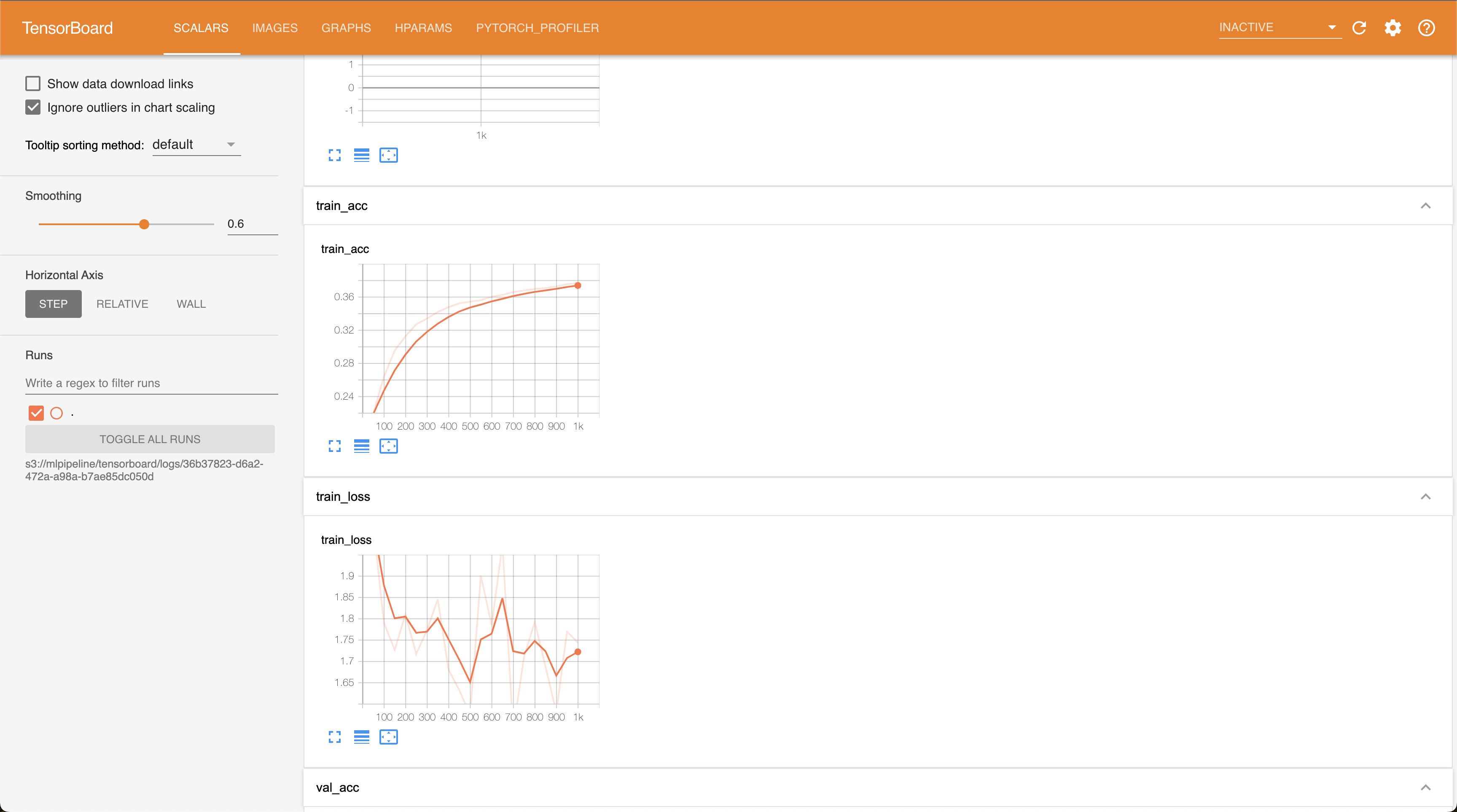

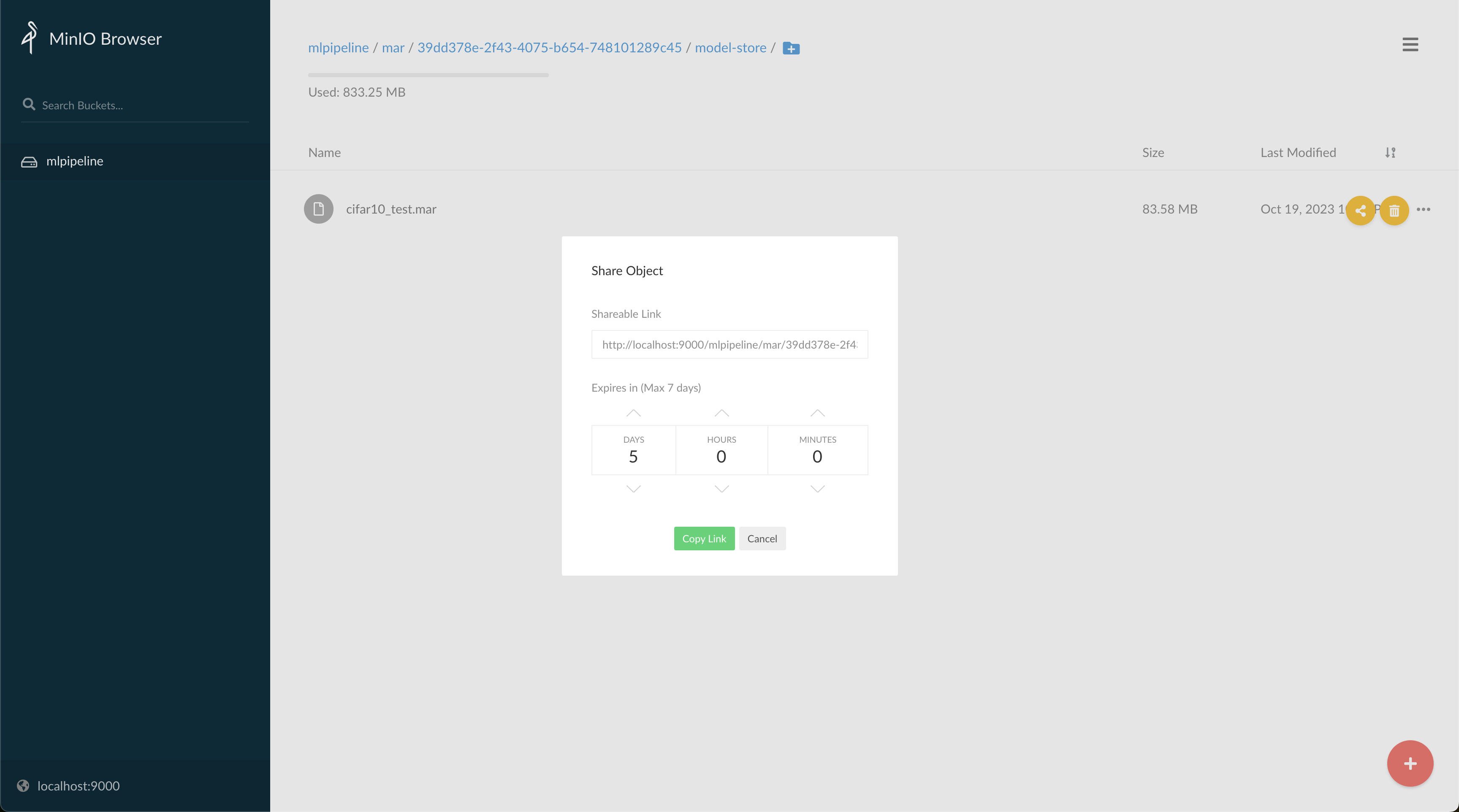

We can see the artifacts generated by the run in MinIO. Port-forward the MinIO service:

kubectl port-forward -n kubeflow svc/minio-service 9000:9000

and log in with access key minio and secret key minio123.

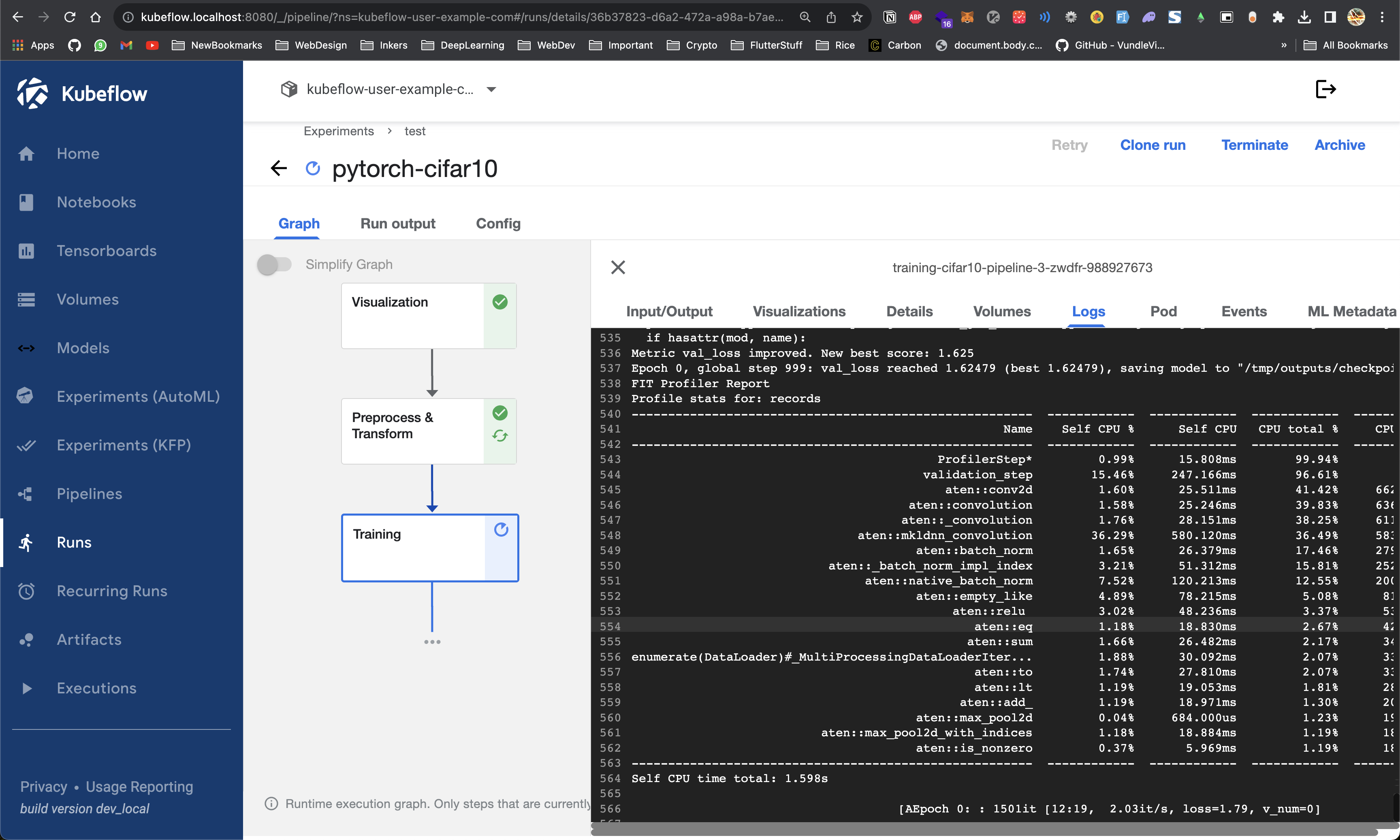

❯ k top pod -n kubeflow-user-example-com

NAME CPU(cores) MEMORY(bytes)

ml-pipeline-ui-artifact-6cb7b9f6fd-5r44w 5m 118Mi

ml-pipeline-visualizationserver-7b5889796d-6mxm6 2m 138Mi

test-notebook-0 5m 606Mi

training-cifar10-pipeline-3-zwdfr-988927673 1102m 1764Mi

viewer-1634c2300b6318814b78b58b7e6a74a106c1a5f6-deploymentvzxnt 8m 466Mi

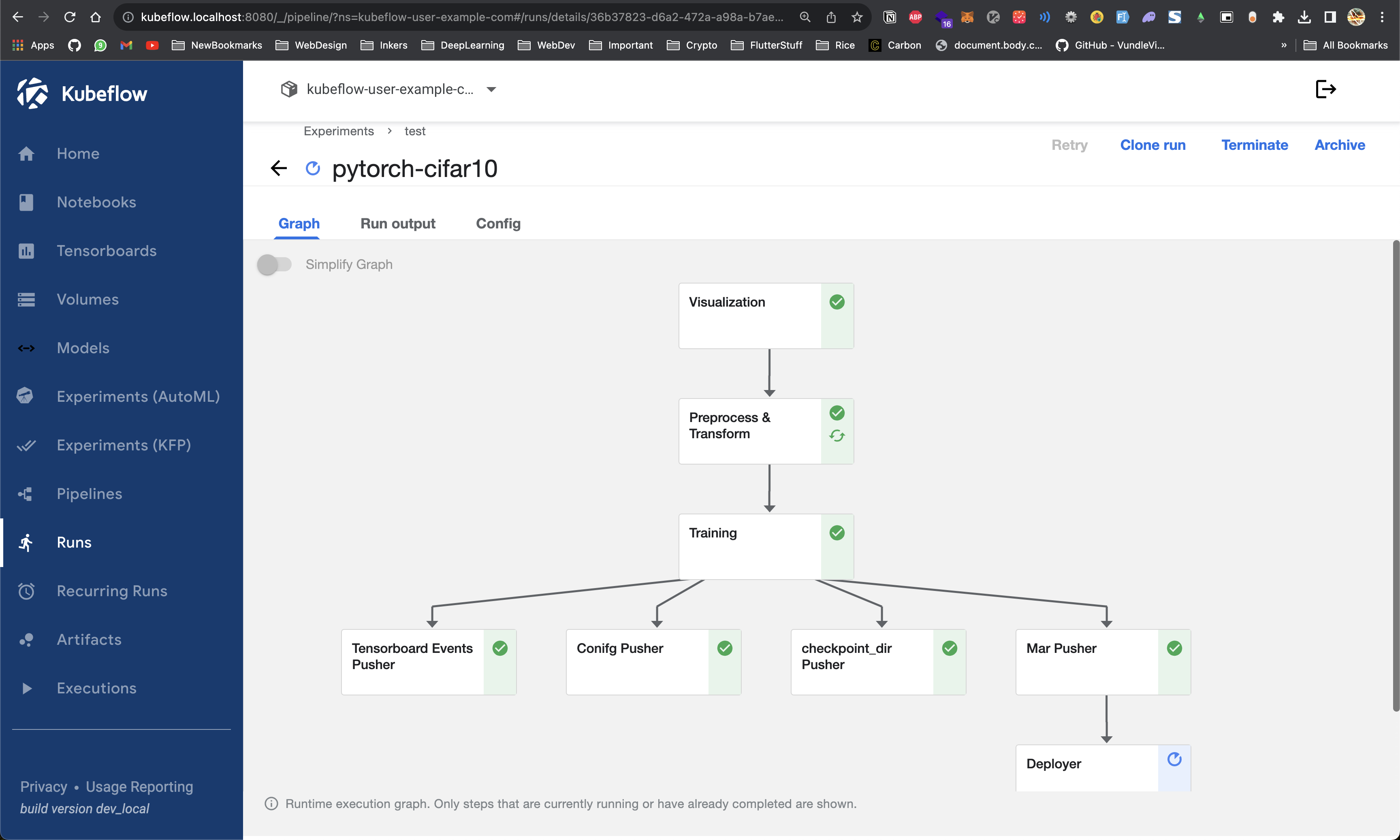

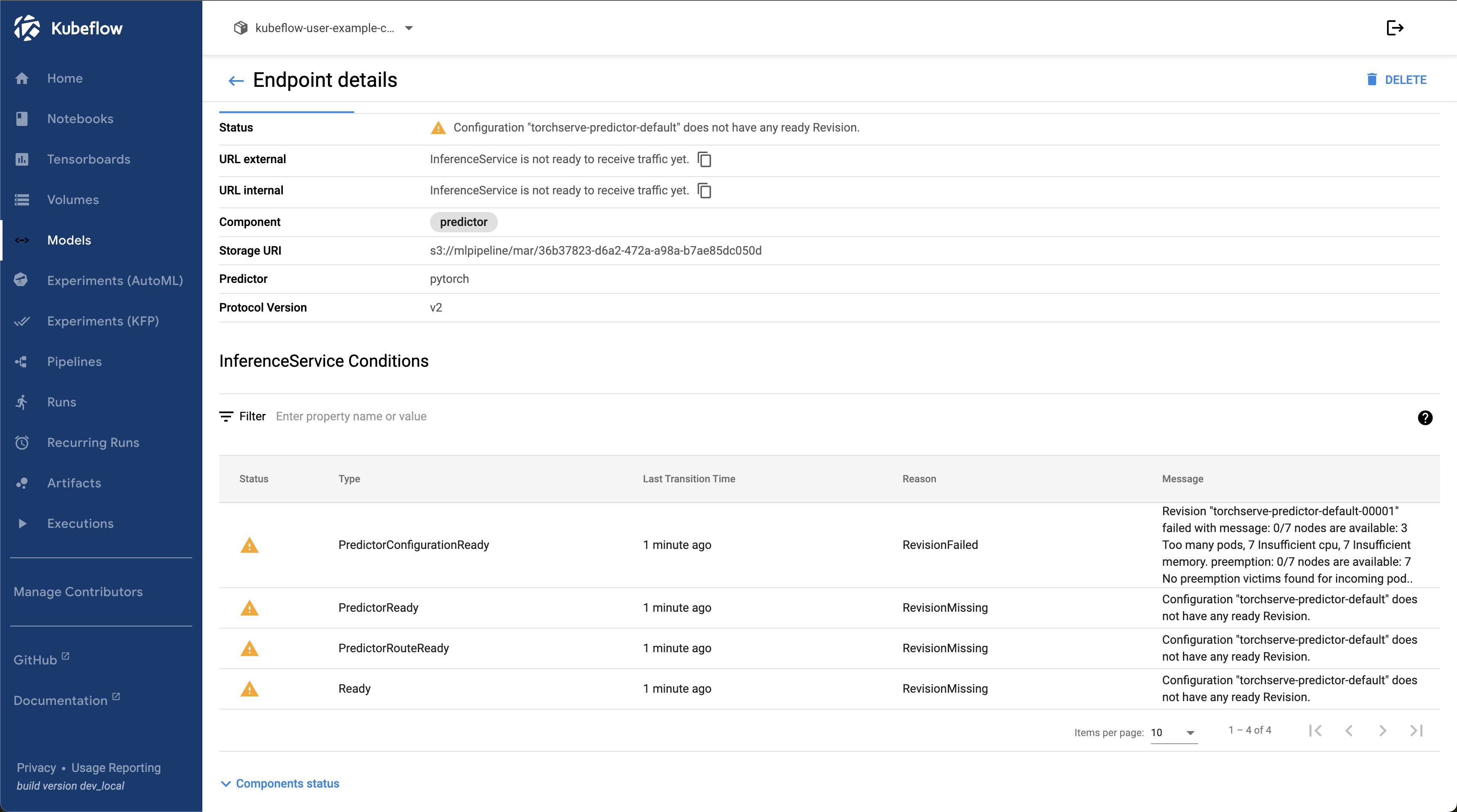

viewer-632fb1cdb7f70186bb4437758d967bda5bec6aca-deploymentjsjjk 7m 369MiOnce the training is done, The model is packaged into .mar file and an KServe Inference Service is created

Troubleshooting Steps

- First, check the pod logs to see if something is erroring out during execution.
- Describe the pods to check why they are not getting scheduled.
- Check the ReplicaSet's events (kubectl describe replicaset) to see why it is not able to schedule its pods.
- Delete a pod to restart a service; its controller recreates it.
- Check the logs of the model deployment (the InferenceService predictor pod) to see what's going wrong.
- If caching is the problem, you can always start a pipeline run without the cache by setting enable_caching=False:

run = client.run_pipeline(my_experiment.id, 'pytorch-cifar10-new-new', 'pytorch.tar.gz', enable_caching=False)

Create a Node Group with More CPU and Memory

- name: ng-spot-large

instanceType: t3a.2xlarge

desiredCapacity: 1

ssh:

allow: true # will use ~/.ssh/id_rsa.pub as the default ssh key

iam:

withAddonPolicies:

autoScaler: true

ebs: true

efs: true

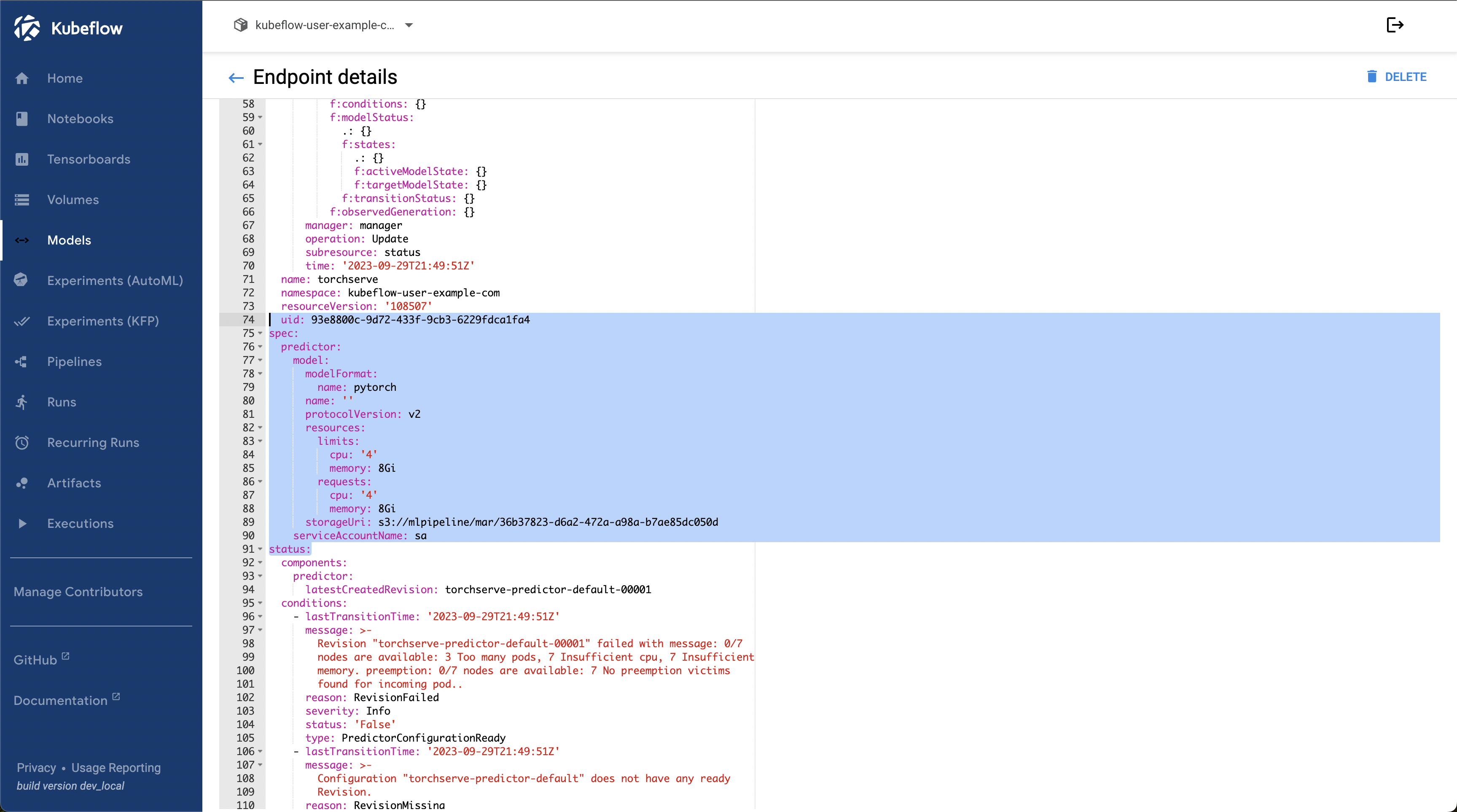

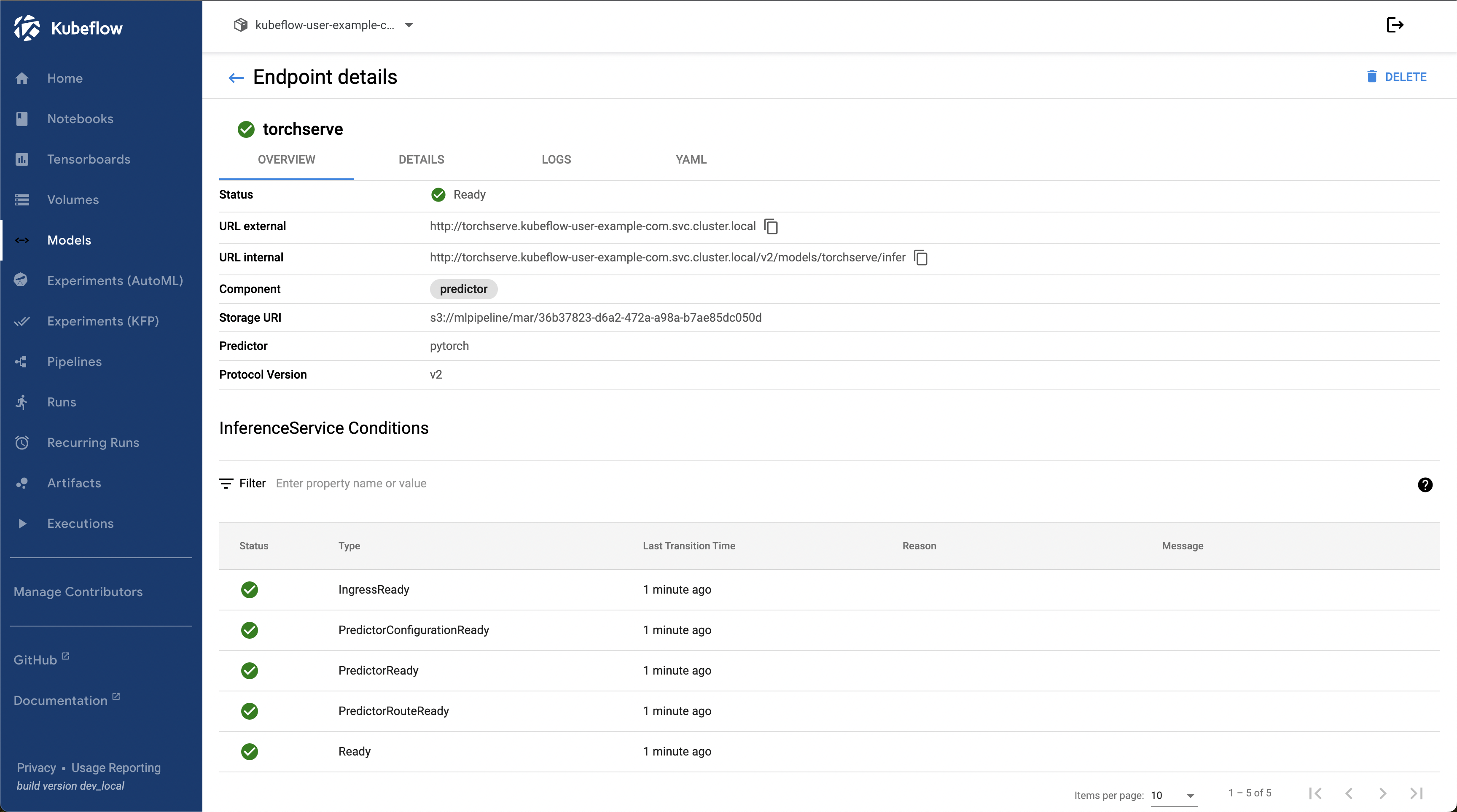

awsLoadBalancerController: trueModel should be deployed now!

Warning  FailedScheduling  2m34s (x2 over 7m34s)  default-scheduler  0/6 nodes are available: 6 Insufficient cpu, 6 Insufficient memory. preemption: 0/6 nodes are available: 6 No preemption victims found for incoming pod.
Normal   Scheduled         116s                   default-scheduler  Successfully assigned kubeflow-user-example-com/torchserve-predictor-default-00001-deployment-75b9977f84-qdf2h to ip-192-168-52-227.ap-south-1.compute.internal
Normal   Pulling           115s                   kubelet            Pulling image "kserve/storage-initializer:v0.10.0"
Normal   Pulled            100s                   kubelet            Successfully pulled image "kserve/storage-initializer:v0.10.0" in 15.186598864s (15.186607495s including waiting)
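The FailedScheduling event compares the pod's resource requests (cpu: 1, memory: 1Gi in `isvc_yaml`) against what is still unrequested on each node, not against the live usage shown by `k top`. A minimal sketch of that arithmetic with Kubernetes quantity strings; the function names and node figures are hypothetical, and the real scheduler checks much more than CPU and memory.

```python
from decimal import Decimal
from typing import Dict

# Kubernetes quantity suffixes (binary and decimal SI).
_BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}
_DECIMAL = {"k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12}


def cpu_millicores(quantity: str) -> int:
    """'1400m' -> 1400, '1' -> 1000, '0.5' -> 500."""
    if quantity.endswith("m"):
        return int(Decimal(quantity[:-1]))
    return int(Decimal(quantity) * 1000)


def memory_bytes(quantity: str) -> int:
    """'1Gi' -> 1073741824, '600M' -> 600000000."""
    for table in (_BINARY, _DECIMAL):  # check two-letter binary suffixes first
        for suffix, factor in table.items():
            if quantity.endswith(suffix):
                return int(Decimal(quantity[: -len(suffix)]) * factor)
    return int(Decimal(quantity))


def fits(allocatable: Dict[str, str], requested: Dict[str, str], pod: Dict[str, str]) -> bool:
    """True if the pod's requests fit into what is left unrequested on the node."""
    free_cpu = cpu_millicores(allocatable["cpu"]) - cpu_millicores(requested["cpu"])
    free_mem = memory_bytes(allocatable["memory"]) - memory_bytes(requested["memory"])
    return cpu_millicores(pod["cpu"]) <= free_cpu and memory_bytes(pod["memory"]) <= free_mem


if __name__ == "__main__":
    isvc_request = {"cpu": "1", "memory": "1Gi"}  # from isvc_yaml
    busy_node = {"cpu": "1930m", "memory": "7Gi"}  # hypothetical allocatable
    busy_requested = {"cpu": "1500m", "memory": "6Gi"}  # hypothetical sum of requests
    print(fits(busy_node, busy_requested, isvc_request))  # False: not enough CPU left
```

A t3a.2xlarge node (8 vCPUs and 32 GiB before system reservations) leaves plenty of room for a 1 CPU / 1Gi predictor, which is why the pod was scheduled once the new node group joined.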

k logs torchserve-predictor-default-00002-deployment-56f9769c54-sj95f -n kubeflow-user-example-com

😅 This will still not work, for two reasons:

- requirements.txt in the MAR file does not pin any versions, so the latest PyTorch and the latest PyTorch Lightning get installed.
- config.properties is wrong: it still uses the old KFServing request envelope instead of kservev2, which the v2 protocol of our InferenceService needs.

We need to patch both of these to make it work: requirements.txt lives inside the MAR file, while config.properties is uploaded next to it in MinIO.

We can always exec into our inference service pod to check the config.properties and requirements.txt it is using.

But here are the correct ones:

config.properties

inference_address=http://0.0.0.0:8085
management_address=http://0.0.0.0:8081
metrics_address=http://0.0.0.0:8082
enable_metrics_api=true
metrics_format=prometheus
install_py_dep_per_model=true
number_of_netty_threads=4
job_queue_size=10
service_envelope=kservev2
max_response_size = 655350000
model_store=/mnt/models/model-store
model_snapshot={"name":"startup.cfg","modelCount":1,"models":{"cifar10":{"1.0":{"defaultVersion":true,"marName":"cifar10_test.mar","minWorkers":1,"maxWorkers":5,"batchSize":1,"maxBatchDelay":5000,"responseTimeout":900}}}}

The service_envelope is now correctly set to kservev2.
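Before uploading a patched config.properties, it is worth checking the two settings that matter here. The sketch below is a minimal key=value parser (it ignores Java-properties escapes and line continuations, which this file does not use) plus a check on `service_envelope` and the `marName` inside `model_snapshot`; both function names are hypothetical.

```python
import json
from typing import Dict, List


def parse_properties(text: str) -> Dict[str, str]:
    """Minimal parser: 'key=value' per line, '#'/'!' comments, the first '=' splits."""
    props: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def config_problems(text: str, expected_mar: str) -> List[str]:
    """Return human-readable problems; an empty list means the config looks right."""
    props = parse_properties(text)
    problems: List[str] = []
    envelope = props.get("service_envelope")
    if envelope != "kservev2":
        problems.append(f"service_envelope is {envelope!r}, expected 'kservev2'")
    if "model_snapshot" not in props:
        problems.append("model_snapshot is missing")
        return problems
    snapshot = json.loads(props["model_snapshot"])
    mar_names = [
        version["marName"]
        for versions in snapshot["models"].values()
        for version in versions.values()
    ]
    if expected_mar not in mar_names:
        problems.append(f"{expected_mar} not in model_snapshot marNames {mar_names}")
    return problems


if __name__ == "__main__":
    sample = (
        "service_envelope=kservev2\n"
        'model_snapshot={"name":"startup.cfg","modelCount":1,"models":{"cifar10":'
        '{"1.0":{"defaultVersion":true,"marName":"cifar10_test.mar"}}}}\n'
    )
    print(config_problems(sample, "cifar10_test.mar") or "config looks OK")
```

Pointing it at the file downloaded from MinIO (read it and pass the text in) flags an outdated envelope before the predictor pod ever restarts.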

Also, here’s the right requirements.txt:

requirements.txt

--find-links https://download.pytorch.org/whl/cu117
torch==1.13.0
torchvision
pytorch-lightning==1.9.0
scikit-learn
captum

But how are we going to patch this in our pipeline?

🥲 Recreate the MAR File

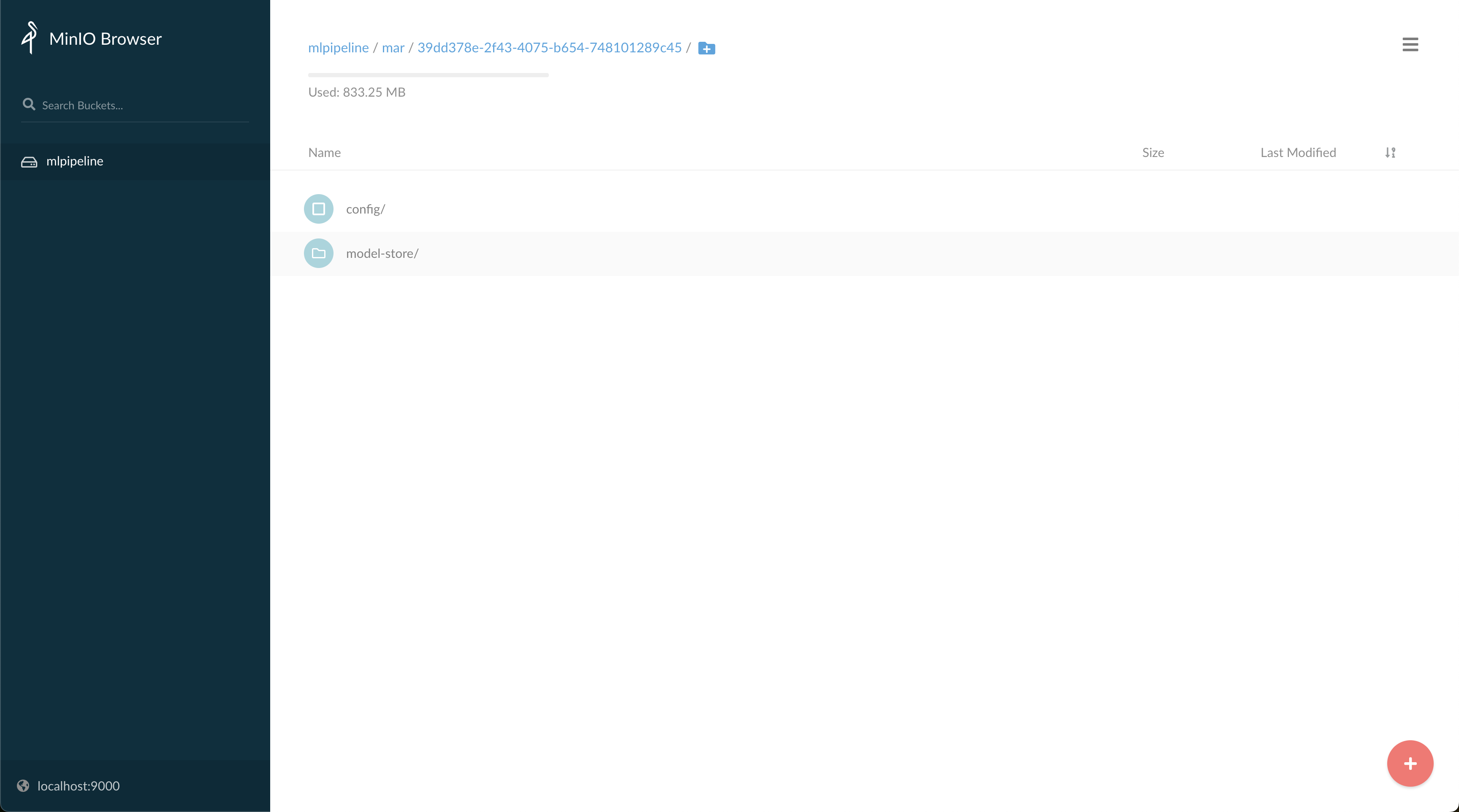

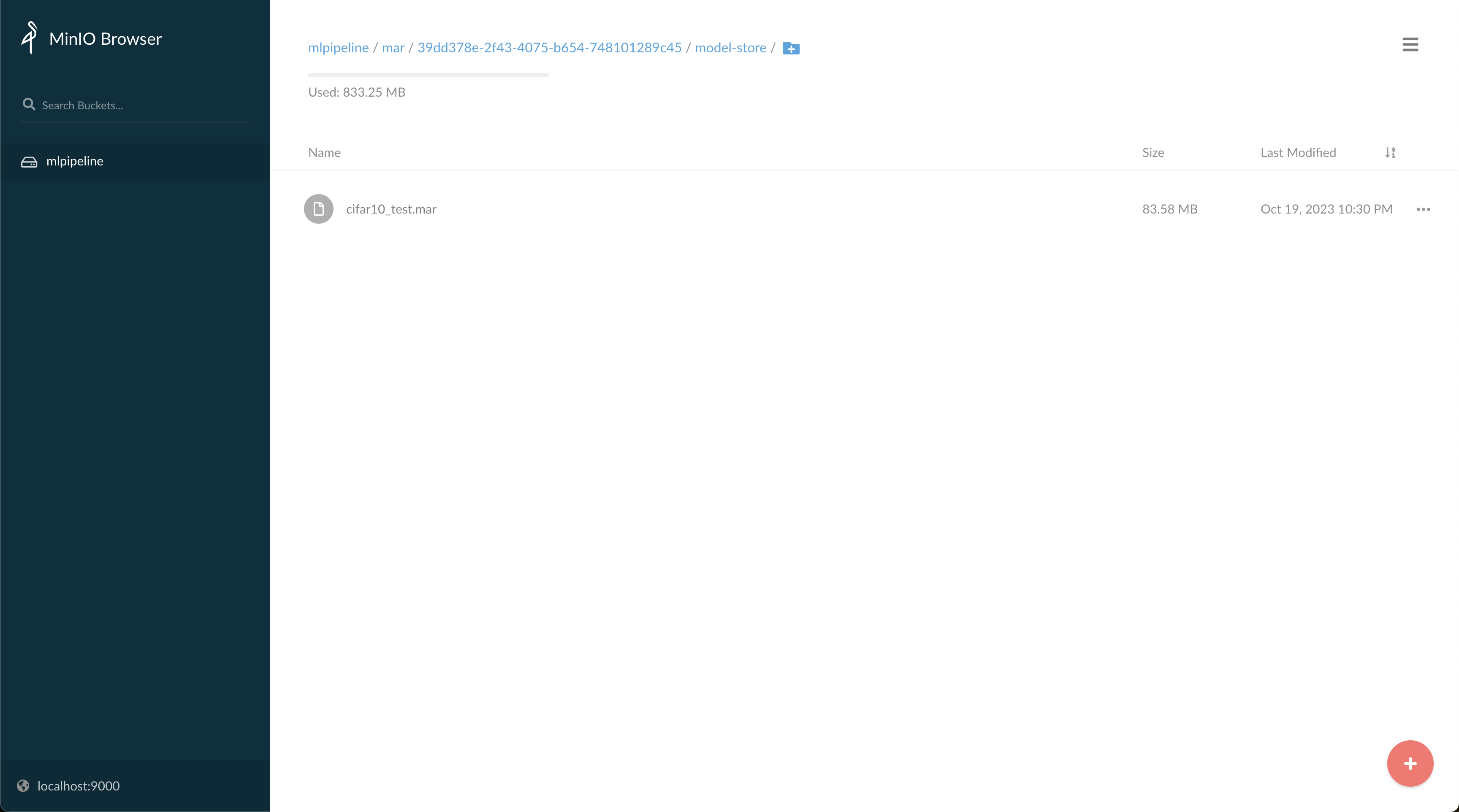

First, we need to get the existing .mar file and config.properties from MinIO:

kubectl port-forward -n kubeflow svc/minio-service 9000:9000

accessKey: minio
secretKey: minio123

The config folder contains config.properties, and the model-store folder contains the .mar file.
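Those folders come straight from the pipeline: every `minio_op` writes to the `mlpipeline` bucket, and the paths are built from the run ID. The sketch below derives the expected object keys for one run. It assumes the uploader joins `folder_name` and `filename` with a slash, and the run ID in the example is made up. The `storageUri` handed to the InferenceService is the parent `mar/<run-id>` prefix, which KServe's storage initializer downloads into `/mnt/models`; that is why config.properties points `model_store` at `/mnt/models/model-store`.

```python
from typing import Dict

BUCKET = "mlpipeline"  # bucket_name used by every minio_op in pytorch_cifar10


def artifact_keys(run_id: str) -> Dict[str, str]:
    """Expected MinIO object keys for one run (folder + '/' + filename is assumed)."""
    mar_root = f"mar/{run_id}"  # model_uri without the s3://mlpipeline/ prefix
    return {
        "mar_file": f"{mar_root}/model-store/cifar10_test.mar",
        "config": f"{mar_root}/config/config.properties",
        "tensorboard_logs": f"tensorboard/logs/{run_id}",
        "checkpoints": "checkpoint_dir/cifar10",
        "storage_uri": f"s3://{BUCKET}/{mar_root}",
    }


def local_path(key: str, run_id: str, mount: str = "/mnt/models") -> str:
    """Where an object under the storageUri prefix lands inside the predictor pod."""
    prefix = f"mar/{run_id}/"
    if not key.startswith(prefix):
        raise ValueError(f"{key} is outside the storageUri prefix")
    return f"{mount}/{key[len(prefix):]}"


if __name__ == "__main__":
    keys = artifact_keys("1234-abcd")  # hypothetical run ID
    for name, key in keys.items():
        print(f"{name:16} {key}")
    print(local_path(keys["mar_file"], "1234-abcd"))
```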

Download the .mar file.

A .mar file is simply a .zip file with some extra metadata in a MAR-INF folder, so we can take it to any machine and fiddle with it:

cp cifar10_test.mar cifar10_test.zip
unzip cifar10_test.zip

Now you can patch the files and recreate the .mar file.
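Since the archive is a zip file, Python's `zipfile` module can also list its members and read `MAR-INF/MANIFEST.json` or the bundled requirements.txt without extracting anything, which is handy for confirming what a .mar actually contains before and after patching. A minimal sketch; the helper names are hypothetical, and the example builds a tiny stand-in archive in memory with made-up manifest contents rather than reading the real cifar10_test.mar.

```python
import io
import json
import zipfile
from typing import BinaryIO, List, Union

MarSource = Union[str, BinaryIO]  # a path such as "cifar10_test.mar" or an open binary file


def list_mar(mar: MarSource) -> List[str]:
    """Names of all files stored in the archive."""
    with zipfile.ZipFile(mar) as zf:
        return zf.namelist()


def read_mar_text(mar: MarSource, member: str) -> str:
    """Read one member (e.g. requirements.txt) as UTF-8 text."""
    with zipfile.ZipFile(mar) as zf:
        return zf.read(member).decode("utf-8")


def read_manifest(mar: MarSource) -> dict:
    """Parse the archive metadata stored in MAR-INF/MANIFEST.json."""
    return json.loads(read_mar_text(mar, "MAR-INF/MANIFEST.json"))


if __name__ == "__main__":
    # Tiny stand-in archive so the example runs anywhere.
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w") as zf:
        zf.writestr("MAR-INF/MANIFEST.json", json.dumps({"model": {"modelName": "cifar10_test"}}))
        zf.writestr("requirements.txt", "torch==1.13.0\npytorch-lightning==1.9.0\n")
    print(list_mar(buf))
    print(read_mar_text(buf, "requirements.txt"))
```

With a real archive, `read_mar_text("cifar10_test.mar", "requirements.txt")` shows exactly which versions TorchServe will try to install.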

docker run -it --rm --shm-size=1g --ulimit memlock=-1 --ulimit stack=67108864 -v `pwd`:/opt/src pytorch/torchserve:0.7.0-cpu bash

Inside the container, go to the mounted directory and rebuild the archive (add --force if cifar10_test.mar is still in that directory, otherwise torch-model-archiver will not overwrite it):

cd /opt/src
torch-model-archiver --model-name cifar10_test --version 1 --handler cifar10_handler.py --model-file cifar10_train.py --serialized-file resnet.pth --extra-files class_mapping.json,classifier.py --requirements-file requirements.txt

Once done, replace the .mar file in MinIO with this patched file.

Similarly, replace config.properties in MinIO with the patched version.

Now simply delete the inference pod; a new pod is created and picks up the new config and model archive.

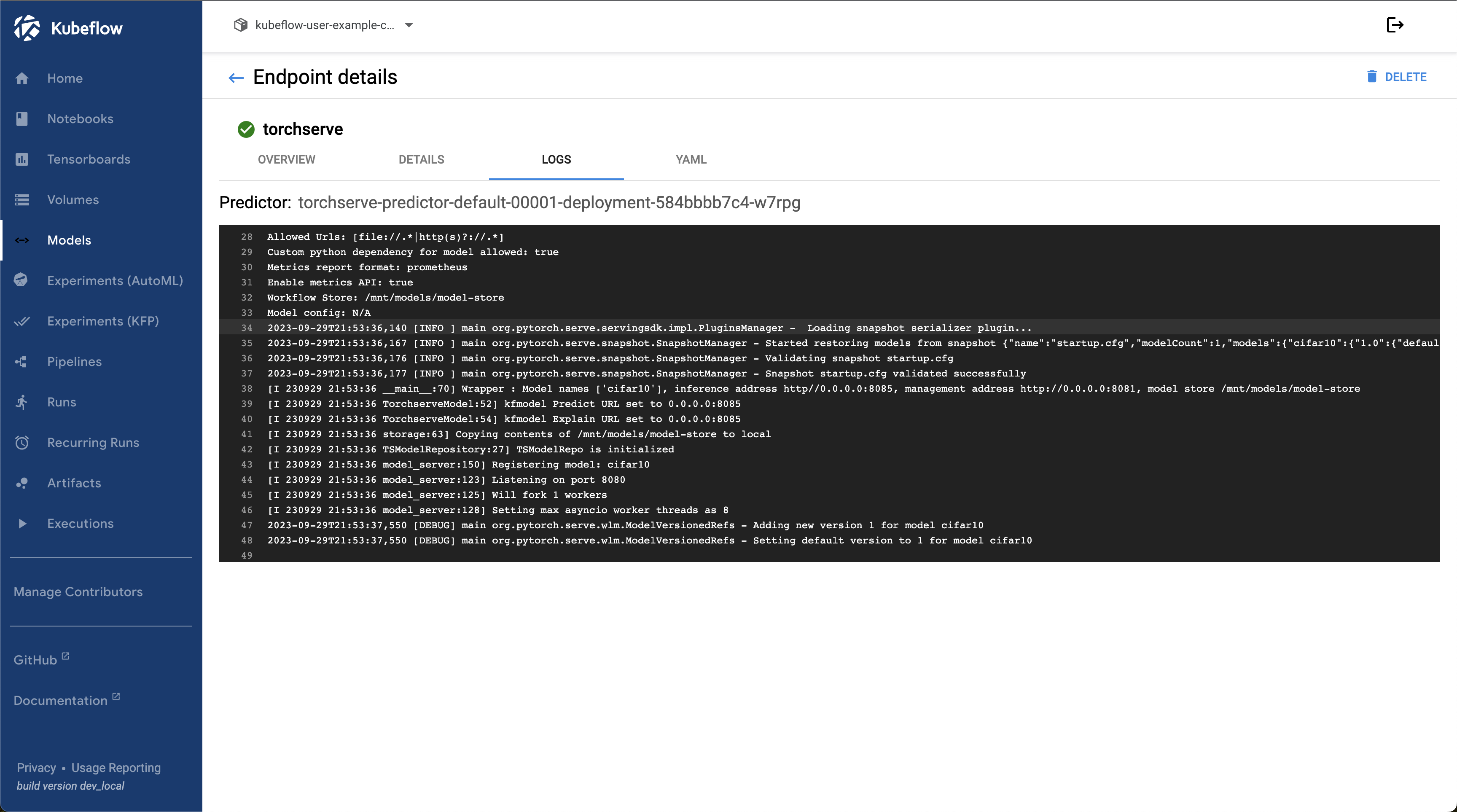

k delete pod torchserve-predictor-default-00001-deployment-6f6dd97f88-8clgj -n kubeflow-user-example-com

Monitor the logs of the inference service to see if the model loaded successfully:

2023-10-19T17:11:56,590 [WARN ] W-9000-cifar10_1-stderr MODEL_LOG - 95%|█████████▌| 93.3M/97.8M [00:00<00:00, 246MB/s]
2023-10-19T17:11:56,591 [WARN ] W-9000-cifar10_1-stderr MODEL_LOG - 100%|██████████| 97.8M/97.8M [00:00<00:00, 246MB/s]
2023-10-19T17:11:56,849 [INFO ] W-9000-cifar10_1-stdout MODEL_LOG - CIFAR10 model from path /home/model-server/tmp/models/5453ff5707ed42feb0a7977f9365bc2d loaded successfully

We can try inference now.

!kubectl get isvc $DEPLOY

INFERENCE_SERVICE_LIST = ! kubectl get isvc {DEPLOY_NAME} -n {NAMESPACE} -o json | python3 -c "import sys, json; print(json.load(sys.stdin)['status']['url'])"| tr -d '"' | cut -d "/" -f 3
INFERENCE_SERVICE_NAME = INFERENCE_SERVICE_LIST[0]
INFERENCE_SERVICE_NAME

Convert the image to bytes:

!python cifar10/tobytes.py cifar10/kitten.png

Curl our model endpoint:

!curl -v -H "Host: $INFERENCE_SERVICE_NAME" -H "Cookie: $COOKIE" "$INGRESS_GATEWAY/v2/models/$MODEL_NAME/infer" -d @./cifar10/kitten.json

<!DOCTYPE html>
<html lang="en">
<head>
<meta charset="utf-8">
<title>Error</title>
</head>
<body>
<pre>Cannot POST /v2/models/cifar10/infer</pre>
</body>
</html>

So now what?

The default Kubeflow installation doesn’t come with proper DNS settings for KServe, which is why Istio is not proxying the request to the KServe endpoint at all. The request must be hitting our Kubeflow frontend instead, which is why it says "Cannot POST".

This means we will not be able to reach our InferenceService through the Istio ingress gateway with the current configuration.

The fix is simple: patch the config-domain ConfigMap in Knative Serving, which KServe relies on:

kubectl patch cm config-domain --patch '{"data":{"emlo.tsai":""}}' -n knative-serving

This makes .emlo.tsai the domain suffix for our deployments, which changes the URL of our InferenceService!
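Knative builds each service's host name from a domain template, which by default is name.namespace.domain, so with this patch the InferenceService `torchserve` in `kubeflow-user-example-com` gets `torchserve.kubeflow-user-example-com.emlo.tsai`. The notebook pulls that host name out of `status.url` with `python3`, `tr` and `cut`; for URLs of this shape, the same extraction in plain Python is a one-liner with `urllib.parse`. A small sketch of both (the function names are hypothetical):

```python
from urllib.parse import urlsplit


def knative_host(name: str, namespace: str, domain: str) -> str:
    """Host produced by Knative's default domain template {name}.{namespace}.{domain}."""
    return f"{name}.{namespace}.{domain}"


def host_from_status_url(url: str) -> str:
    """Same result as `| tr -d '"' | cut -d "/" -f 3` on an isvc status URL."""
    return urlsplit(url.strip().strip('"')).netloc


if __name__ == "__main__":
    expected = knative_host("torchserve", "kubeflow-user-example-com", "emlo.tsai")
    actual = host_from_status_url("http://torchserve.kubeflow-user-example-com.emlo.tsai")
    print(expected, expected == actual)
```

This host name is what goes into the `Host` header of every request sent through the Istio ingress gateway.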

kg isvc -n kubeflow-user-example-com
NAME         URL                                                     READY   PREV   LATEST   PREVROLLEDOUTREVISION   LATESTREADYREVISION                  AGE
torchserve   http://torchserve.kubeflow-user-example-com.emlo.tsai   True           100                              torchserve-predictor-default-00001   87m

We can do a curl and check if the models are being listed:

!curl -v -H "Host: torchserve.kubeflow-user-example-com.emlo.tsai" \
  -H "Cookie: $COOKIE" \
  "http://istio-ingressgateway.istio-system.svc.cluster.local/v2/models"

* Trying 10.100.82.227:80...
* Connected to istio-ingressgateway.istio-system.svc.cluster.local (10.100.82.227) port 80 (#0)
> GET /v2/models HTTP/1.1
> Host: torchserve.kubeflow-user-example-com.emlo.tsai
> User-Agent: curl/7.68.0
> Accept: */*
> Cookie: authservice_session=MTY5NzcyNjMwMXxOd3dBTkVKQk5FaE5UamRIVGtoVFVFVkxSVGN5V2pOQ1IwbEtRMDFWVEZVelNGY3pURFZPVEVFMVJETTBSVkJSV2xsWVRraFpORkU9fCYy8cU5OU4NgGLWxAIPkBfiR9fiGw_jCNZG4h3C_O1-
>
< HTTP/1.1 200 OK
< content-length: 23
< content-type: application/json; charset=UTF-8
< date: Thu, 19 Oct 2023 18:17:18 GMT
< etag: "0b95baa9f82752699748d8353b8c2bd2f839fe98"
< server: envoy
< x-envoy-upstream-service-time: 24
<
* Connection #0 to host istio-ingressgateway.istio-system.svc.cluster.local left intact
{"models": ["cifar10"]}

Now we can perform inference!

MODEL_NAME="cifar10"
INGRESS_GATEWAY='http://istio-ingressgateway.istio-system.svc.cluster.local'
INFERENCE_SERVICE_LIST = ! kubectl get isvc {DEPLOY_NAME} -n {NAMESPACE} -o json | python3 -c "import sys, json; print(json.load(sys.stdin)['status']['url'])"| tr -d '"' | cut -d "/" -f 3
INFERENCE_SERVICE_NAME = INFERENCE_SERVICE_LIST[0]
INFERENCE_SERVICE_NAME

! curl -v -H "Host: $INFERENCE_SERVICE_NAME" -H "Cookie: $COOKIE" "$INGRESS_GATEWAY/v2/models/$MODEL_NAME/infer" -d @./cifar10/kitten.json

* Trying 10.100.82.227:80...
* Connected to istio-ingressgateway.istio-system.svc.cluster.local (10.100.82.227) port 80 (#0)
> POST /v2/models/cifar10/infer HTTP/1.1
> Host: torchserve.kubeflow-user-example-com.example.com
> User-Agent: curl/7.68.0
> Accept: */*
> Cookie: authservice_session=MTY5NzcyNjMwMXxOd3dBTkVKQk5FaE5UamRIVGtoVFVFVkxSVGN5V2pOQ1IwbEtRMDFWVEZVelNGY3pURFZPVEVFMVJETTBSVkJSV2xsWVRraFpORkU9fCYy8cU5OU4NgGLWxAIPkBfiR9fiGw_jCNZG4h3C_O1-
> Content-Length: 473057
> Content-Type: application/x-www-form-urlencoded
>
* We are completely uploaded and fine
< HTTP/1.1 200 OK
< content-length: 324
< content-type: application/json; charset=UTF-8
< date: Thu, 19 Oct 2023 18:00:29 GMT
< server: envoy
< x-envoy-upstream-service-time: 389
<
* Connection #0 to host istio-ingressgateway.istio-system.svc.cluster.local left intact
{"id": "6027c8ea-9d50-46a2-ba08-9534d05e430d", "model_name": "cifar10_test", "model_version": "1", "outputs": [{"name": "predict", "shape": [], "datatype": "BYTES", "data": [{"truck": 0.7997960448265076, "car": 0.07536693662405014, "plane": 0.05102648213505745, "frog": 0.04046350345015526, "ship": 0.014834731817245483}]}]}

It works!
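The same call can be made from Python inside the cluster, which makes the two routing ingredients explicit: the `Host` header selects the InferenceService at the Istio ingress gateway, and the `Cookie` header carries the authservice_session. The sketch below only builds the request with `urllib`. The body follows the general KServe v2 inference protocol shape, but the input name and shape are assumptions, and the exact JSON that tobytes.py writes into kitten.json may differ.

```python
import base64
import json
import urllib.request


def build_infer_request(gateway: str, host: str, model: str, cookie: str,
                        image_bytes: bytes) -> urllib.request.Request:
    """Build (but do not send) a KServe v2 infer request via the Istio ingress gateway."""
    body = {
        "inputs": [
            {
                "name": "input-0",  # hypothetical input name
                "shape": [1],  # one base64-encoded image
                "datatype": "BYTES",
                "data": [base64.b64encode(image_bytes).decode("ascii")],
            }
        ]
    }
    return urllib.request.Request(
        url=f"{gateway}/v2/models/{model}/infer",
        data=json.dumps(body).encode("utf-8"),
        headers={"Host": host, "Cookie": cookie, "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_infer_request(
        gateway="http://istio-ingressgateway.istio-system.svc.cluster.local",
        host="torchserve.kubeflow-user-example-com.emlo.tsai",
        model="cifar10",
        cookie="authservice_session=<AUTH>",  # placeholder, use your own cookie
        image_bytes=b"\x89PNG...",  # placeholder bytes, not a real image
    )
    print(req.get_method(), req.full_url)
    # To actually send it from a notebook pod: urllib.request.urlopen(req).read()
```

Unlike the curl call, which sends its body as application/x-www-form-urlencoded (visible in the verbose output), this request declares application/json.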

Notes:

! pip install ipywidgets==8.0.4
jupyter nbextension enable --py widgetsnbextension

Uninstall Kubeflow

make delete-kubeflow INSTALLATION_OPTION=helm DEPLOYMENT_OPTION=vanilla

PyTorch Job in Pipeline

https://github.com/kubeflow/pipelines/blob/master/components/kubeflow/pytorch-launcher/sample.py